Slide 2

The process of making an archive file is called archiving or packing.

An archive file is a file composed of one or more files along with metadata, which can include source volume and medium information, file directory structure, error detection and recovery information, and file comments. It usually employs some form of lossless compression. Archive files may also be encrypted in part or as a whole. Archive files are used to collect multiple data files together into a single file for easier portability and storage.

Computer archive files are created by file archiver software, optical disc authoring software, or disk image software, each of which uses an archive format determined by that software. The file extension or file header of the archive file indicates the file format used.

Slide 3

Archive files are sometimes accompanied by separate parity archive (PAR) files that

Archives can have extensions such as .zip, .rar, and .tar.

Lossless data compression is a class of data compression algorithms that allows the exact original data to be reconstructed from the compressed data. The term lossless is in contrast to lossy data compression, which only allows an approximation of the original data to be reconstructed, in exchange for better compression rates.

Lossless data compression is used in many applications. For example, it is used in the popular ZIP file format and in the Unix tool gzip. It is also often used as a component within lossy data compression technologies.
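As a minimal sketch of what "exact reconstruction" means in practice, the snippet below uses Python's standard `zlib` module, which implements DEFLATE (the method commonly used by ZIP and gzip). The sample text and variable names are illustrative assumptions, not part of the slides.

```python
import zlib

# Illustrative input: repetitive text compresses well.
original = b"An archive usually employs some form of lossless compression. " * 20

compressed = zlib.compress(original)    # DEFLATE-based compression
restored = zlib.decompress(compressed)  # reverses the compression

print(len(original), "->", len(compressed), "bytes")
print("exact match:", restored == original)
```

The restored bytes are identical to the input, which is the defining property of a lossless method.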

Slide 4

Lossless compression is used when it is important that the original and

Lossless compression techniques

Most lossless compression programs do two things in sequence: the first step generates a statistical model for the input data, and the second step uses this model to map input data to bit sequences in such a way that "probable" (e.g. frequently encountered) data will produce shorter output than "improbable" data.
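The two steps can be illustrated with a small sketch, assuming the simplest possible model: each symbol's probability is its relative frequency in the input. The function names `static_model` and `ideal_bits` are hypothetical. The second function does not produce bits; it only reports the ideal cost, $-\log_2 p$ bits per symbol, that a coder driven by this model would approach.

```python
import math
from collections import Counter

def static_model(data: str) -> dict[str, float]:
    """Step 1: estimate each symbol's probability from its frequency."""
    counts = Counter(data)
    total = len(data)
    return {sym: n / total for sym, n in counts.items()}

def ideal_bits(data: str, model: dict[str, float]) -> float:
    """Step 2 (cost only): probable symbols cost fewer bits than improbable ones."""
    return sum(-math.log2(model[sym]) for sym in data)

text = "this is an example of a huffman tree"
model = static_model(text)
total_bits = ideal_bits(text, model)

print(f"space: {-math.log2(model[' ']):.2f} bits, x: {-math.log2(model['x']):.2f} bits")
print(f"ideal total: {total_bits:.1f} bits for {len(text)} symbols")
```

The frequent space character costs far fewer bits than the rare `x`, which is exactly the "probable data produces shorter output" behaviour described above.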

The primary encoding algorithms used to produce bit sequences are Huffman coding and arithmetic coding. Arithmetic coding achieves compression rates close to the

Slide 5

best possible for a particular statistical model, which is given by the

There are two primary ways of constructing statistical models: in a static model, the data is analyzed and a model is constructed, then this model is stored with the compressed data. This approach is simple and modular, but has the disadvantage that the model itself can be expensive to store, and also that it forces a single model to be used for all data being compressed, and so performs poorly on files containing heterogeneous data. Adaptive models dynamically update the model as the data is compressed. Both the encoder and decoder begin with a trivial model, yielding poor compression of initial data, but as they learn more about the data performance improves. Most popular types of compression used in practice now use adaptive coders.
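A rough sketch of the adaptive idea, under the assumption of a byte-oriented model that starts with every byte value equally likely and increments a count after each symbol. The name `adaptive_costs` is hypothetical. A decoder can keep the same counts in step, so no model needs to be stored with the data.

```python
import math

def adaptive_costs(data: bytes) -> list[float]:
    """Ideal cost in bits of each byte under a model that learns as it goes."""
    counts = [1] * 256  # trivial starting model: all 256 byte values equally likely
    total = 256
    costs = []
    for byte in data:
        costs.append(-math.log2(counts[byte] / total))
        counts[byte] += 1  # update after coding, so the decoder can mirror it
        total += 1
    return costs

costs = adaptive_costs(b"a" * 64)
print(f"first symbol: {costs[0]:.2f} bits, last symbol: {costs[-1]:.2f} bits")
```

The first symbol costs a full 8 bits because the model knows nothing yet; later symbols get cheaper as the model adapts.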

Slide 6

Lossless compression methods may be categorized according to the type of data

Text

Statistical modeling algorithms for text (or text-like binary data such as executables) include:

• Context Tree Weighting method (CTW)

• Burrows-Wheeler transform (block sorting preprocessing that makes compression more efficient)

• LZ77 (used by DEFLATE)

• LZW

Slide 7

Multimedia

Techniques that take advantage of the specific characteristics of images such as

A hierarchical version of this technique takes neighboring pairs of data points, stores their difference and sum, and on a higher level with lower resolution continues with the sums. This is called the discrete wavelet transform. JPEG2000 additionally uses data points from other pairs and multiplication factors to mix them into the difference. These factors have to be integers so that the result is an integer under all circumstances. The values therefore grow, which increases file size, but the distribution of values is hopefully more peaked.
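The pairwise sum/difference scheme can be sketched as follows. This is only the plain sum-and-difference variant described in the first sentences, not the JPEG2000 filter; the function names are hypothetical and the input length is assumed to be a power of two.

```python
def forward(values):
    """One level: neighbouring pairs (a, b) become a sum a+b and a difference a-b."""
    pairs = list(zip(values[0::2], values[1::2]))
    return [a + b for a, b in pairs], [a - b for a, b in pairs]

def inverse(sums, diffs):
    """Undo one level: a = (s + d) / 2 and b = (s - d) / 2, both exact integers."""
    out = []
    for s, d in zip(sums, diffs):
        out += [(s + d) // 2, (s - d) // 2]
    return out

def decompose(values):
    """Keep the differences of every level and carry the sums upward."""
    levels, current = [], list(values)
    while len(current) > 1:
        current, diffs = forward(current)
        levels.append(diffs)
    return current[0], levels

def reconstruct(top, levels):
    current = [top]
    for diffs in reversed(levels):
        current = inverse(current, diffs)
    return current

samples = [10, 12, 11, 13, 40, 42, 41, 43]
top, levels = decompose(samples)
print(top, levels)
print(reconstruct(top, levels) == samples)
```

For smooth data most stored differences are small and cluster around a few values, which is the "more peaked" distribution a later entropy coder can exploit.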

Slide 8

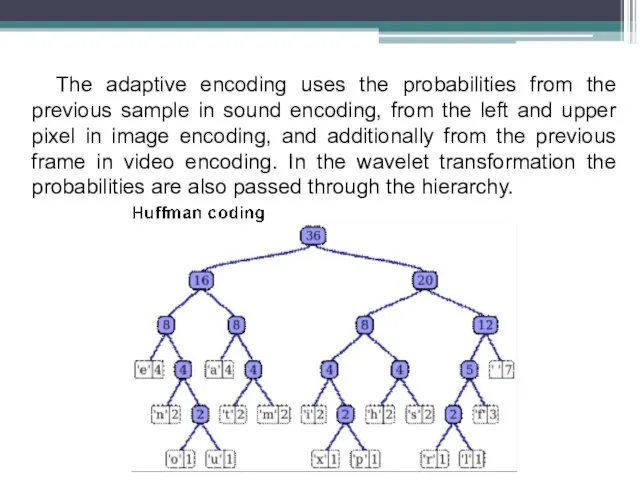

The adaptive encoding uses the probabilities from the previous sample in sound

Slide 9

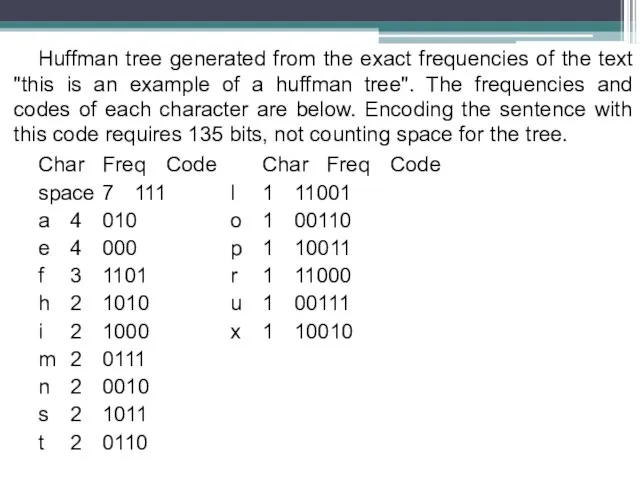

Huffman tree generated from the exact frequencies of the text "this is

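| Char | Freq | Code | Char | Freq | Code |
|---|---|---|---|---|---|
| space | 7 | 111 | l | 1 | 11001 |
| a | 4 | 010 | o | 1 | 00110 |
| e | 4 | 000 | p | 1 | 10011 |
| f | 3 | 1101 | r | 1 | 11000 |
| h | 2 | 1010 | u | 1 | 00111 |
| i | 2 | 1000 | x | 1 | 10010 |
| m | 2 | 0111 | | | |
| n | 2 | 0010 | | | |
| s | 2 | 1011 | | | |
| t | 2 | 0110 | | | |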
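A code with the same total length as the table above can be built with the usual greedy procedure: repeatedly merge the two least frequent subtrees. The sketch below is one possible implementation using Python's `heapq`; the function name `huffman_codes` is hypothetical. Ties between equal frequencies may be broken differently than in the slide, so individual codes can differ from the table, but the total encoded length is the same.

```python
import heapq
from collections import Counter

def huffman_codes(text: str) -> dict[str, str]:
    """Build a Huffman code by repeatedly merging the two lightest subtrees."""
    counts = Counter(text)
    # Heap entries: (weight, unique tie-breaker, {symbol: code built so far}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w0, _, left = heapq.heappop(heap)
        w1, _, right = heapq.heappop(heap)
        # Prefix the codes in one subtree with 0 and in the other with 1.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w0 + w1, next_id, merged))
        next_id += 1
    return heap[0][2]

text = "this is an example of a huffman tree"
codes = huffman_codes(text)
total_bits = sum(len(codes[ch]) for ch in text)
print(total_bits, "bits, versus", 8 * len(text), "bits at 8 bits per character")
```

Summing frequency times code length over the table gives 135 bits, and the sketch arrives at the same total.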

Slide 10

In computer science and information theory, Huffman coding is an entropy encoding

Huffman coding uses a specific method for choosing the representation for each symbol, resulting in a prefix code (sometimes called a "prefix-free code"): the bit string representing a particular symbol is never a prefix of the bit string representing any other symbol. The code expresses the most common characters using shorter strings of bits than are used

Slide 11

for less common source symbols. Huffman was able to design the most

For a set of symbols with a uniform probability distribution and a number of members which is a power of two, Huffman coding is equivalent to simple binary block encoding, e.g., ASCII coding. Huffman coding is such a widespread method for creating prefix codes that the term "Huffman code" is widely used as a synonym for "prefix code" even when such a code is not produced by Huffman's algorithm.
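The prefix property is what lets a decoder read a bit stream without separators between codes. The sketch below takes the codes from the table on slide 9, checks that none is a prefix of another, and decodes by emitting a symbol as soon as the accumulated bits match a code. The helper names are hypothetical.

```python
# Codes copied from the slide 9 table.
CODES = {
    " ": "111", "a": "010", "e": "000", "f": "1101",
    "h": "1010", "i": "1000", "m": "0111", "n": "0010",
    "s": "1011", "t": "0110", "l": "11001", "o": "00110",
    "p": "10011", "r": "11000", "u": "00111", "x": "10010",
}

def is_prefix_free(codes: dict[str, str]) -> bool:
    """True if no code word is a prefix of a different code word."""
    words = list(codes.values())
    return not any(a != b and b.startswith(a) for a in words for b in words)

def encode(text: str, codes: dict[str, str]) -> str:
    return "".join(codes[ch] for ch in text)

def decode(bits: str, codes: dict[str, str]) -> str:
    """Emit a symbol whenever the bits read so far form a complete code."""
    lookup = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in lookup:
            out.append(lookup[current])
            current = ""
    return "".join(out)

text = "this is an example of a huffman tree"
bits = encode(text, CODES)
print(is_prefix_free(CODES), len(bits), decode(bits, CODES) == text)
```

Because no code is a prefix of another, the first match the decoder finds is always the right one.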

Slide 12

Although Huffman coding is optimal for a symbol-by-symbol coding (i.e. a stream

Slide 13

Lempel–Ziv–Welch (LZW) is a universal lossless data compression algorithm created by Abraham

Encoding

A dictionary is initialized to contain the single-character strings corresponding to all the possible input characters (and nothing else). The algorithm works by scanning through the input string for successively longer substrings until it finds one that is not in the dictionary. When such a string is found, it is added to the dictionary, and the index for the string less the last character (i.e., the longest substring that is in the dictionary) is retrieved from the dictionary and sent to output. The last input character is then used as the next starting point to scan for substrings.
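The encoding procedure can be sketched as below, assuming the input alphabet is the 256 single-character strings with code points 0 to 255 and that the dictionary may grow without limit (real implementations usually cap the code width). The function name `lzw_encode` is hypothetical.

```python
def lzw_encode(data: str) -> list[int]:
    """Return the list of dictionary indices produced by LZW."""
    # Initial dictionary: every single-character string (code points 0..255).
    dictionary = {chr(i): i for i in range(256)}
    next_code = 256
    current = ""
    output = []
    for ch in data:
        candidate = current + ch
        if candidate in dictionary:
            current = candidate  # keep extending the match
        else:
            output.append(dictionary[current])  # longest string already known
            dictionary[candidate] = next_code   # register the new, longer string
            next_code += 1
            current = ch  # the last character starts the next scan
    if current:
        output.append(dictionary[current])
    return output

codes = lzw_encode("TOBEORNOTTOBEORTOBEORNOT")
print(codes)
```

The 24-character input becomes 16 codes: once "TO", "BE", and "OR" are in the dictionary, their later occurrences are emitted as single indices (256 and above).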

Slide 14

In this way successively longer strings are registered in the dictionary and

Decoding

The decoding algorithm works by reading a value from the encoded input and outputting the corresponding string from the initialized dictionary. At the same time it obtains the next value from the input, and registers to the dictionary the concatenation of that string and the first character of the string obtained by decoding the next input value. The decoder then proceeds to the next input value (which was already read in as the "next value" in the previous pass) and repeats the process until there is no more input, at which point the final input value is decoded without any more additions to the dictionary.
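A decoder along these lines is sketched below, again assuming a 256-entry initial dictionary; `lzw_decode` is a hypothetical name. One detail goes beyond the description above: the encoder can emit a code that the decoder has not registered yet (this happens for inputs such as "ABABABA"). In that case the missing string must be the previous string plus its own first character, and the sketch handles it explicitly.

```python
def lzw_decode(codes: list[int]) -> str:
    """Rebuild the text, and the dictionary, from a list of LZW codes."""
    if not codes:
        return ""
    dictionary = {i: chr(i) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    output = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            # Code not registered yet: it must be previous + previous[0].
            entry = previous + previous[0]
        else:
            raise ValueError(f"invalid LZW code: {code}")
        output.append(entry)
        # Register previous string + first character of the current string.
        dictionary[next_code] = previous + entry[0]
        next_code += 1
        previous = entry
    return "".join(output)

encoded = [84, 79, 66, 69, 79, 82, 78, 79, 84, 256, 258, 260, 265, 259, 261, 263]
print(lzw_decode(encoded))
```

No dictionary is transmitted: the decoder derives every entry from the codes themselves.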

Slide 15

In this way the decoder builds up a dictionary which is identical

The method became widely used in the program compress, which became a more or less standard utility in Unix systems circa 1986. (It has since disappeared from many systems for both legal and technical reasons, but as of 2008 at least FreeBSD includes the compress and uncompress utilities as part of the distribution.) Several other popular compression utilities also used the method, or closely related ones.

It became very widely used after it became part of the GIF image format in 1987. It may also (optionally) be used in TIFF files.

Slide 16

LZW compression provided a better compression ratio, in most applications, than any

Today, an implementation of the algorithm is contained within the popular Adobe Acrobat software program.

Run-length encoding (RLE) is a very simple form of data compression in which runs of data (that is, sequences in which the same data value occurs in many consecutive data elements) are stored as a single data value and count, rather than as the original run. This is most useful on data that contains many such runs: for example, relatively simple graphic images such as icons, line drawings, and animations. It is not recommended for use with files that don't have many runs as it could potentially double the file size.
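A minimal sketch of RLE over bytes, assuming a simple on-disk layout of (count, value) byte pairs with runs capped at 255. This layout is an illustrative choice, not a standard format, and the function names are hypothetical.

```python
from itertools import groupby

def rle_encode(data: bytes) -> bytes:
    """Store each run as a (count, value) byte pair; runs longer than 255 are split."""
    out = bytearray()
    for value, run in groupby(data):
        length = len(list(run))
        while length > 0:
            chunk = min(length, 255)
            out += bytes([chunk, value])
            length -= chunk
    return bytes(out)

def rle_decode(encoded: bytes) -> bytes:
    """Expand each (count, value) pair back into a run."""
    out = bytearray()
    for i in range(0, len(encoded), 2):
        count, value = encoded[i], encoded[i + 1]
        out += bytes([value]) * count
    return bytes(out)

runs = b"\x00" * 300 + b"\xff" * 20
no_runs = b"abcdef"
print(len(runs), "->", len(rle_encode(runs)))
print(len(no_runs), "->", len(rle_encode(no_runs)))
```

With this layout, data without runs doubles in size (6 bytes become 12), which is the risk noted above.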

Slide 17

JBIG is an image compression standard for bi-level images, developed by the

JBIG is a method for compressing bi-level (two-color) image data. The acronym JBIG stands for Joint Bi-level Image Experts Group, a standards committee that had its origins within the International Organization for Standardization (ISO). The compression standard they developed bears the name of this committee.

The main features of JBIG are:

• Lossless compression of one-bit-per-pixel image data

• Ability to encode individual bitplanes of multiple-bit pixels

• Progressive or sequential encoding of image data
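The bitplane feature can be pictured with a small sketch: a multiple-bit image is split into one bi-level image per bit position, and each of those can then be handed to a bi-level coder. The code below only shows the splitting and reassembly, not JBIG's actual coding; the function names and the flat pixel list are illustrative assumptions.

```python
def bit_planes(pixels: list[int], bits: int = 8) -> list[list[int]]:
    """Split multi-bit pixels into bi-level planes, most significant bit first."""
    return [[(p >> k) & 1 for p in pixels] for k in range(bits - 1, -1, -1)]

def from_planes(planes: list[list[int]]) -> list[int]:
    """Reassemble the original pixel values from their bit planes."""
    bits = len(planes)
    pixels = [0] * len(planes[0])
    for index, plane in enumerate(planes):
        shift = bits - 1 - index
        for i, bit in enumerate(plane):
            pixels[i] |= bit << shift
    return pixels

pixels = [0, 255, 128, 3]
planes = bit_planes(pixels)
print(planes[0])   # most significant plane
print(planes[-1])  # least significant plane
print(from_planes(planes) == pixels)
```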

Slide 18

Lossy compression

A lossy compression method is one where compressing data and then

Slide 19

Lossy and lossless compression

It is possible to compress many types of digital

The original contains a certain amount of information; there is a lower limit to the size of file that can carry all the information. As an intuitive example, most people know that a compressed ZIP file is smaller than the original file; but repeatedly compressing the file will not reduce the size to nothing.
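The ZIP intuition can be tried out with a short experiment. The sketch assumes Python's `zlib` as a stand-in for a ZIP compressor and uses made-up sample text; `unwrap` is a hypothetical helper. It compresses the same data five times in a row and records the size after each pass.

```python
import zlib

def unwrap(blob: bytes, passes: int) -> bytes:
    """Decompress `passes` times to get back to the original data."""
    for _ in range(passes):
        blob = zlib.decompress(blob)
    return blob

data = ("The original contains a certain amount of information. " * 200).encode()

sizes = [len(data)]
current = data
for _ in range(5):
    current = zlib.compress(current, 9)  # compress the previous output again
    sizes.append(len(current))

print(sizes)
print(unwrap(current, 5) == data)
```

The first pass removes most of the redundancy. Later passes have little left to remove and add their own header bytes each time, so the size never approaches zero.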

Slide 20

In many cases files or data streams contain more information than is

JPEG compression

The compression method is usually lossy, meaning that some original image information is lost and cannot be restored (possibly affecting image quality). There are variations on the standard baseline JPEG that are lossless; however, these are not widely supported.

Slide 21

There is also an interlaced "Progressive JPEG" format, in which data is

There are also many medical imaging systems that create and process 12-bit JPEG images. The 12-bit JPEG format has been part of the JPEG specification for some time, but again, this format is not as widely supported.

JPEG stands for Joint Photographic Experts Group, which is a standardization committee. The name also refers to the compression algorithm that this committee developed.

Slide 22

There are two JPEG compression algorithms: the oldest one is simply referred

JPEG is a lossy compression algorithm designed to reduce the file size of natural, photograph-like true-color images as much as possible without affecting the quality of the image as perceived by the human visual system. We perceive small changes in brightness more readily than we do small changes in color. It is this aspect of our perception that JPEG compression exploits in an effort to reduce the file size
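One common way this is exploited, not spelled out on the slide and so labeled here as an assumption about typical JPEG encoders, is to separate brightness from color and then store the color channels at lower resolution. The sketch below uses the JFIF RGB-to-YCbCr conversion and a plain 2x2 average; the function names are hypothetical and image dimensions are assumed to be even.

```python
def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """JFIF conversion: Y carries brightness, Cb and Cr carry color."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_2x2(plane: list[list[float]]) -> list[list[float]]:
    """Replace each 2x2 block of a color plane with its average value."""
    return [
        [
            (plane[r][c] + plane[r][c + 1] + plane[r + 1][c] + plane[r + 1][c + 1]) / 4
            for c in range(0, len(plane[0]), 2)
        ]
        for r in range(0, len(plane), 2)
    ]

gray = rgb_to_ycbcr(128, 128, 128)
small = subsample_2x2([[1, 3], [5, 7]])
print(gray)
print(small)
```

Keeping the Y plane at full resolution while averaging Cb and Cr this way quarters the number of color samples, and that loss is the kind the eye tends not to notice.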

Токарные инструменты

Токарные инструменты МУДРЫЕ ВЫСКАЗЫВАНИЯ

МУДРЫЕ ВЫСКАЗЫВАНИЯ Космические роботы

Космические роботы М.Ю.Лермонтов «Утес»

М.Ю.Лермонтов «Утес» С Днем геолога

С Днем геолога Что умеет компьютер (1 класс)

Что умеет компьютер (1 класс) Имя Алексей происходит от древнегреческого слова «алекс», означающего «защищать».

Имя Алексей происходит от древнегреческого слова «алекс», означающего «защищать». Ten Principles of Economics 1

Ten Principles of Economics 1 Организация работы стационаров. Инсультные центры

Организация работы стационаров. Инсультные центры  Понятие о праве

Понятие о праве АГ-лечение

АГ-лечение 2009 год молодёжи

2009 год молодёжи ВІЛЬНЕ ПАДІННЯ

ВІЛЬНЕ ПАДІННЯ Приготовление котлетной массы из птицы и полуфабрикатов из нее

Приготовление котлетной массы из птицы и полуфабрикатов из нее Презентация на тему Смерчи и торнадо

Презентация на тему Смерчи и торнадо ООО Тринити-К. Зимняя практика 2021

ООО Тринити-К. Зимняя практика 2021 Вычисление объемов пространственных тел с помощью интеграла

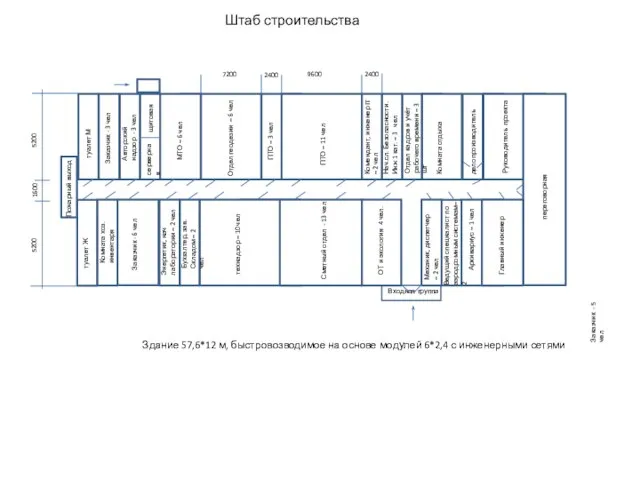

Вычисление объемов пространственных тел с помощью интеграла Штаб строительства

Штаб строительства FREE Крис Андерсон

FREE Крис Андерсон В. Маяковский

В. Маяковский 20170903_sladkaya_promyshlennost

20170903_sladkaya_promyshlennost Самолет президента спешит на помощь

Самолет президента спешит на помощь ЭКОЛОГИЧЕСКИЙ ПРАКТИКУМ

ЭКОЛОГИЧЕСКИЙ ПРАКТИКУМ Народный бюджет. Современная спортивно-игровая площадка ПолиСада

Народный бюджет. Современная спортивно-игровая площадка ПолиСада А.Н.Островский

А.Н.Островский Математика – наука молодых. Иначе и не может быть. Занятия математикой - это

Математика – наука молодых. Иначе и не может быть. Занятия математикой - это Презентация на тему Треугольники 7 класс

Презентация на тему Треугольники 7 класс Социальные инвестиции автомобильного завода

Социальные инвестиции автомобильного завода