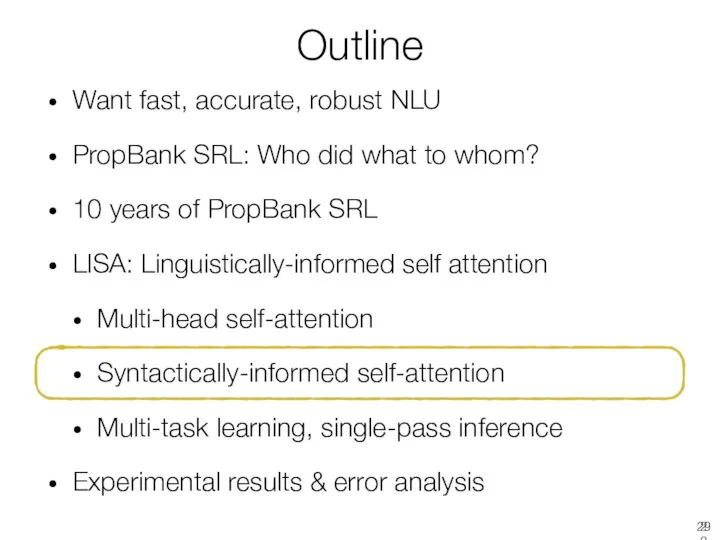

Contents

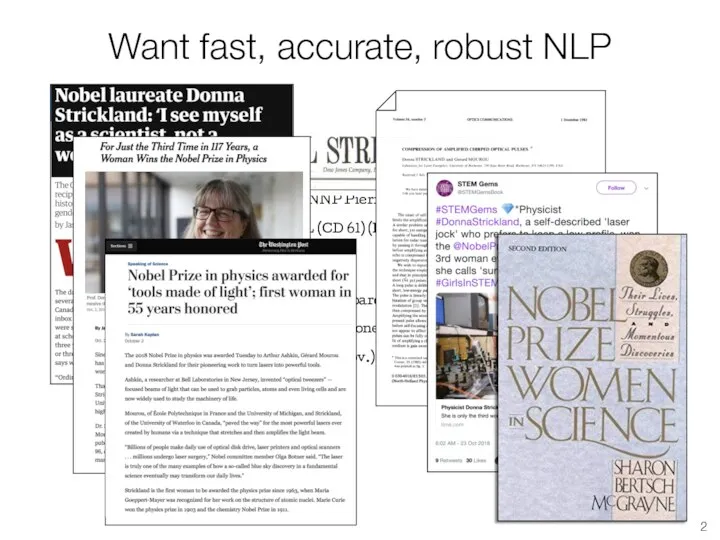

- 2. Want fast, accurate, robust NLP

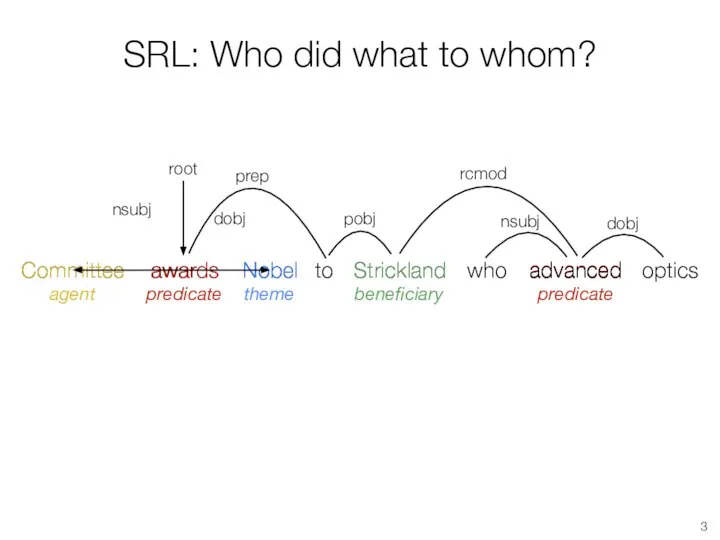

- 3. Strickland Committee Nobel who awards advanced advanced SRL: Who did what to whom? Committee awards Nobel

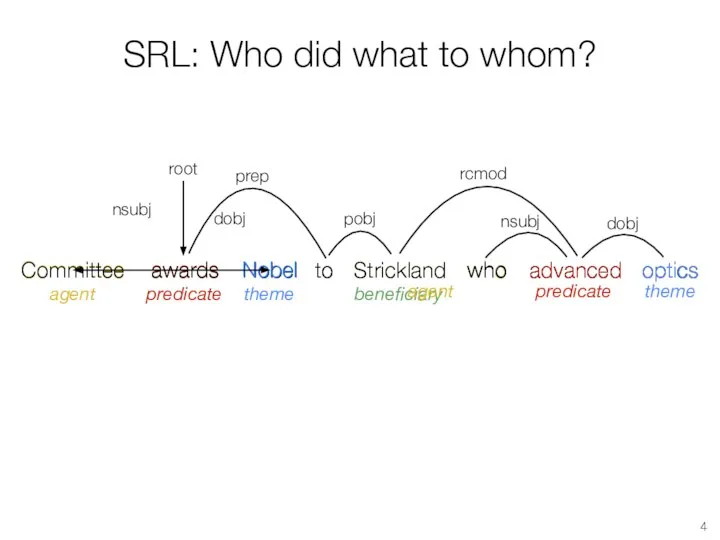

- 4. Nobel advanced optics Strickland Strickland who who advanced awards Committee SRL: Who did what to whom?

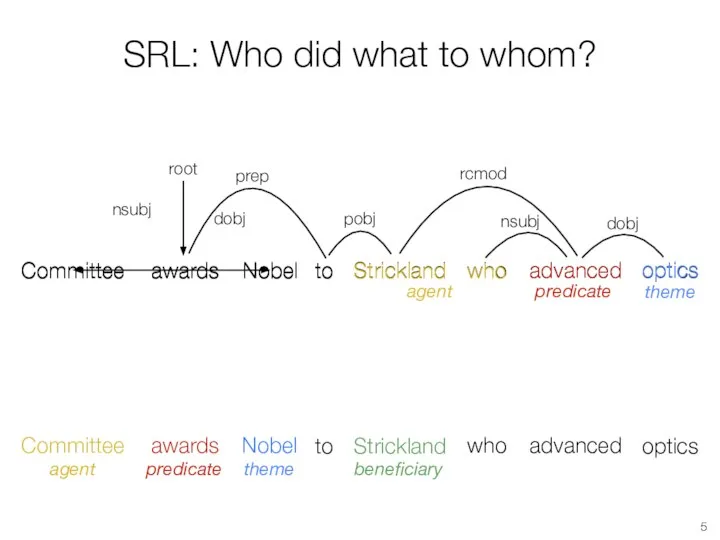

- 5. who advanced optics awards Nobel Committee Strickland to SRL: Who did what to whom? root nsubj

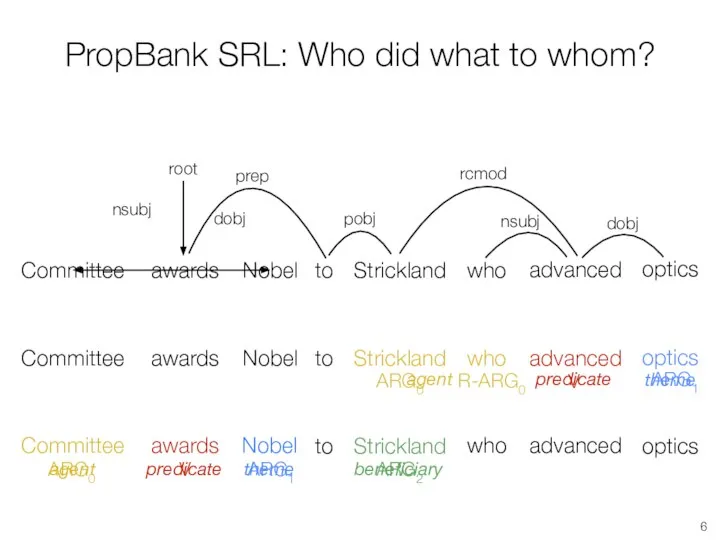

- 6. who advanced awards Committee Nobel Strickland who to optics agent predicate theme beneficiary Strickland advanced Nobel

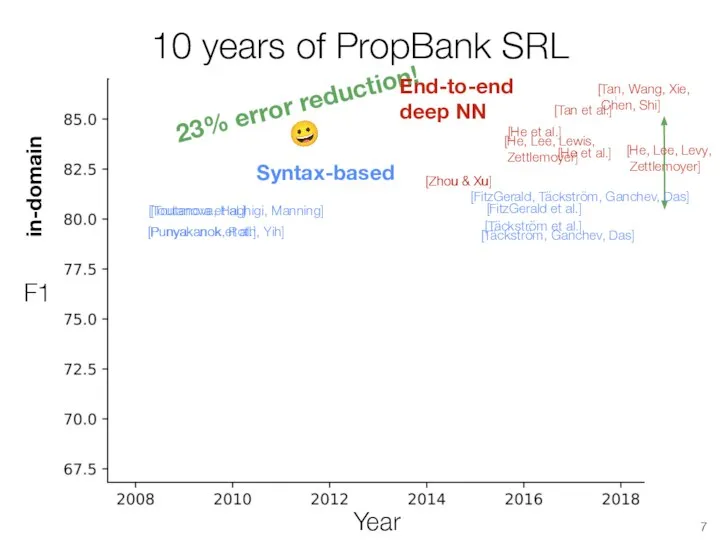

- 7. 10 years of PropBank SRL (plot: F1 by year). 23% error reduction! [Punyakanok et al.] [Toutanova et al.]

- 8. 10 years of PropBank SRL (plot: F1 by year). [Punyakanok et al.] [Toutanova et al.] [Tackström et al.]

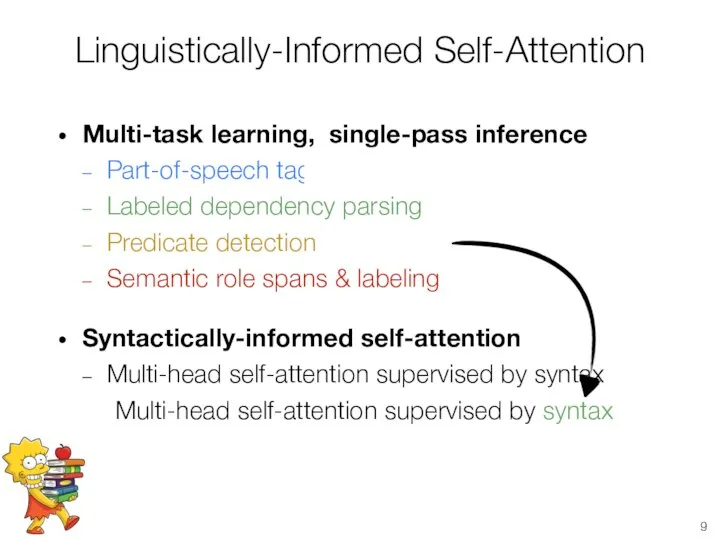

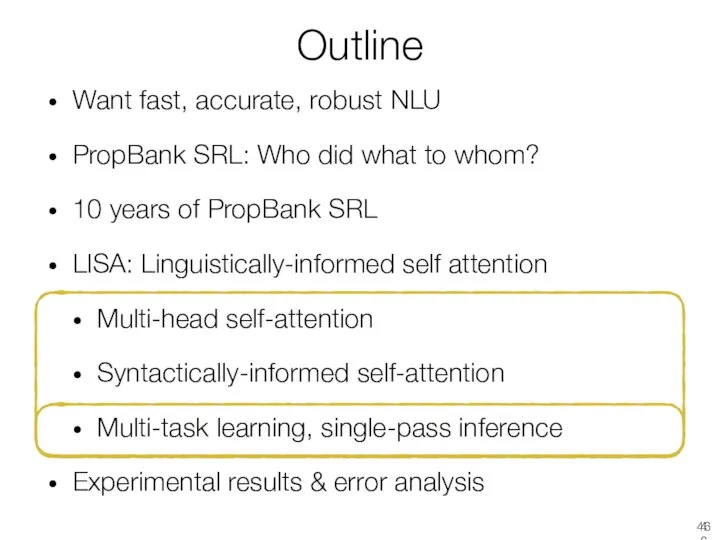

- 9. Linguistically-Informed Self-Attention: multi-task learning, single-pass inference. Tasks: part-of-speech tagging, labeled dependency parsing, predicate detection, semantic role spans.

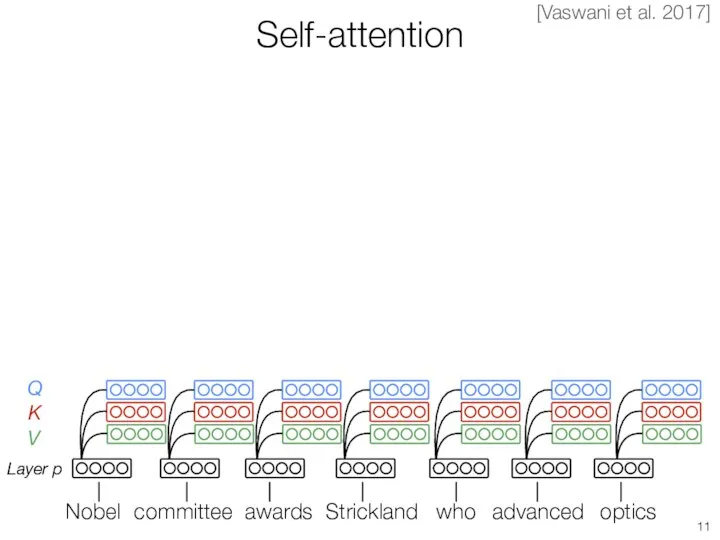

- 10. [Vaswani et al. 2017]

- 11. Self-attention committee awards Strickland advanced optics who Layer p Q K V [Vaswani et al. 2017]

- 12. Self-attention Layer p Q K V [Vaswani et al. 2017] committee awards Strickland advanced optics who

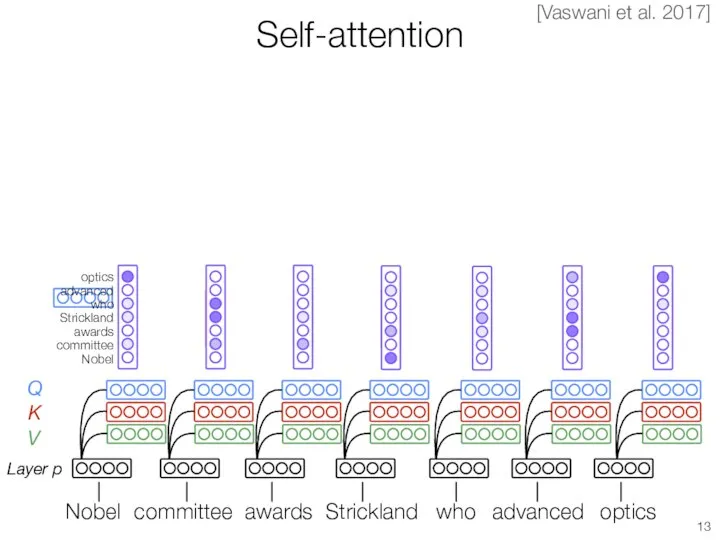

- 13. Self-attention Layer p Q K V optics advanced who Strickland awards committee Nobel [Vaswani et al.

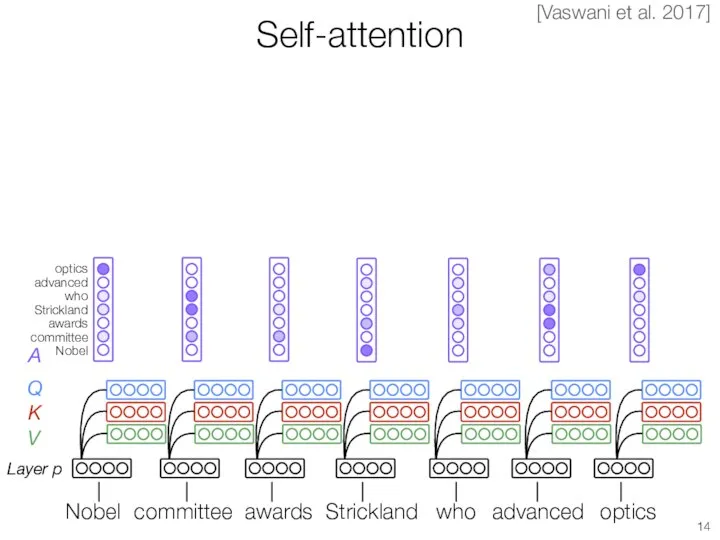

- 14. optics advanced who Strickland awards committee Nobel Self-attention Layer p Q K V A [Vaswani et

- 15. Self-attention Layer p Q K V [Vaswani et al. 2017] committee awards Strickland advanced optics who

- 16. Self-attention Layer p Q K V [Vaswani et al. 2017] committee awards Strickland advanced optics who

- 17. Self-attention Layer p Q K V M [Vaswani et al. 2017] committee awards Strickland advanced optics

- 18. Self-attention Layer p Q K V M [Vaswani et al. 2017] committee awards Strickland advanced optics
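The self-attention slides above name the queries Q, keys K, values V, attention weights A, and output M. A minimal sketch of scaled dot-product self-attention in the style of Vaswani et al. (2017) is given here in plain Python. The helper names and the toy numbers are assumptions for illustration and do not come from the slides.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(q, k, v):
    """Return (A, M): A = softmax(Q K^T / sqrt(d_k)) row by row, M = A V."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    a = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return a, matmul(a, v)


# Toy inputs (made-up numbers): 3 tokens, 2 dimensions each.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
a, m = self_attention(q, k, v)
```

Each row of `a` is a probability distribution over the tokens, and each row of `m` is the corresponding weighted average of the value vectors.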

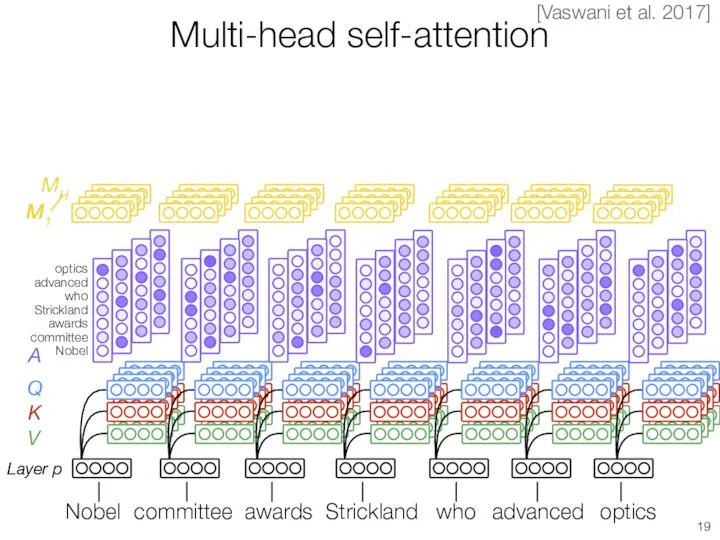

- 19. Multi-head self-attention Layer p Q K V M M1 MH [Vaswani et al. 2017] committee awards

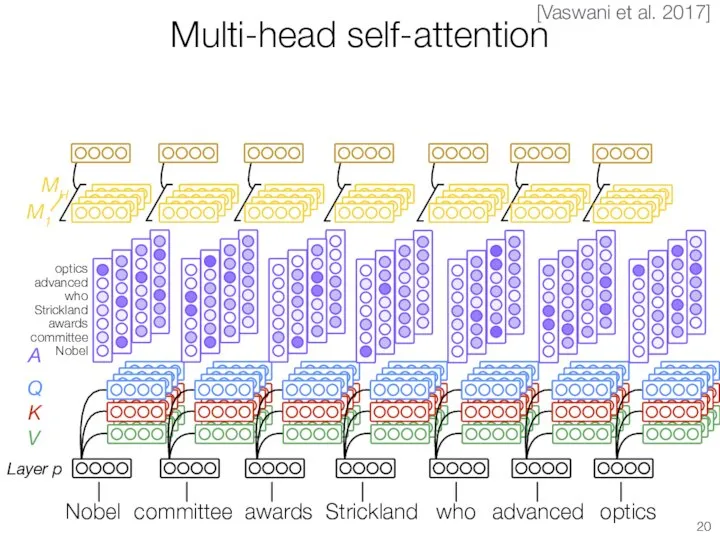

- 20. Multi-head self-attention Layer p Q K V MH M1 [Vaswani et al. 2017] committee awards Strickland

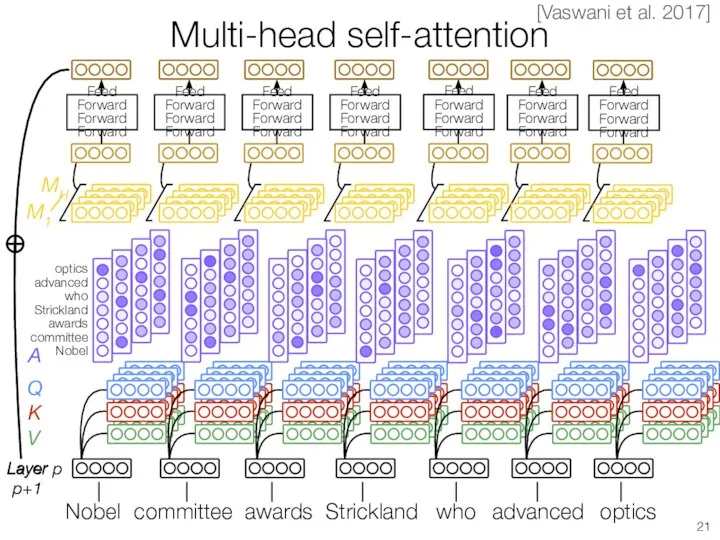

- 21. Multi-head self-attention Layer p Q K V MH M1 Layer p+1 committee awards Strickland advanced optics

- 22. Multi-head self-attention committee awards Strickland advanced optics who Nobel [Vaswani et al. 2017] p+1
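The multi-head slides show several heads M1 … MH computed side by side and passed on to layer p+1. A rough sketch of that step follows, assuming each head has its own query, key, and value projection and that the head outputs are concatenated per token. The projection matrices are hypothetical toy values, and the feed-forward sublayer is left out.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def attention_head(x, w_q, w_k, w_v):
    """One head: project x to Q, K, V, then return M_h = softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    a = [softmax([s / math.sqrt(d_k) for s in row]) for row in matmul(q, k_t)]
    return matmul(a, v)


def multi_head(x, heads):
    """heads is a list of (w_q, w_k, w_v); concatenate M_1..M_H for every token."""
    outputs = [attention_head(x, w_q, w_k, w_v) for w_q, w_k, w_v in heads]
    return [[val for m_h in outputs for val in m_h[t]] for t in range(len(x))]


# Toy token representations (made-up): 3 tokens, model dimension 4.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
# Hypothetical projections: head 1 reads dimensions 0-1, head 2 reads dimensions 2-3.
w_a = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
w_b = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
out = multi_head(x, [(w_a, w_a, w_a), (w_b, w_b, w_b)])
```

With two heads of width 2, every token ends up with a 4-dimensional vector again, which is what lets the layers be stacked.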

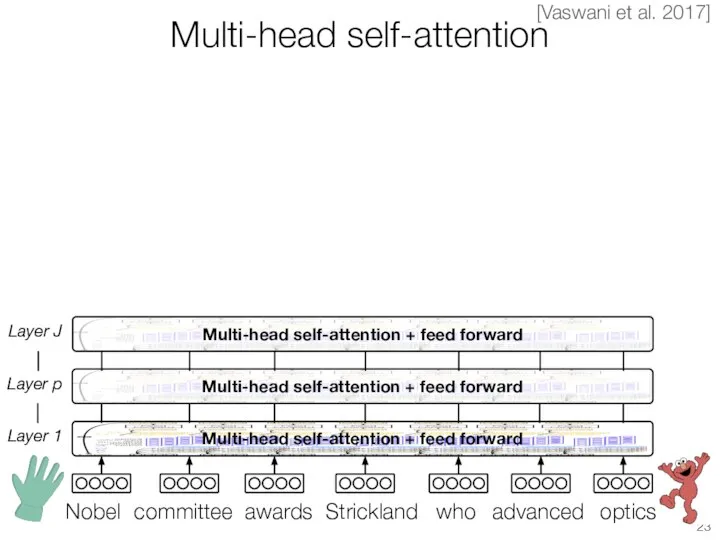

- 23. Stacked layers, Layer 1 through Layer p: multi-head self-attention + feed forward in each layer

- 24. [Vaswani et al. 2017]

- 25. How to incorporate syntax? Multi-task learning [Caruana 1993; Collobert et al. 2011]: Overfits to training domain

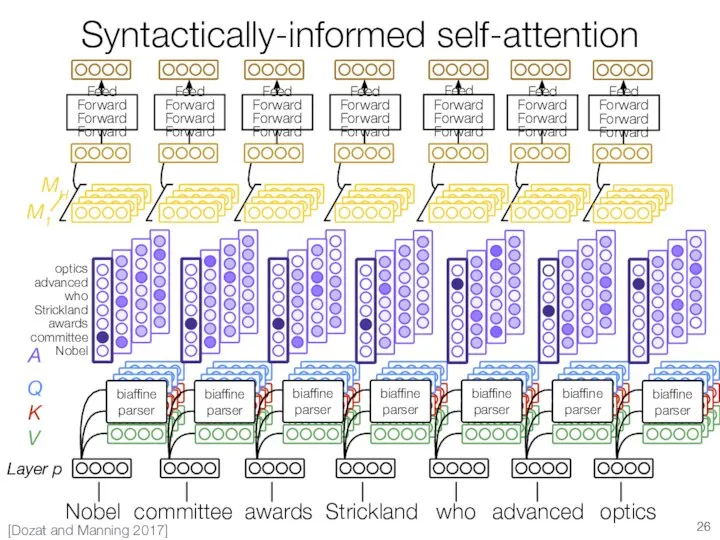

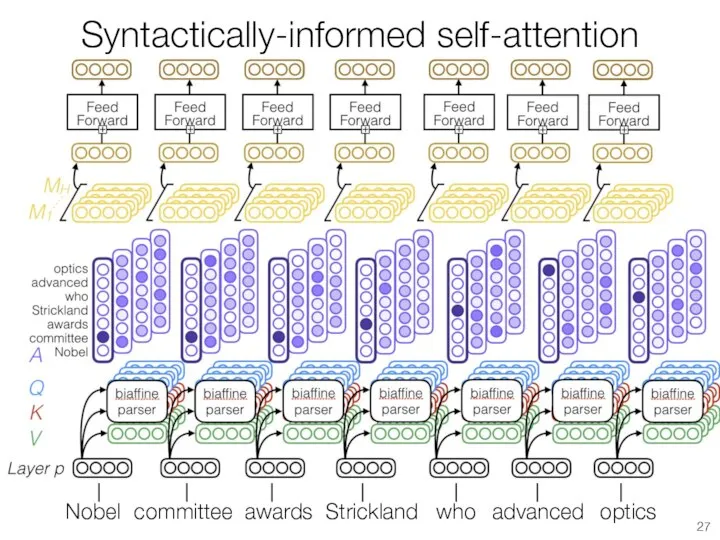

- 26. Syntactically-informed self-attention Layer p Q K V biaffine parser biaffine parser biaffine parser biaffine parser biaffine

- 27. Syntactically-informed self-attention committee awards Strickland advanced optics who Nobel

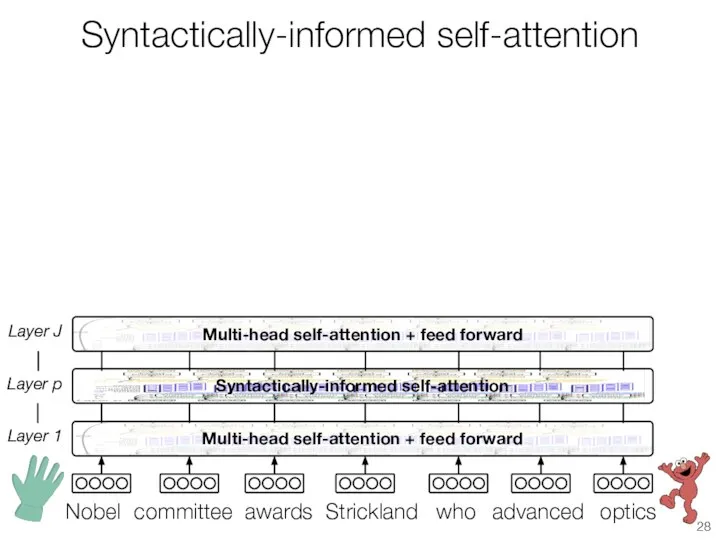

- 28. Syntactically-informed self-attention Multi-head self-attention + feed forward Syntactically-informed self-attention Layer 1 Layer p Multi-head self-attention +
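The slides above pair one attention head with a biaffine parser, and the summary slide describes that head as trained to attend to syntactic parents. A simplified sketch of that idea is shown here. It assumes a plain bilinear score `q_i^T U k_j` with no bias terms, a cross-entropy loss against gold parent indices, and a made-up convention that the root token points to itself. None of the values come from the slides.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear_parse_attention(q, k, u):
    """A_parse[i][j]: weight token i places on token j as its syntactic parent."""
    rows = []
    for q_i in q:
        # q_i^T U, computed once per query token.
        q_u = [sum(q_i[r] * u[r][c] for r in range(len(u))) for c in range(len(u[0]))]
        rows.append(softmax([sum(a * b for a, b in zip(q_u, k_j)) for k_j in k]))
    return rows


def parent_loss(a_parse, gold_heads):
    """Mean negative log-likelihood of each token's gold parent index."""
    total = sum(math.log(a_parse[i][h]) for i, h in enumerate(gold_heads))
    return -total / len(gold_heads)


# Toy values (assumptions): 3 tokens, 2 dimensions, identity bilinear matrix.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[0.0, 2.0], [2.0, 0.0], [1.0, 1.0]]
u = [[1.0, 0.0], [0.0, 1.0]]
gold_heads = [1, 0, 2]  # hypothetical parents; token 2 plays the root here

a_parse = bilinear_parse_attention(q, k, u)
predicted_heads = [max(range(len(row)), key=row.__getitem__) for row in a_parse]
loss = parent_loss(a_parse, gold_heads)
```

Because the parse is read off an attention matrix, the same weights can serve as the attention head and as the parser's head predictions in a single pass.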

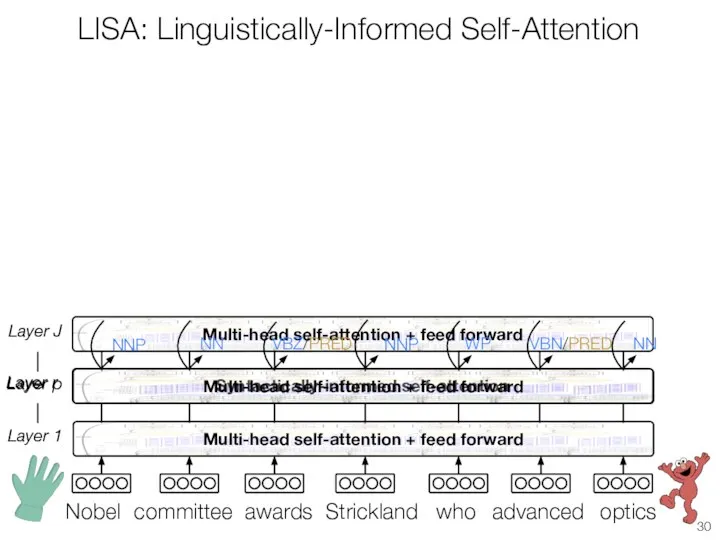

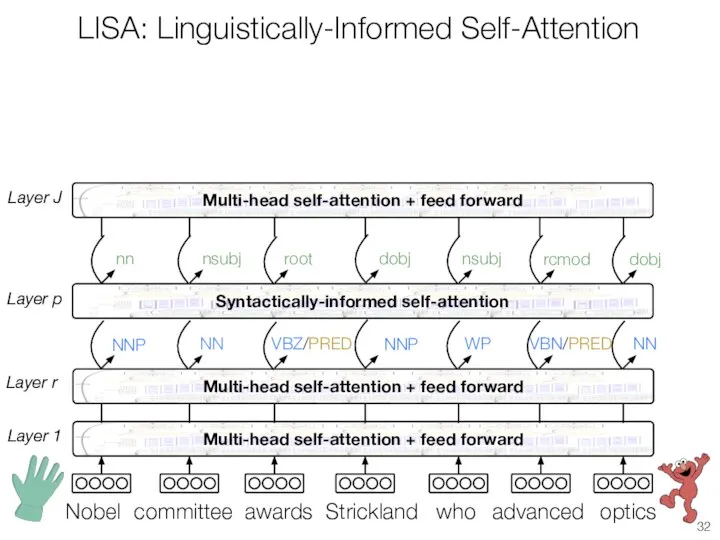

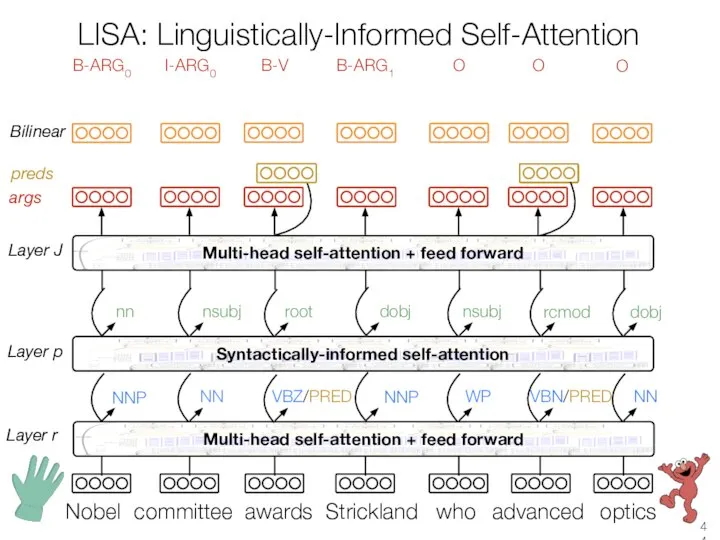

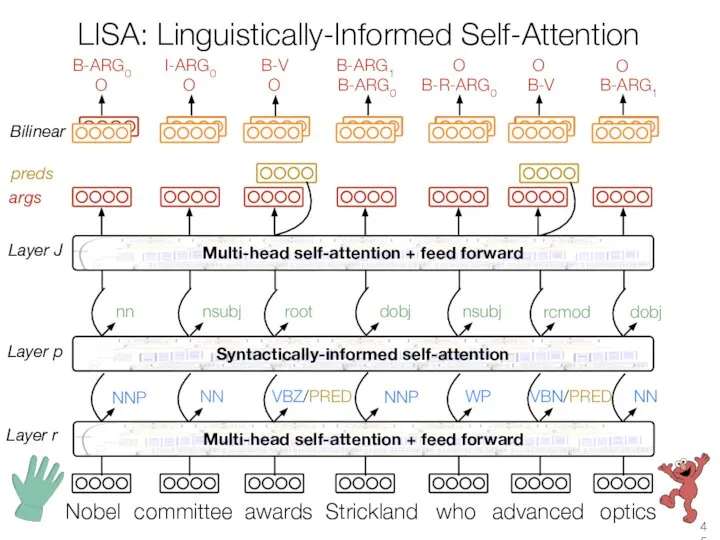

- 30. LISA: Linguistically-Informed Self-Attention Layer 1 Layer r NNP NN VBZ/PRED NNP WP VBN/PRED NN committee awards

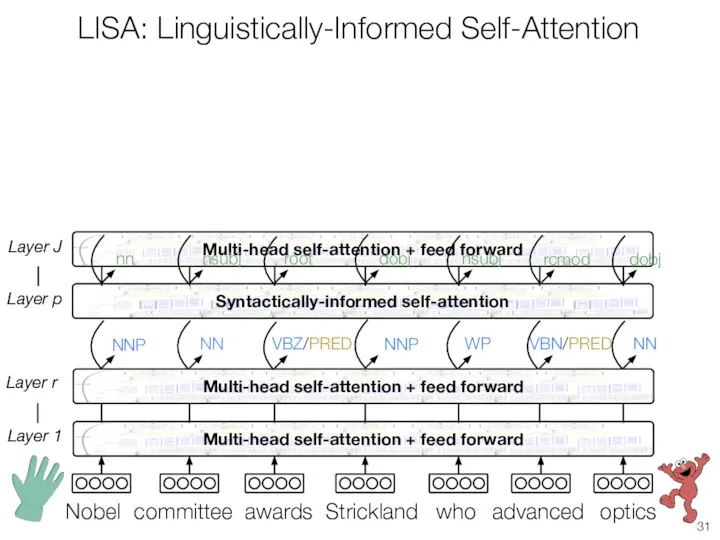

- 31. LISA: Linguistically-Informed Self-Attention Layer 1 Syntactically-informed self-attention Layer p Layer r committee awards Strickland advanced optics

- 32. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel

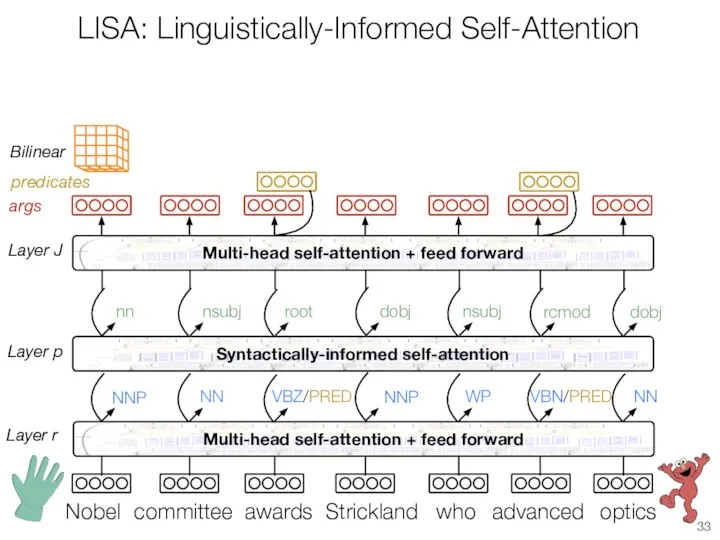

- 33. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel args predicates Bilinear

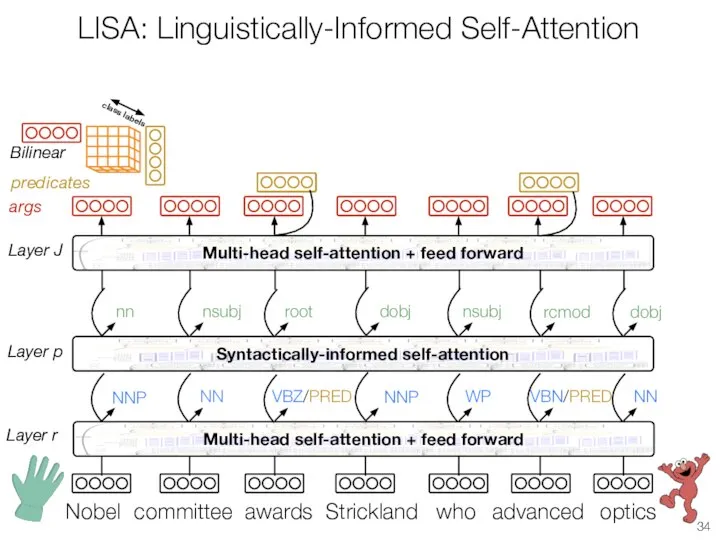

- 34. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel args predicates Bilinear

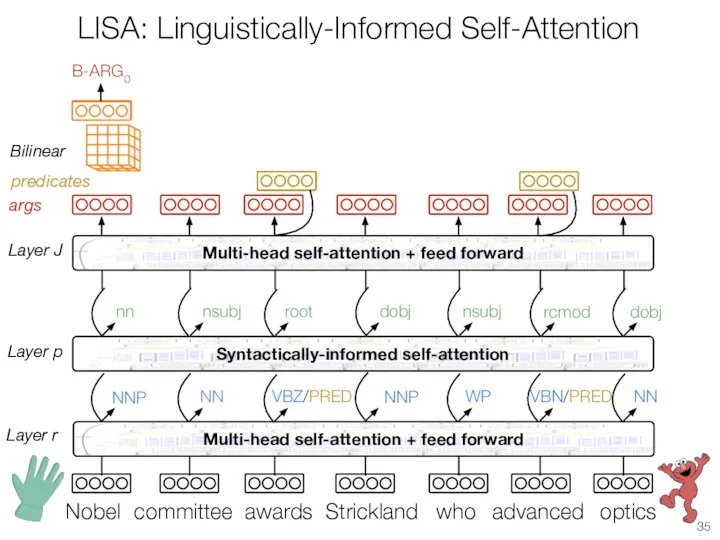

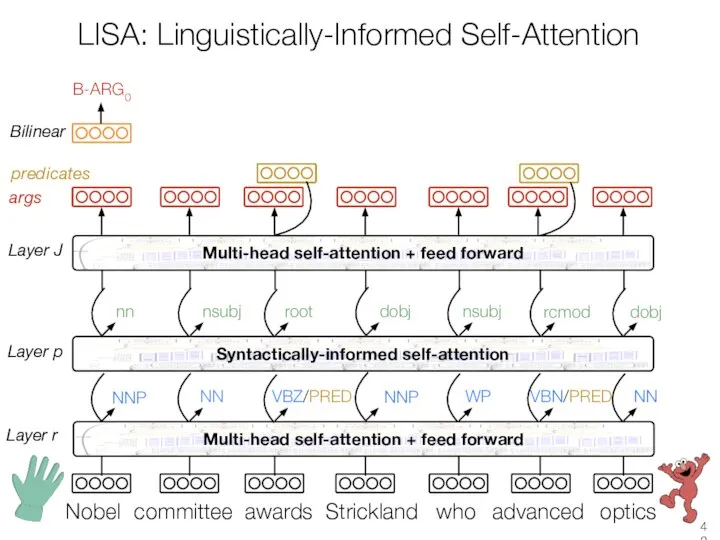

- 35. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

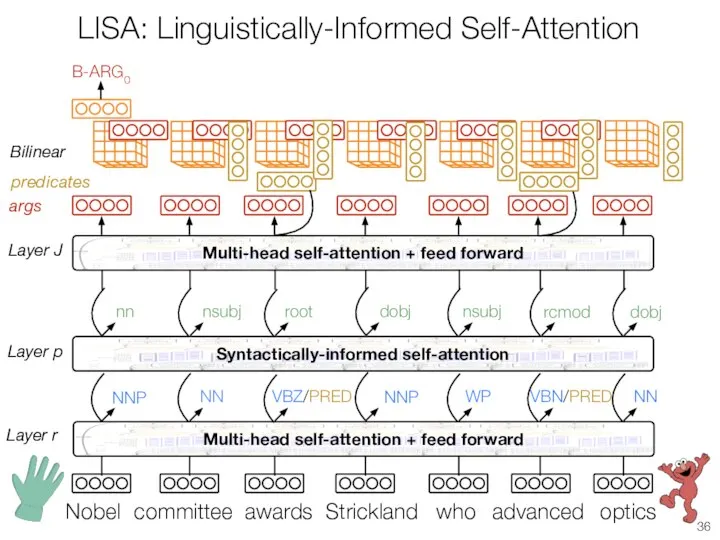

- 36. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

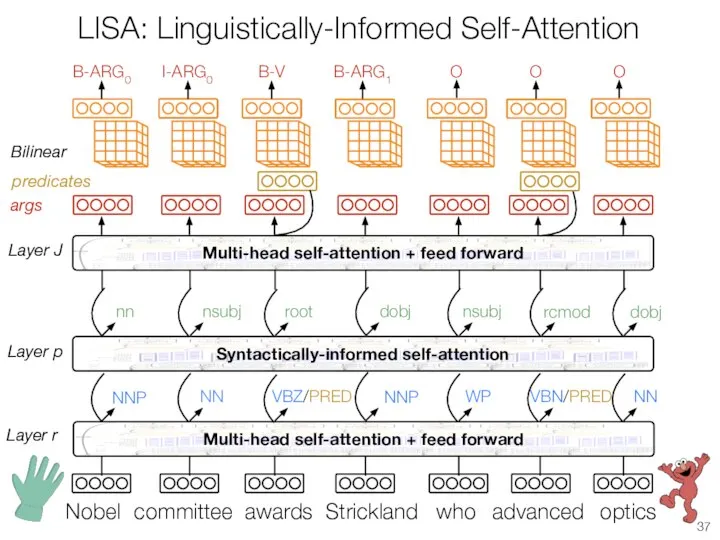

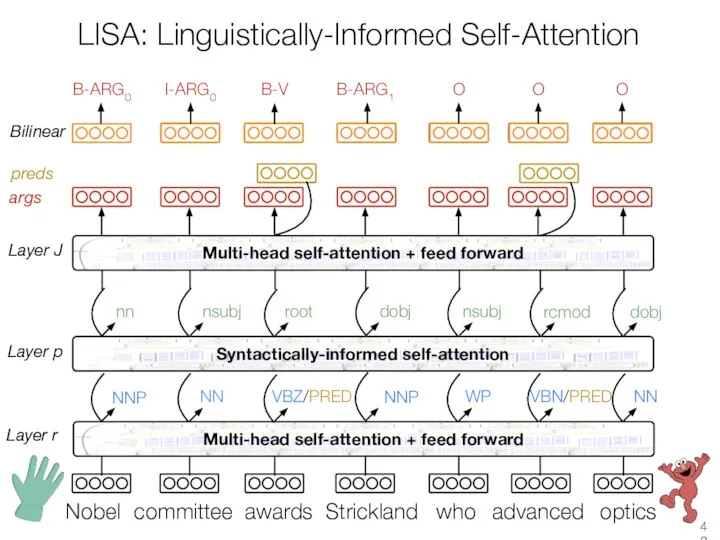

- 37. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

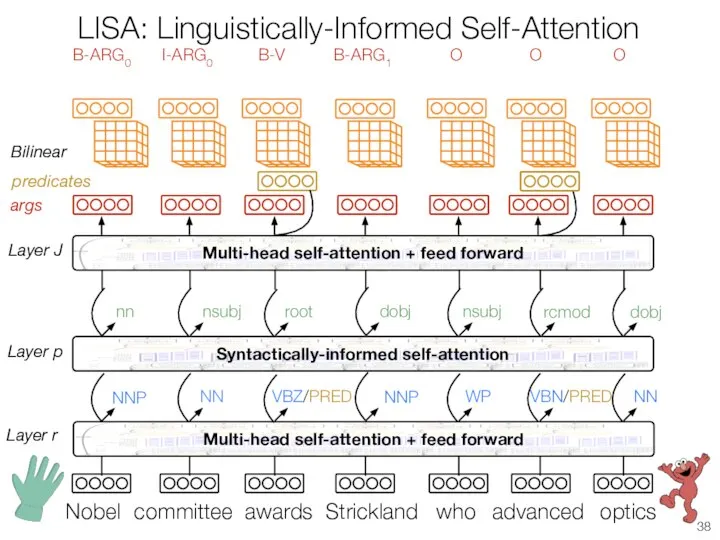

- 38. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

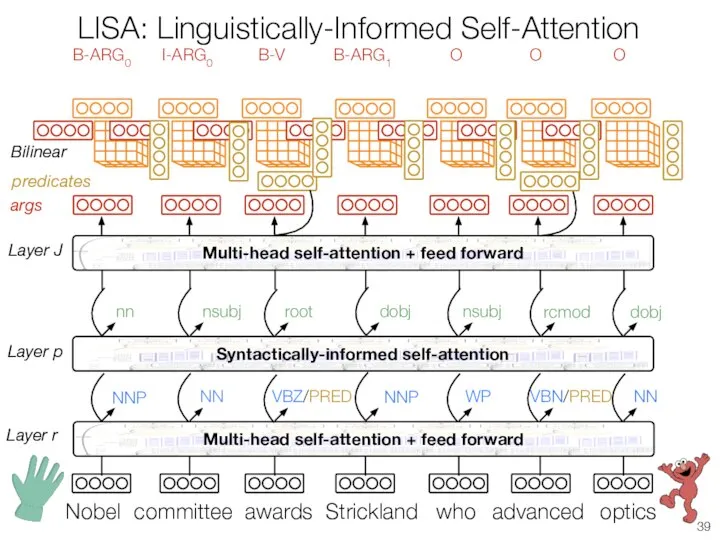

- 39. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

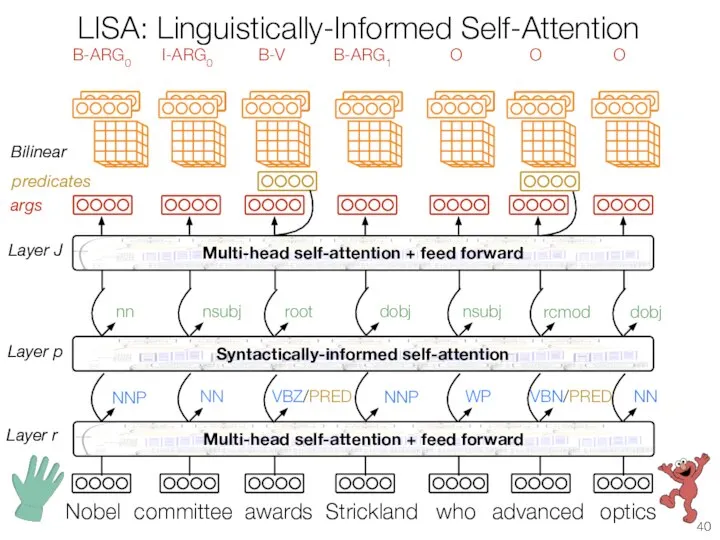

- 40. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

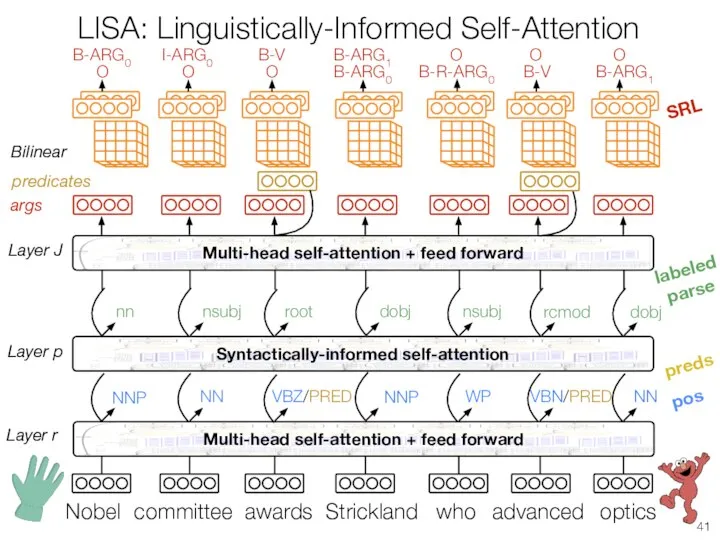

- 41. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear pos preds

- 42. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args predicates Bilinear

- 43. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args preds Bilinear

- 44. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args preds Bilinear

- 45. LISA: Linguistically-Informed Self-Attention committee awards Strickland advanced optics who Nobel B-ARG0 args preds Bilinear
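The LISA slides above show argument and predicate representations combined by a bilinear operation to produce tags such as B-ARG0. A small sketch of that scoring step is given here, assuming one bilinear matrix per label and a greedy per-token argmax. The label set, the matrices, and the vectors are all hypothetical, and a real system may decode the tag sequence differently.

```python
def bilinear_scores(pred_vec, role_vec, u):
    """One score per label: pred_vec^T U[l] role_vec."""
    return [
        sum(
            pred_vec[i] * u_l[i][j] * role_vec[j]
            for i in range(len(pred_vec))
            for j in range(len(role_vec))
        )
        for u_l in u
    ]


def label_tokens(pred_vec, role_vecs, u, labels):
    """Greedy tagging: pick the best-scoring label for every token."""
    tags = []
    for role_vec in role_vecs:
        scores = bilinear_scores(pred_vec, role_vec, u)
        tags.append(labels[max(range(len(scores)), key=scores.__getitem__)])
    return tags


# Hypothetical label set and per-label bilinear matrices.
labels = ["O", "B-ARG0", "B-ARG1"]
u = [
    [[0.0, 0.0], [0.0, 0.0]],  # "O" always scores 0
    [[1.0, 0.0], [0.0, 0.0]],  # "B-ARG0" scores pred[0] * role[0]
    [[0.0, 1.0], [0.0, 0.0]],  # "B-ARG1" scores pred[0] * role[1]
]
pred_vec = [1.0, 0.0]  # made-up representation of one predicate
role_vecs = [[2.0, 0.0], [-1.0, -1.0], [0.0, 3.0]]  # made-up token representations

tags = label_tokens(pred_vec, role_vecs, u, labels)
```

Scoring every token against every predicate this way yields one tag sequence per predicate, which is the "who did what to whom" output that SRL asks for.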

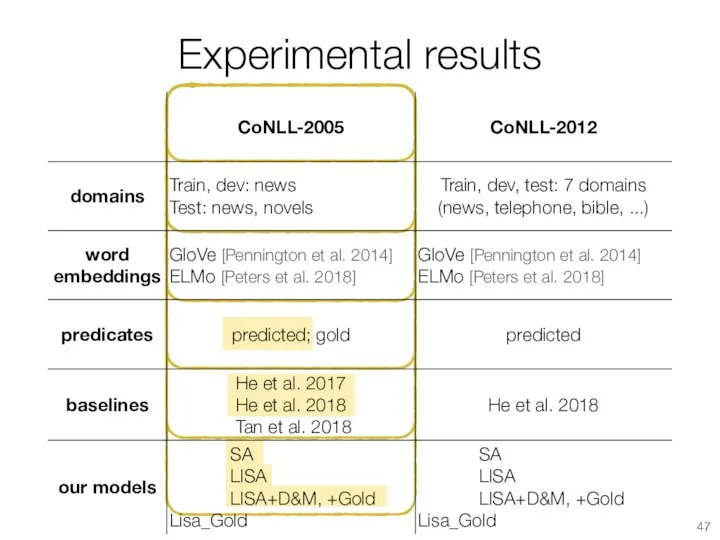

- 47. Experimental results

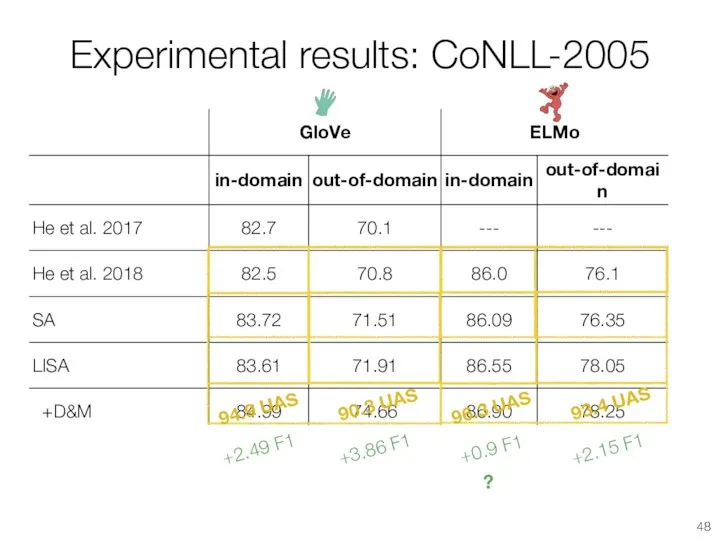

- 48. Experimental results: CoNLL-2005. Annotated gains: +2.49 F1, +3.86 F1, +0.9 F1, +2.15 F1. Parser accuracy: 94.9 UAS, 96.3 UAS.

- 49. Experimental results: CoNLL-2005. Annotated gains: +3.23 F1, +2.46 F1. Parser accuracy: 96.5 UAS!

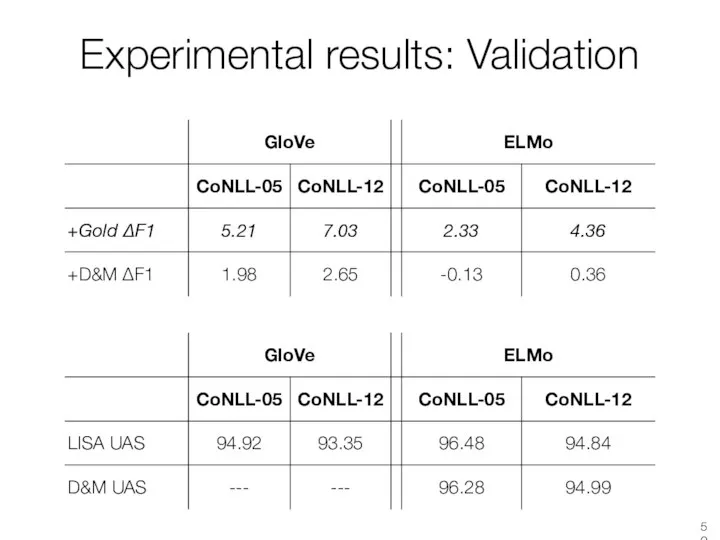

- 50. Experimental results: Validation

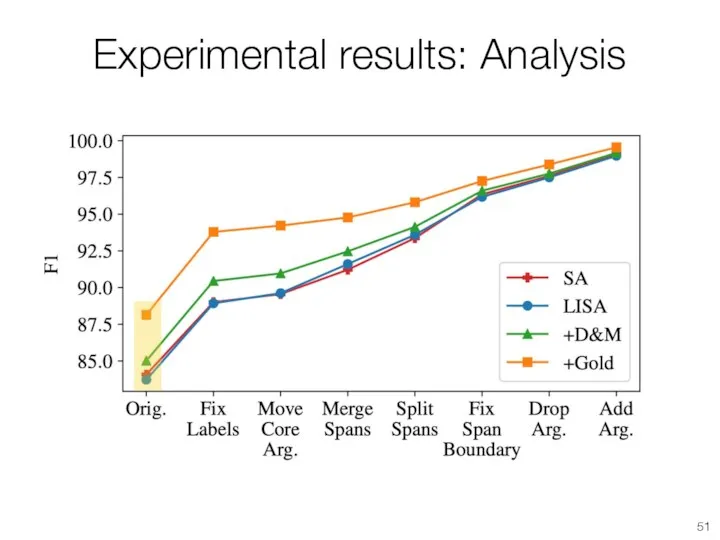

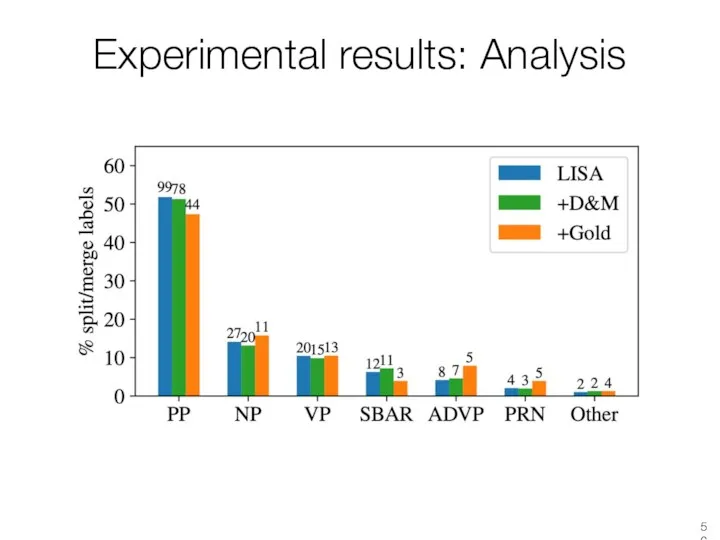

- 51. Experimental results: Analysis

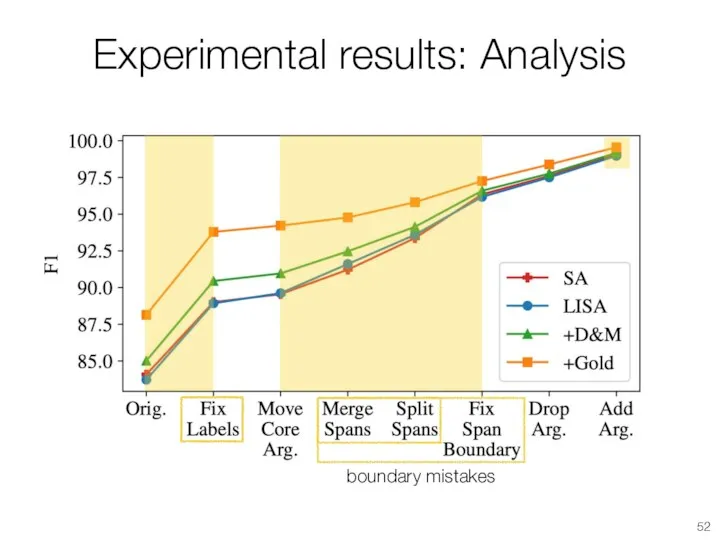

- 52. Experimental results: Analysis boundary mistakes

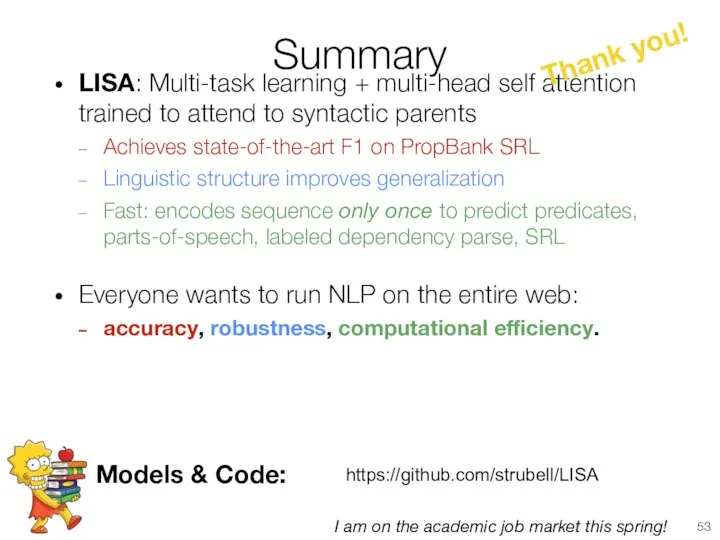

- 53. Summary. LISA: multi-task learning + multi-head self-attention trained to attend to syntactic parents. Achieves state-of-the-art

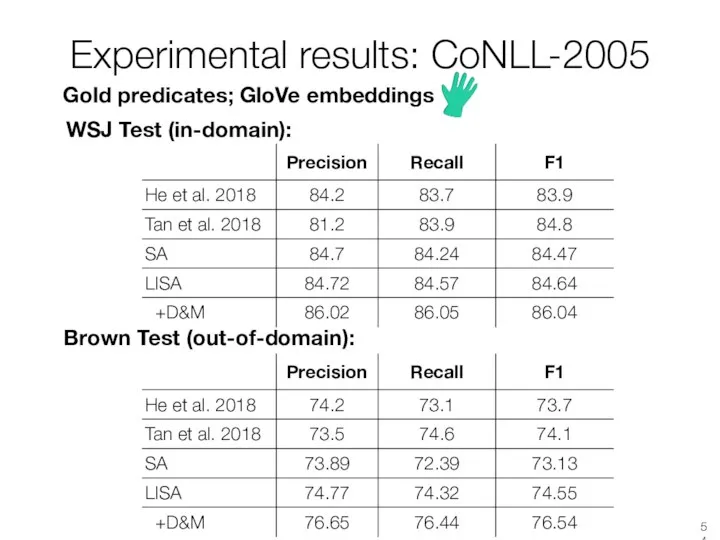

- 54. Experimental results: CoNLL-2005, gold predicates, GloVe embeddings. Panels: WSJ Test (in-domain), Brown Test (out-of-domain).

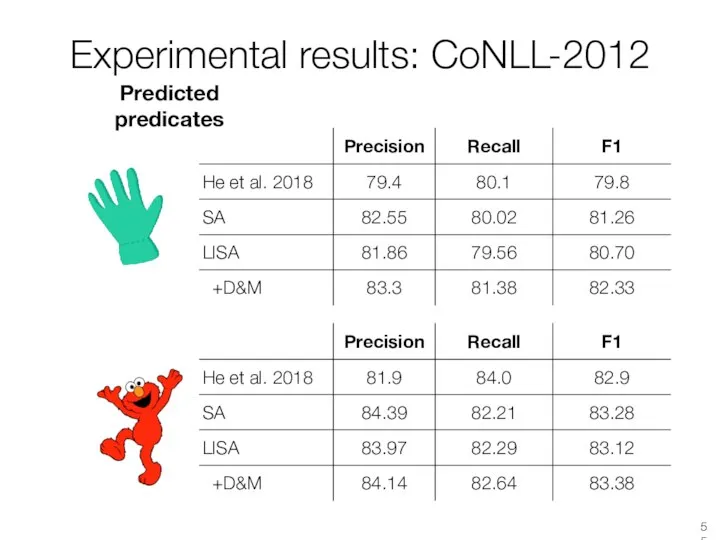

- 55. Experimental results: CoNLL-2012, predicted predicates

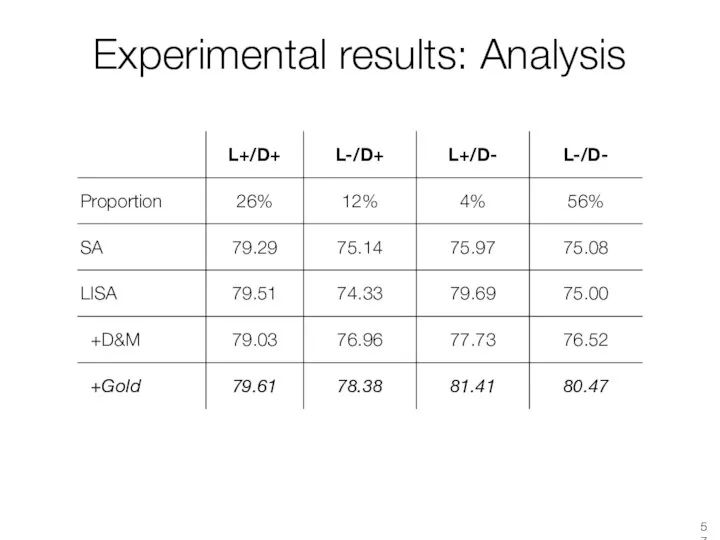

- 56. Experimental results: Analysis

- 57. Experimental results: Analysis



Английский алфавит

Английский алфавит Grammar. Unit 5

Grammar. Unit 5 My favourite teachers

My favourite teachers My life

My life Animals pics game

Animals pics game Конструкции I wish и if only

Конструкции I wish и if only School supplies

School supplies ЕГЭ по английскому. Секрет английских времен

ЕГЭ по английскому. Секрет английских времен Toys -игрушки

Toys -игрушки Двойной модальный комплекс: ошибка или нет?

Двойной модальный комплекс: ошибка или нет? Портрет. Учитель изобразительного искусства: Семяшкина Людмила Семёновна.

Портрет. Учитель изобразительного искусства: Семяшкина Людмила Семёновна. Find the treasure

Find the treasure Теория языка

Теория языка Present simple. Bowling

Present simple. Bowling Презентация на тему Welcome to Altai

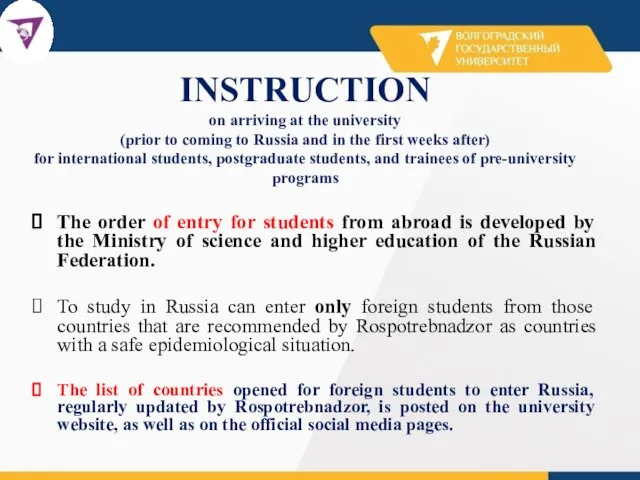

Презентация на тему Welcome to Altai  Instruction on arriving at the university

Instruction on arriving at the university Word-buildings of nouns and adjectives

Word-buildings of nouns and adjectives Symptoms of diseases of liver and bile ducts

Symptoms of diseases of liver and bile ducts Comprehension Skills

Comprehension Skills Count the sheep and click the right answer to help

Count the sheep and click the right answer to help We read with a Bun

We read with a Bun Презентация к уроку английского языка "The US Government The US Constitution" -

Презентация к уроку английского языка "The US Government The US Constitution" -  Презентация на тему What do you know about the USA?

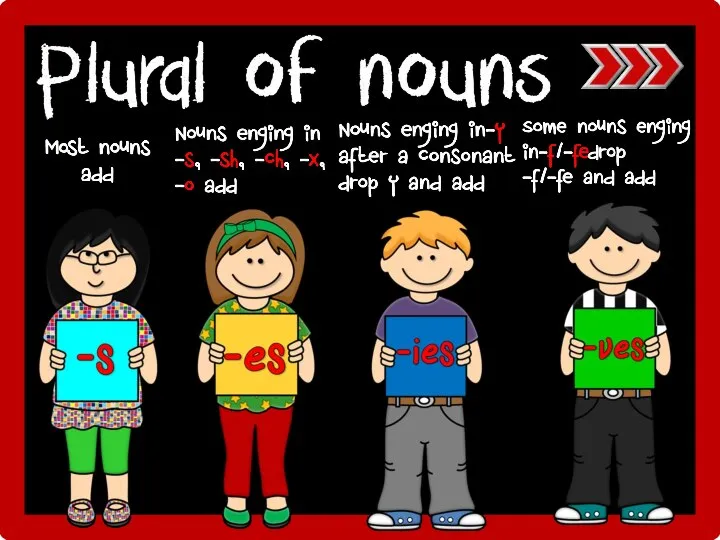

Презентация на тему What do you know about the USA?  Plural of nouns game

Plural of nouns game Hamlet

Hamlet Tell us about the food

Tell us about the food Annotation of foreign literature. Purpose and types of annotations

Annotation of foreign literature. Purpose and types of annotations Космическая игра-викторина для 2 класса по английскому языку

Космическая игра-викторина для 2 класса по английскому языку