Contents

- 2. Outline: Software Quality Assurance and Testing Fundamentals; Software Testing Types: Functional and Non-Functional; Testing Types; Black-Box …

- 3. Software Quality Assurance Fundamentals

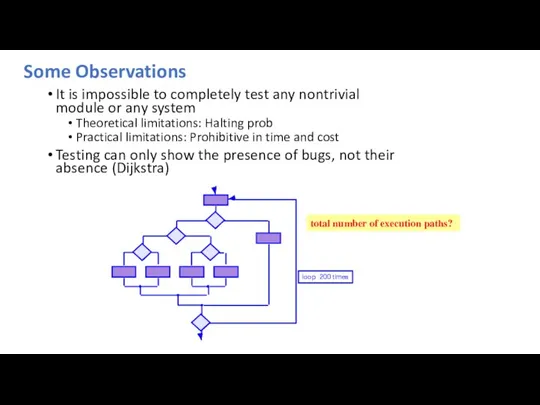

- 4. Some Observations: It is impossible to completely test any nontrivial module or any system. Theoretical limitations: …

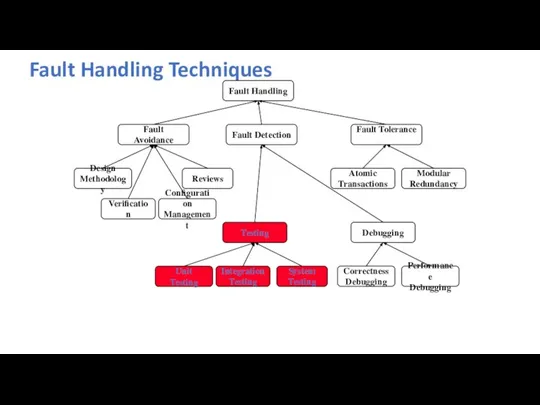

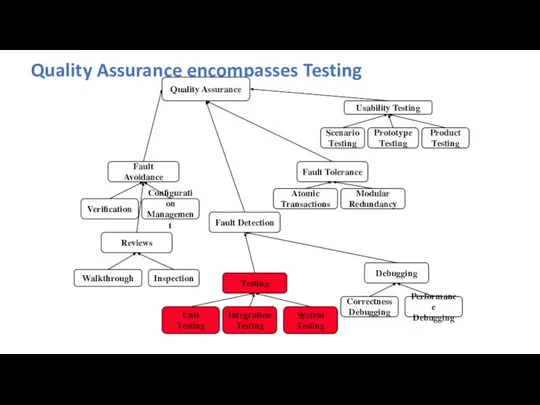
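
To make the "impossible to test completely" point concrete, the sketch below estimates how long exhaustive testing would take for a hypothetical function with two 32-bit integer arguments (64 bits of input). It assumes an optimistic rate of one billion test executions per second. Both the function and the rate are illustrative assumptions.

```python
def exhaustive_test_years(input_bits: int, tests_per_second: float) -> float:
    """Years needed to run one test for every possible input of `input_bits` bits."""
    seconds_per_year = 365.25 * 24 * 3600
    return 2 ** input_bits / tests_per_second / seconds_per_year


# Hypothetical function with two 32-bit integer arguments: 2**64 input combinations.
# Assumed throughput: 1e9 tests per second.
years = exhaustive_test_years(64, 1e9)
print(f"about {years:.0f} years")  # about 585 years
```

Adding one more 32-bit parameter multiplies that figure by 2**32, so practical testing has to sample the input space rather than enumerate it.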

- 5. Fault Handling Techniques: Fault Handling, Fault Avoidance, Fault Tolerance, Fault Detection, Testing, Debugging, Unit Testing, Integration …

- 6. Quality Assurance Encompasses Testing: Usability Testing, Prototype Testing, Scenario Testing, Product Testing, Fault …

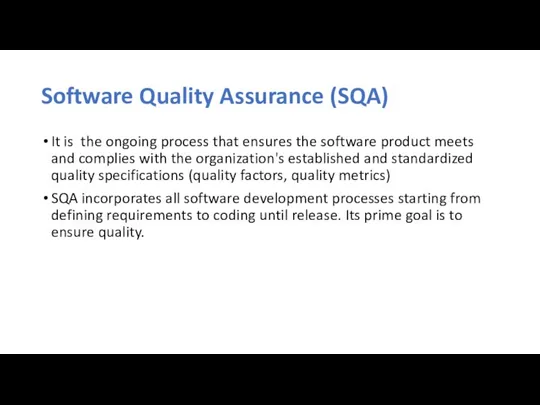

- 7. Software Quality Assurance (SQA): the ongoing process that ensures the software product meets and …

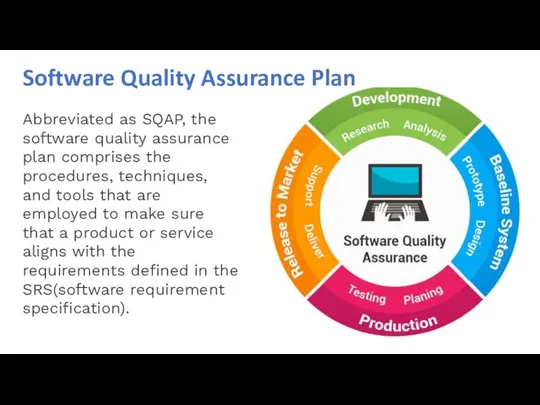

- 8. Software Quality Assurance Plan: abbreviated as SQAP, the software quality assurance plan comprises the procedures, techniques, …

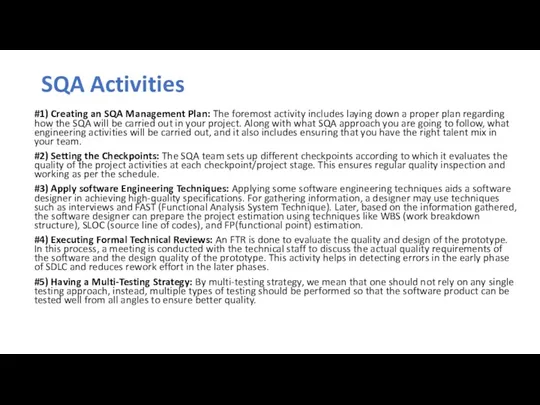

- 9. SQA Activities: #1) Creating an SQA Management Plan: the foremost activity involves laying down a proper …

- 10. SQA Activities: #6) Enforcing Process Adherence: this activity stresses the need for process adherence during …

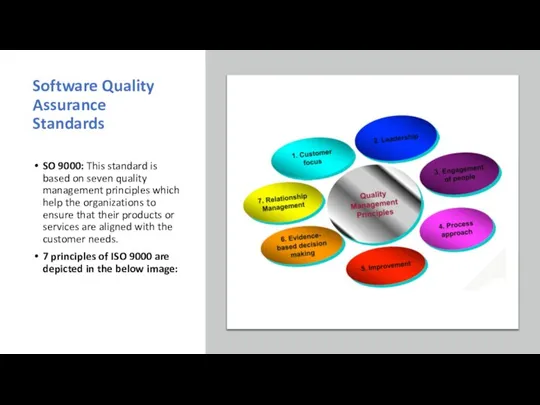

- 11. Software Quality Assurance Standards: ISO 9000: this standard is based on seven quality management principles, which …

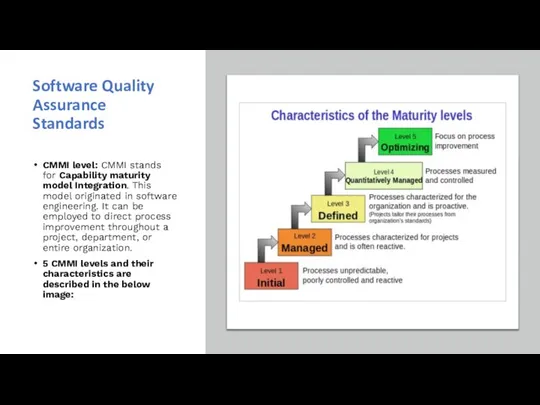

- 12. CMMI Levels: CMMI stands for Capability Maturity Model Integration. This model originated in software engineering. It …

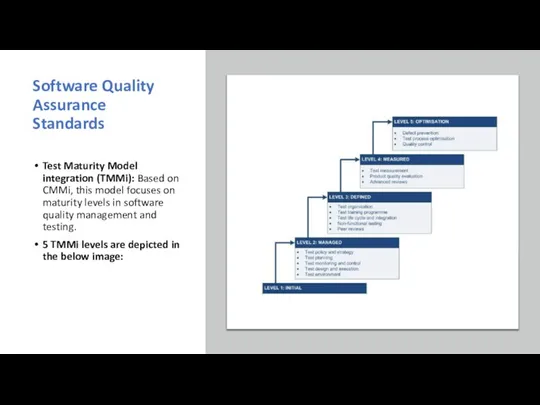

- 13. Test Maturity Model integration (TMMi): based on CMMI, this model focuses on maturity levels in software …

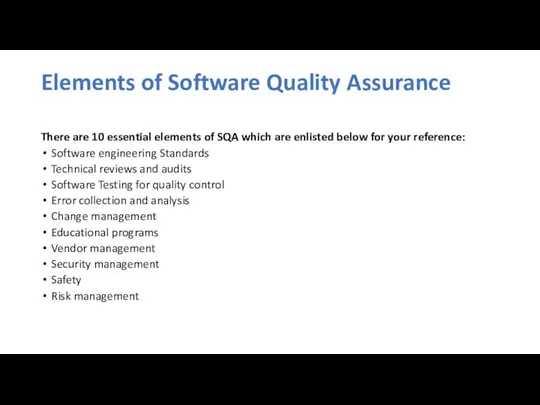

- 14. Elements of Software Quality Assurance: there are 10 essential elements of SQA, listed below …

- 15. Software Testing Types

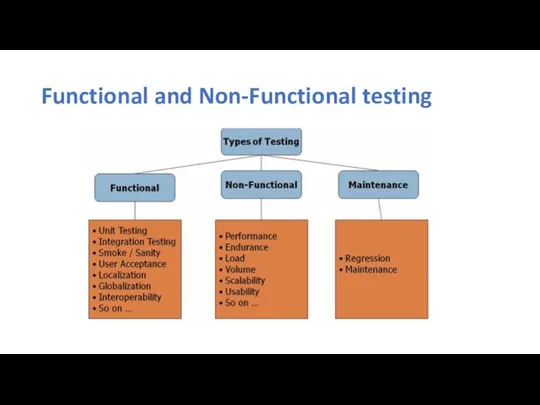

- 16. Functional and Non-Functional Testing

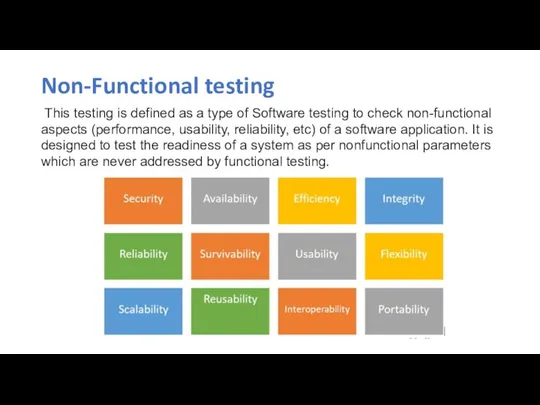

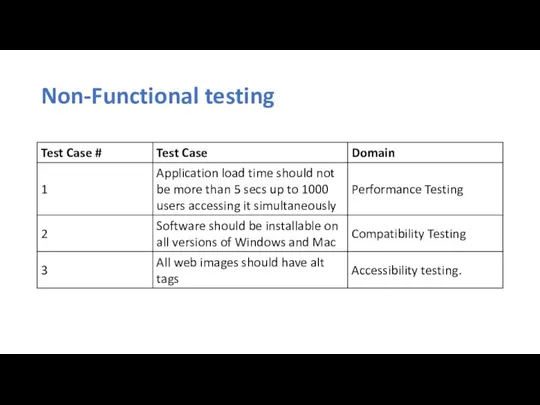

- 17. Non-Functional Testing: defined as a type of software testing that checks non-functional aspects …

- 18. Non-Functional Testing

- 19. Functional Testing: a type of software testing that validates the software system against the …

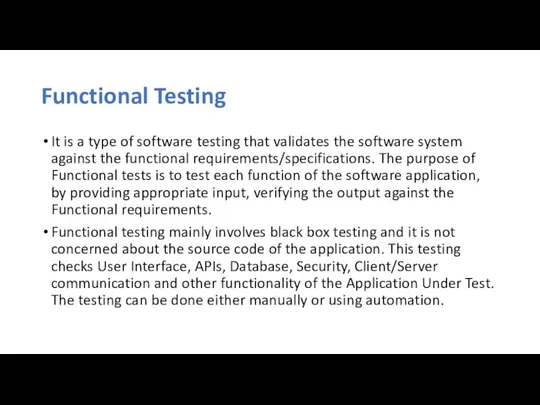

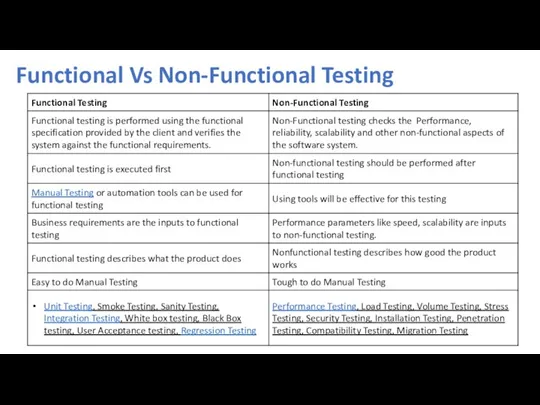

- 20. Functional vs. Non-Functional Testing

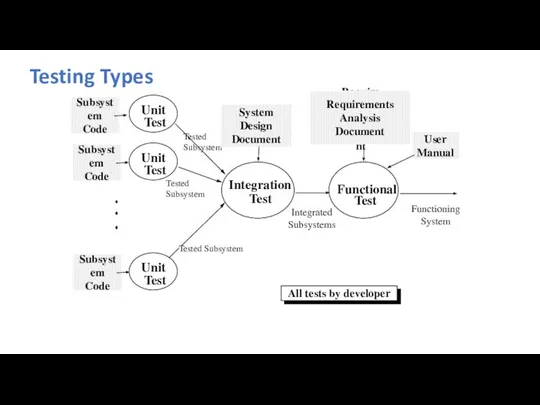
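
The distinction can be shown on one hypothetical function. A functional test checks what the function returns against its specification. A non-functional test, here a performance test, checks how well the function behaves: in this case, whether a batch of calls finishes within a time budget. The function `apply_discount`, the expected value, the call count and the 1-second budget are all illustrative assumptions.

```python
import time


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: price after a percentage discount."""
    return round(price * (1 - percent / 100), 2)


def functional_test() -> bool:
    """Functional: is the output the one the specification requires?"""
    return apply_discount(200.0, 10) == 180.0


def performance_test(calls: int = 10_000, budget_seconds: float = 1.0) -> bool:
    """Non-functional (performance): do `calls` calls finish within the budget?"""
    start = time.perf_counter()
    for _ in range(calls):
        apply_discount(200.0, 10)
    elapsed = time.perf_counter() - start
    return elapsed < budget_seconds


print("functional:", functional_test())
print("performance:", performance_test())
```

The two tests can fail independently. A correct but slow function passes the first and fails the second, and a fast but wrong one does the opposite.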

- 21. Testing Types (diagram labels): Subsystem Code, Unit, Tested Subsystem, Integration, Functional, Requirements Analysis Document, System Design …

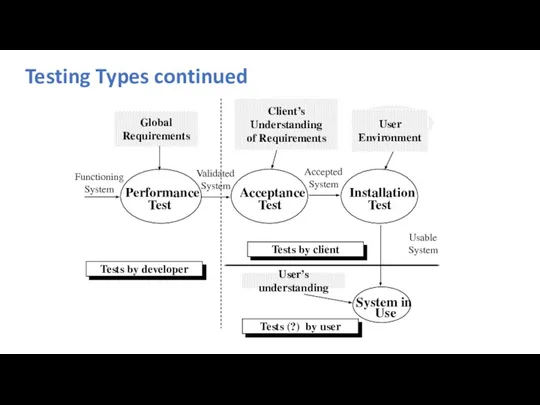

- 22. Testing Types (continued), diagram labels: Global Requirements, User’s Understanding, Tests by Developer, Performance, Acceptance, Client’s Understanding of Requirements …

- 23. Levels of Testing in the V Model: system requirements, system integration, software requirements, preliminary design, detailed design …

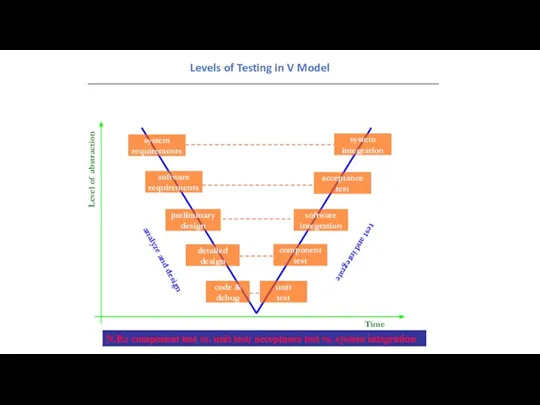

- 24. Types of Testing: Unit Testing. Scope: individual subsystem. Carried out by: developers. Goal: confirm that the subsystem is …

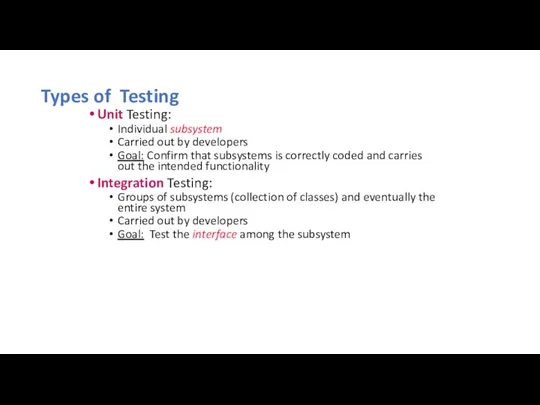
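
A unit test exercises one unit in isolation against its expected behavior. The sketch below uses Python's standard `unittest` module. The unit under test, a leap-year check, is a hypothetical stand-in for a developer's own subsystem.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Hypothetical unit under test: the Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class IsLeapYearTest(unittest.TestCase):
    def test_divisible_by_four_is_leap(self) -> None:
        self.assertTrue(is_leap_year(2024))

    def test_century_is_not_leap(self) -> None:
        self.assertFalse(is_leap_year(1900))

    def test_divisible_by_400_is_leap(self) -> None:
        self.assertTrue(is_leap_year(2000))

    def test_ordinary_year_is_not_leap(self) -> None:
        self.assertFalse(is_leap_year(2023))


if __name__ == "__main__":
    # exit=False so the script keeps running after the test report is printed
    unittest.main(argv=["unit-tests"], exit=False)
```

Each test method checks one rule of the specification. A failure therefore points directly at the case the unit gets wrong.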

- 25. Types of Testing: System Testing. Scope: the entire system. Carried out by: developers. Goal: determine whether the …

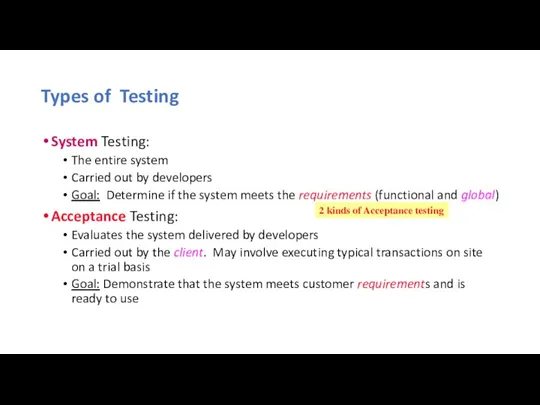

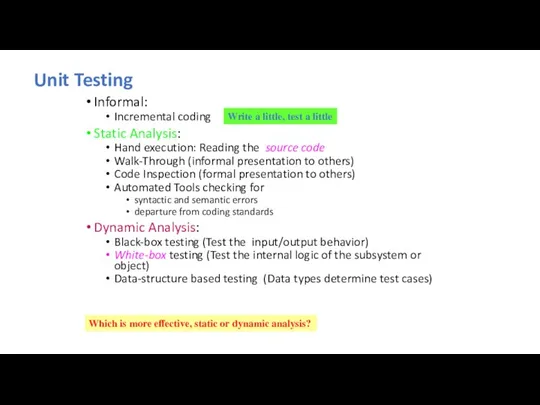

- 26. Unit Testing: Informal: incremental coding. Static analysis: hand execution (reading the source code); walk-through (informal presentation …

- 27. Black-Box vs. White-Box Testing

- 28. Black-box Testing: Focus: I/O behavior. If, for any given input, we can predict the output, then …

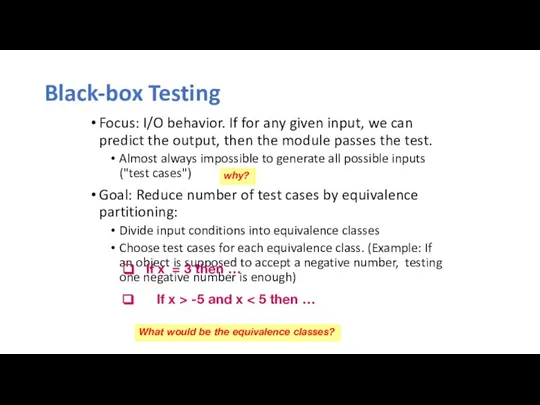

- 29. Black-box Testing (continued): Selection of equivalence classes (no rules, only guidelines): input is valid across a range …

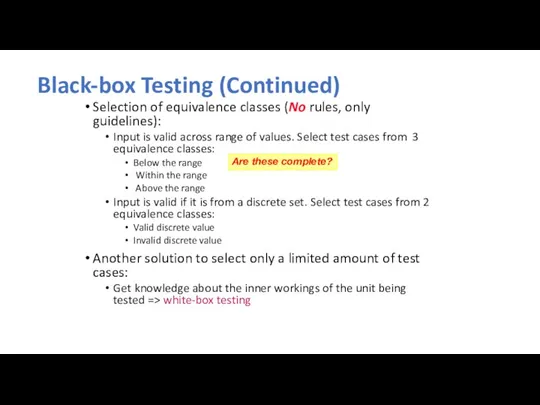
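
A common way to apply the "input is valid across a range" guideline is to pick one representative from each of three classes: below the range, inside it, and above it. In the sketch below, `is_valid_month` is a hypothetical unit under test, and its valid range of 1 to 12 is an assumption.

```python
def equivalence_class_representatives(low: int, high: int) -> dict[str, int]:
    """For an input valid across [low, high], pick one value from each class:
    below the range, inside the range, and above the range."""
    return {
        "below": low - 1,
        "valid": (low + high) // 2,
        "above": high + 1,
    }


def is_valid_month(month: int) -> bool:
    """Hypothetical unit under test: months are valid in 1..12."""
    return 1 <= month <= 12


cases = equivalence_class_representatives(1, 12)
print(cases)  # {'below': 0, 'valid': 6, 'above': 13}
for name, value in cases.items():
    expected = name == "valid"  # only the in-range class should be accepted
    assert is_valid_month(value) == expected, (name, value)
```

One value per class is the minimum. Boundary values such as 1 and 12 are often added as well.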

- 30. White-box Testing: Focus: thoroughness (coverage). Every statement in the component is executed at least once. Four …

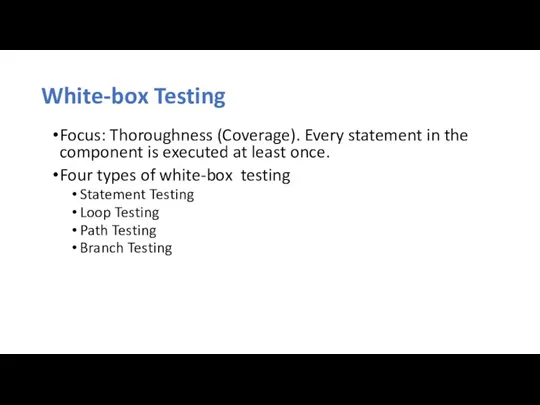

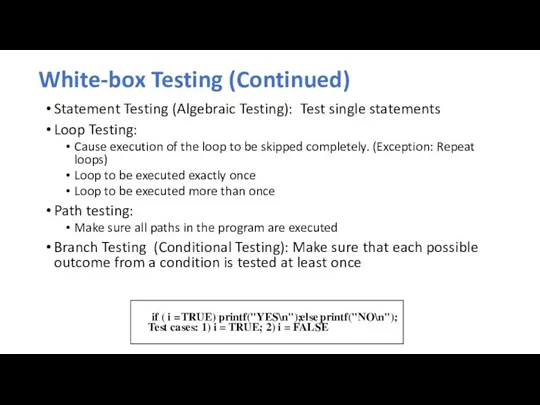
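
Statement coverage can be measured by recording which lines of a component actually run during the tests. The sketch below does this with Python's `sys.settrace` hook for a hypothetical two-branch function `classify`. A single test with a positive input leaves the `return "negative"` statement unexecuted, and adding a negative input covers it.

```python
import sys
from types import FrameType
from typing import Any, Callable, Optional


def classify(x: int) -> str:
    """Hypothetical component under test with two branches."""
    if x < 0:
        return "negative"
    return "non-negative"


def executed_lines(func: Callable[..., Any], *args: Any) -> set[int]:
    """Run func(*args) and return the line numbers executed inside func."""
    target = func.__code__
    lines: set[int] = set()

    def tracer(frame: FrameType, event: str, arg: Any) -> Optional[Callable[..., Any]]:
        if frame.f_code is not target:
            return None              # ignore frames of other functions
        if event == "line":
            lines.add(frame.f_lineno)
        return tracer                # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return lines


only_positive = executed_lines(classify, 5)
both_inputs = only_positive | executed_lines(classify, -1)
print(len(only_positive), "lines covered by {5};", len(both_inputs), "by {5, -1}")
```

Dedicated tools such as coverage.py do the same bookkeeping more robustly. The hand-rolled tracer only illustrates the idea.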

- 31. White-box Testing (continued): Statement Testing (Algebraic Testing): test single statements. Loop Testing: cause execution of the …

- 32. White-box Testing: Loop Testing: simple loops, nested loops, concatenated loops, unstructured loops [Pressman]

- 33. White-box Testing Example: `FindMean(float Mean, FILE ScoreFile) { SumOfScores = …`, with the comment `/*Read in and sum the scores*/` …

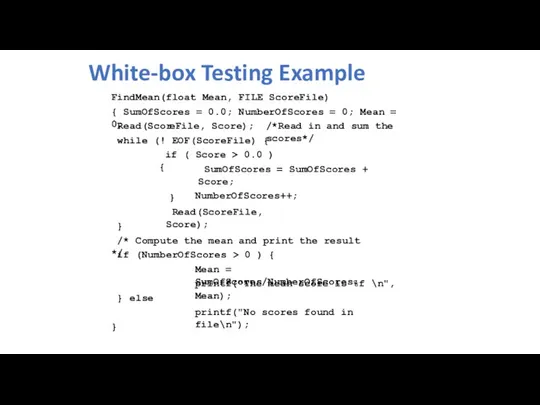
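
The simple-loop guidelines usually attributed to Pressman (the source cited on slide 32) suggest driving a loop that allows at most n passes through 0, 1, 2, m (with m < n), n - 1, n and n + 1 passes. The helper below generates those counts. The default m = n // 2 is an assumption. `sum_first` is a hypothetical loop, capped at `limit` iterations, used to show the counts in action.

```python
from typing import Optional


def simple_loop_test_counts(n: int, m: Optional[int] = None) -> list[int]:
    """Pass counts to try for a simple loop allowing at most n passes:
    skip the loop, 1, 2, m (< n), n - 1, n, and n + 1 (an attempt to exceed n)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if m is None:
        m = n // 2                      # assumed 'typical' value with m < n
    counts: list[int] = []
    for c in [0, 1, 2, m, n - 1, n, n + 1]:
        if c not in counts:             # drop duplicates, keep order
            counts.append(c)
    return counts


def sum_first(values: list[int], limit: int) -> int:
    """Hypothetical loop under test: sums at most `limit` leading values."""
    total = 0
    for i, value in enumerate(values):
        if i == limit:
            break
        total += value
    return total


N = 10
print(simple_loop_test_counts(N))  # [0, 1, 2, 5, 9, 10, 11]
for passes in simple_loop_test_counts(N):
    data = list(range(1, passes + 1))   # input that asks for `passes` iterations
    assert sum_first(data, N) == sum(data[:N]), passes
```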

- 34. White-box Testing Example: Determining the Paths: `FindMean (FILE ScoreFile) { float SumOfScores = 0.0; int NumberOfScores …`

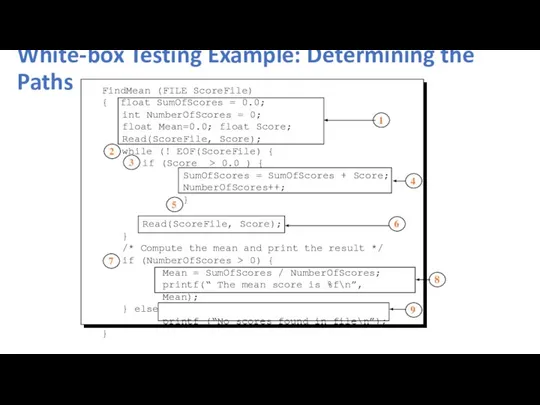

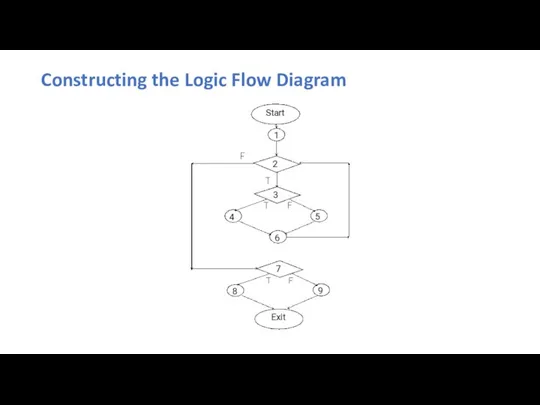
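
The slide's C fragment is truncated, so the sketch below is only a Python analogue of `FindMean` under stated assumptions. An iterable of floats stands in for `ScoreFile`, and returning `None` stands in for reporting that no scores were found. As in the commonly taught version of this example, only positive scores are counted; that condition is an assumption, because it is not visible here. With three decisions, the sketch has a cyclomatic complexity of 4, and the four test cases exercise both outcomes of every decision.

```python
from typing import Iterable, Optional


def find_mean(scores: Iterable[float]) -> Optional[float]:
    """Python analogue of FindMean (assumptions: iterable replaces ScoreFile,
    None replaces printing 'no scores', only positive scores are counted)."""
    sum_of_scores = 0.0
    number_of_scores = 0
    for score in scores:              # decision 1: more scores to read?
        if score > 0.0:               # decision 2 (assumed): count positive scores only
            sum_of_scores += score
            number_of_scores += 1
    if number_of_scores > 0:          # decision 3: were any scores counted?
        return sum_of_scores / number_of_scores
    return None


# Test inputs chosen so that every decision is taken both ways.
path_tests = [
    ([], None),            # loop body never runs; "no scores" branch
    ([-1.0], None),        # loop runs, score rejected; "no scores" branch
    ([2.0, 4.0], 3.0),     # scores accepted; mean computed
    ([3.0, -1.0], 3.0),    # one accepted, one rejected; mean computed
]
for scores, expected in path_tests:
    assert find_mean(scores) == expected, scores
```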

- 35. Constructing the Logic Flow Diagram

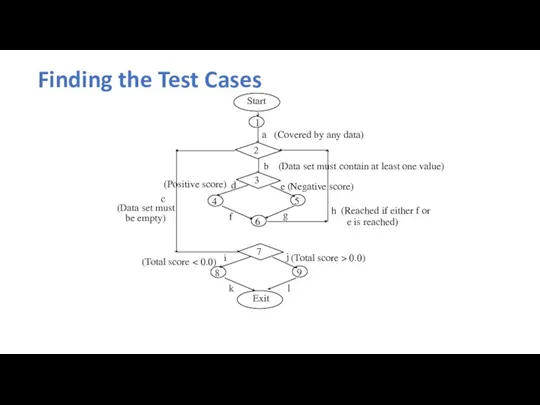
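
Once a logic flow diagram is drawn, McCabe's cyclomatic complexity V(G) = E - N + 2P gives the number of linearly independent paths to test. Here E is the number of edges, N the number of nodes, and P the number of connected components. The sketch below encodes a flow graph for a FindMean-style routine. The node numbering in the comments is an assumption and need not match the numbering on the slide's diagram. The sketch also checks V(G) against the equivalent count "decision nodes + 1".

```python
# Assumed flow graph of a FindMean-style routine (hypothetical numbering):
#   1 initialise sums, read first score   2 end of file?        3 score > 0?
#   4 add score and count it              5 read next score     6 any scores counted?
#   7 compute and print mean              8 print "no scores"   9 exit
EDGES: list[tuple[int, int]] = [
    (1, 2), (2, 3), (2, 6), (3, 4), (3, 5), (4, 5), (5, 2),
    (6, 7), (6, 8), (7, 9), (8, 9),
]


def cyclomatic_complexity(edges: list[tuple[int, int]], components: int = 1) -> int:
    """McCabe's V(G) = E - N + 2P for a flow graph given as an edge list."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


def decision_count(edges: list[tuple[int, int]]) -> int:
    """Number of nodes with more than one outgoing edge (decision nodes)."""
    out_degree: dict[int, int] = {}
    for source, _ in edges:
        out_degree[source] = out_degree.get(source, 0) + 1
    return sum(1 for degree in out_degree.values() if degree > 1)


print(cyclomatic_complexity(EDGES))  # 4
print(decision_count(EDGES) + 1)     # 4
```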

- 36. Finding the Test Cases: flow-graph labels Start, 1–9, Exit and b …

- 37. Comparison of White- and Black-box Testing: White-box testing: a potentially infinite number of paths have to be …

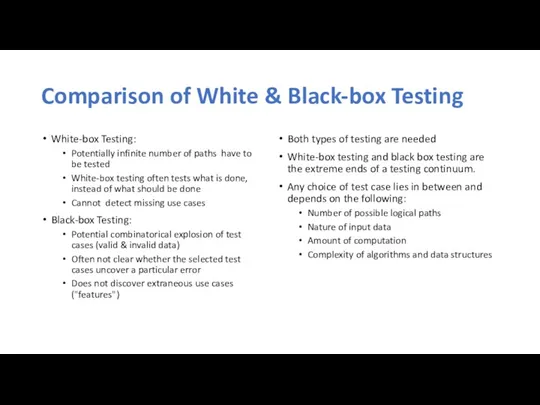

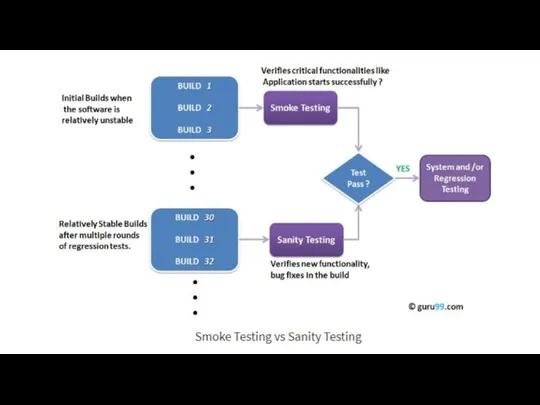
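
The "potentially infinite number of paths" problem already bites for small, finite loops. Consider a loop that may run from 0 to 20 times and contains a single if/else in its body. Each extra iteration doubles the number of distinct paths, giving 2^0 + 2^1 + ... + 2^20 paths in total. The loop bound of 20 is an illustrative assumption.

```python
def count_paths(max_iterations: int, branches_per_iteration: int = 2) -> int:
    """Distinct execution paths through a loop that runs 0..max_iterations times
    and takes one of `branches_per_iteration` branches on each pass."""
    return sum(branches_per_iteration ** k for k in range(max_iterations + 1))


print(count_paths(20))  # 2097151, i.e. 2**21 - 1
```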

- 38. Sanity vs. Smoke Testing

- 40. What Is a Software Build? If you are developing a simple computer program that consists of …

- 41. Smoke Testing: a software testing technique performed after a software build to verify that …

- 42. Sanity Testing: a kind of software testing performed after receiving a software build, …

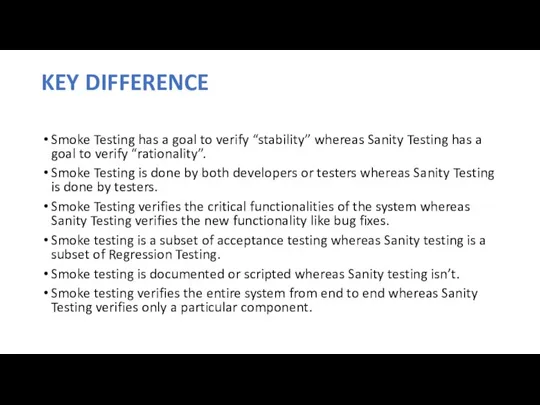

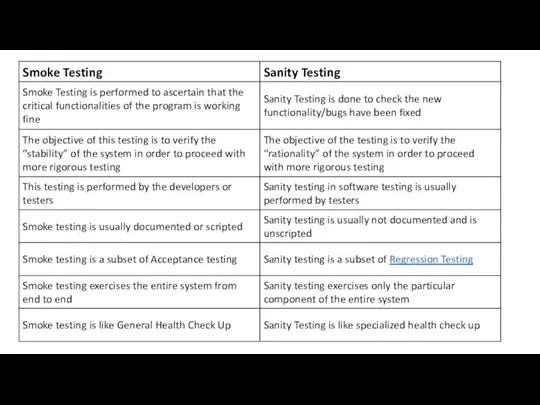

- 43. Key Difference: smoke testing aims to verify “stability”, whereas sanity testing aims …

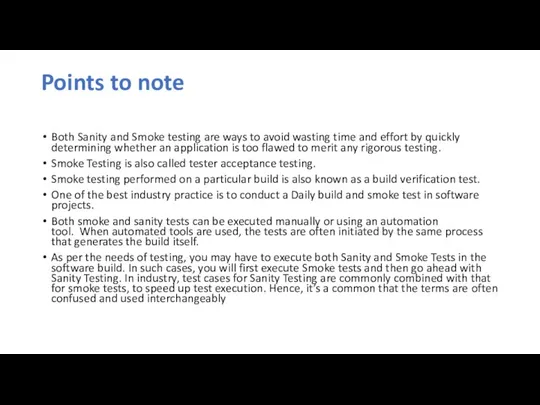
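
The contrast can be sketched with two small check suites run against a hypothetical build. The smoke suite is broad and shallow: it touches each critical feature once to decide whether the build is stable enough to test at all. The sanity suite is narrow and goes deeper into one area assumed to have changed in this build (search). All functions, checks and the choice of changed area are illustrative assumptions.

```python
from typing import Callable


# Hypothetical application functions in a new build.
def login(user: str, password: str) -> bool:
    return bool(user) and password == "secret"


def search(items: list[str], term: str) -> list[str]:
    return [item for item in items if term.lower() in item.lower()]


def checkout_total(prices: list[float]) -> float:
    return round(sum(prices), 2)


def run_suite(name: str, checks: dict[str, Callable[[], bool]]) -> bool:
    """Run checks in order; stop at the first failure and reject the build."""
    for check_name, check in checks.items():
        if not check():
            print(f"{name}: '{check_name}' failed, rejecting build")
            return False
    print(f"{name}: all {len(checks)} checks passed")
    return True


# Smoke suite: broad and shallow, one quick check per critical feature.
smoke_checks: dict[str, Callable[[], bool]] = {
    "login works": lambda: login("alice", "secret"),
    "search finds an item": lambda: search(["Apple", "Banana"], "app") == ["Apple"],
    "checkout totals prices": lambda: checkout_total([1.10, 2.20]) == 3.3,
}

# Sanity suite: narrow and deeper, focused on the area assumed to have changed.
sanity_checks: dict[str, Callable[[], bool]] = {
    "match ignores case": lambda: search(["apple"], "APP") == ["apple"],
    "no match gives empty list": lambda: search(["apple"], "kiwi") == [],
    "empty term matches all": lambda: search(["a", "b"], "") == ["a", "b"],
}

build_ok = run_suite("smoke", smoke_checks) and run_suite("sanity", sanity_checks)
```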

- 45. Points to Note: both sanity and smoke testing are ways to avoid wasting time and effort …

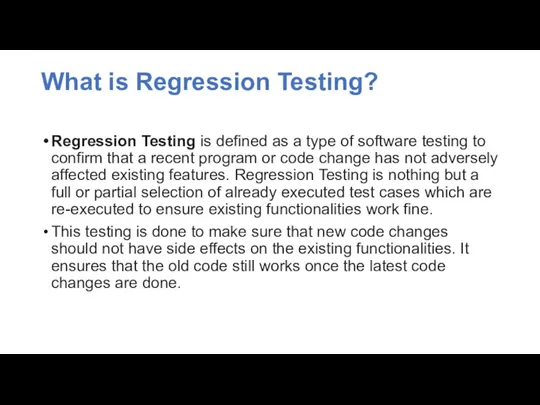

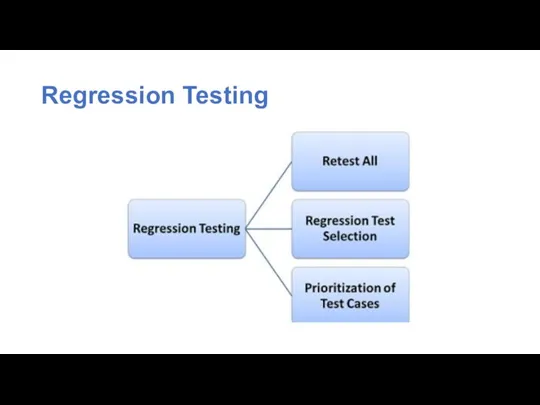

- 46. What Is Regression Testing? Regression testing is defined as a type of software testing that confirms …

- 47. Regression Testing

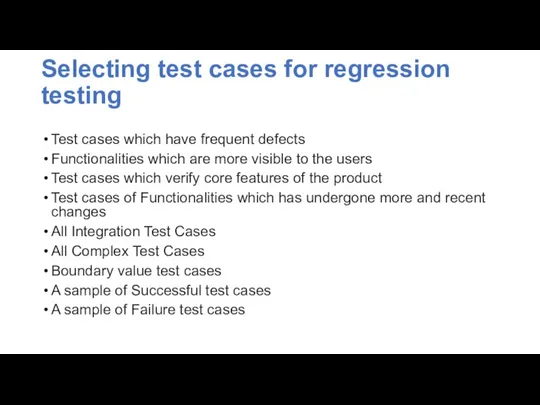
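
A common way to automate regression testing is to re-run previously passing cases after each change and compare the results with a recorded baseline. In the sketch below, the baseline dictionary and `format_name` are hypothetical. Any mismatch is reported as a possible regression.

```python
from typing import Callable


def format_name(first: str, last: str) -> str:
    """Current (changed) version of a hypothetical function."""
    return f"{last.title()}, {first.title()}"


# Outputs recorded from the previous, accepted version (hypothetical baseline).
BASELINE: dict[tuple[str, tuple[str, ...]], str] = {
    ("format_name", ("ada", "lovelace")): "Lovelace, Ada",
    ("format_name", ("alan", "turing")): "Turing, Alan",
}

FUNCTIONS: dict[str, Callable[..., str]] = {"format_name": format_name}


def regression_failures(
    baseline: dict[tuple[str, tuple[str, ...]], str],
) -> list[tuple[str, tuple[str, ...], str, str]]:
    """Re-run every baseline case; return (function, args, expected, actual) mismatches."""
    failures = []
    for (func_name, args), expected in baseline.items():
        actual = FUNCTIONS[func_name](*args)
        if actual != expected:
            failures.append((func_name, args, expected, actual))
    return failures


print(regression_failures(BASELINE))  # [] means no regression detected
```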

- 48. Selecting Test Cases for Regression Testing: test cases that have frequent defects; functionalities that are more …

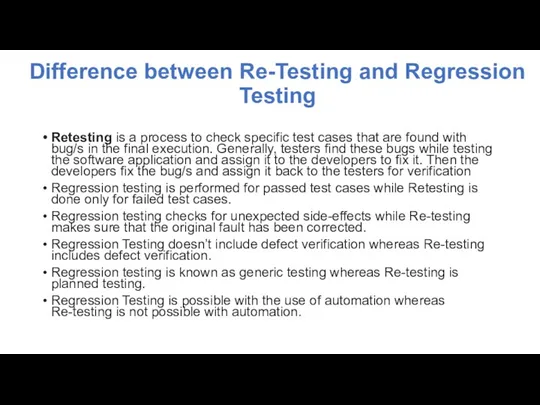

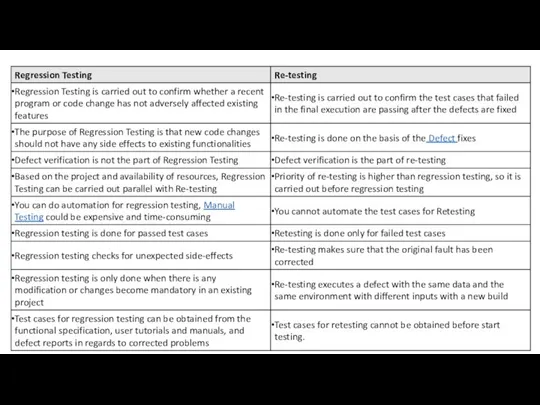
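
When the full suite is too large to re-run, the first criterion on the slide, test cases with frequent defects, can be applied mechanically. The idea is to rank tests by how many defects they have caught before. The sketch below does only that. The defect counts and the budget of two tests are hypothetical, and the slide's other criteria are not modeled.

```python
from dataclasses import dataclass


@dataclass
class RegressionCandidate:
    name: str
    defects_found: int  # hypothetical: defects this test has caught historically


def select_by_defect_history(cases: list[RegressionCandidate], budget: int) -> list[str]:
    """Pick up to `budget` test cases, most defect-prone first."""
    ranked = sorted(cases, key=lambda c: c.defects_found, reverse=True)
    return [c.name for c in ranked[:budget]]


history = [
    RegressionCandidate("test_login", 1),
    RegressionCandidate("test_payment", 7),
    RegressionCandidate("test_search", 3),
    RegressionCandidate("test_profile", 0),
]
print(select_by_defect_history(history, budget=2))  # ['test_payment', 'test_search']
```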

- 49. Difference between Retesting and Regression Testing: retesting is a process to check specific test cases that …

- 51. Bug Definition: a bug is the consequence/outcome of a coding fault. A defect in software testing …

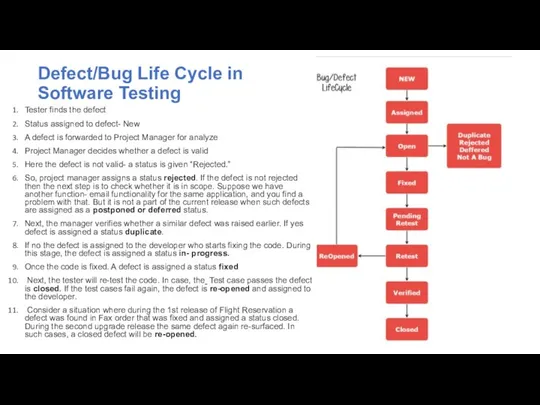

- 52. The tester finds the defect. Status assigned to the defect: New. The defect is forwarded to the project manager …

- 53. Test Documentation: a Bug Report. Defect_ID: unique identification number for the defect. Defect Description: …

- 54. Test Planning: a test plan covers all types and phases of testing and guides the entire testing …

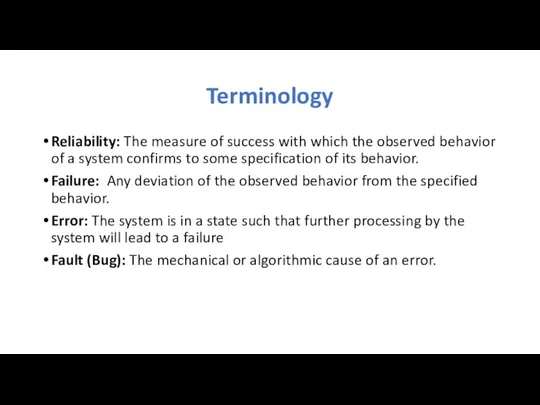
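
The bug-report fields listed on slide 53 map naturally onto a small record type. The sketch below models only what is visible in this outline: the Defect_ID, the description, and the initial status New assigned when the tester logs the defect (slide 52). The remaining report fields are truncated here and deliberately left out. The example ID, description and recipient are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    defect_id: str                 # Defect_ID: unique identification number
    description: str               # Defect Description
    status: str = "New"            # status assigned when the tester logs the defect
    forwarded_to: list[str] = field(default_factory=list)

    def forward(self, recipient: str) -> None:
        """Record that the report was passed on, e.g. to the project manager."""
        self.forwarded_to.append(recipient)


report = BugReport(defect_id="BUG-101", description="Order total ignores discount code")
report.forward("Project Manager")
print(report.status, report.forwarded_to)  # New ['Project Manager']
```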

- 55. Terminology: Reliability: the measure of success with which the observed behavior of a system conforms to …

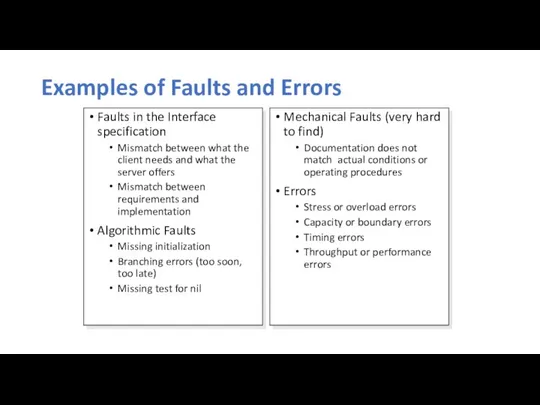

- 56. Examples of Faults and Errors: Faults in the interface specification: mismatch between what the client needs …

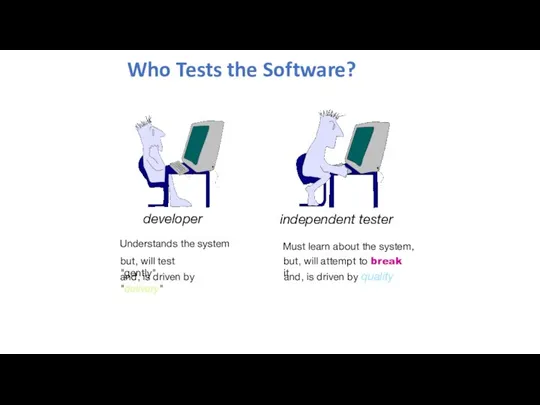

- 57. Who Tests the Software? Developer vs. independent tester. The developer understands the system but will test "gently" and is …

- 59. Скачать презентацию

![White-Box Testing: Loop Testing Nested Loops Concatenated Loops Unstructured Loops Simple loop [Pressman]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/936664/slide-31.jpg)

Microsoft Office. Краткая характеристика изученных программ

Microsoft Office. Краткая характеристика изученных программ АСКЛ-Энерго

АСКЛ-Энерго Паскаль и информатика

Паскаль и информатика Автоматизированное рабочее место бухгалтера в коммерческой организации

Автоматизированное рабочее место бухгалтера в коммерческой организации Презентация на тему Рекомендации по проведению ГИА по информатике и ИКТ

Презентация на тему Рекомендации по проведению ГИА по информатике и ИКТ  Поиск профессионально значимой информации в сети интернет

Поиск профессионально значимой информации в сети интернет Информационные системы и технологии. Часть 2. Лекция 10. MES-системы

Информационные системы и технологии. Часть 2. Лекция 10. MES-системы Более совершенная графика с модулем Tkinter

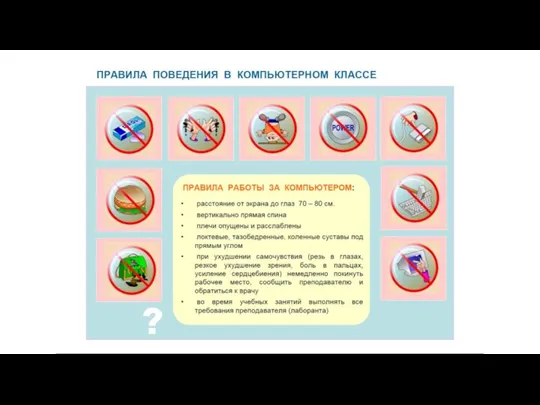

Более совершенная графика с модулем Tkinter Техника безопасности в компьютерном классе

Техника безопасности в компьютерном классе Виды склеек

Виды склеек Правила оформления заданий

Правила оформления заданий Daemon Tools Lite

Daemon Tools Lite Варианты получения информации

Варианты получения информации База данных SQLite. Лекция 12

База данных SQLite. Лекция 12 Создание мультимедийной презентации

Создание мультимедийной презентации Lektsia_GOST_R_ISO_MEK_12207_Osnovnye_protsessy_i_vzaimosvyaz_mezhdu_dokumentami_v_informatsionnoy_sisteme_soglasno_standartam

Lektsia_GOST_R_ISO_MEK_12207_Osnovnye_protsessy_i_vzaimosvyaz_mezhdu_dokumentami_v_informatsionnoy_sisteme_soglasno_standartam Параллельное программирование для ресурсоёмких задач численного моделирования в физике

Параллельное программирование для ресурсоёмких задач численного моделирования в физике Стандарты в области информационной безопасности в РФ

Стандарты в области информационной безопасности в РФ Рецепты для всех. Концепция проекта

Рецепты для всех. Концепция проекта Внутренние устройства компьютера

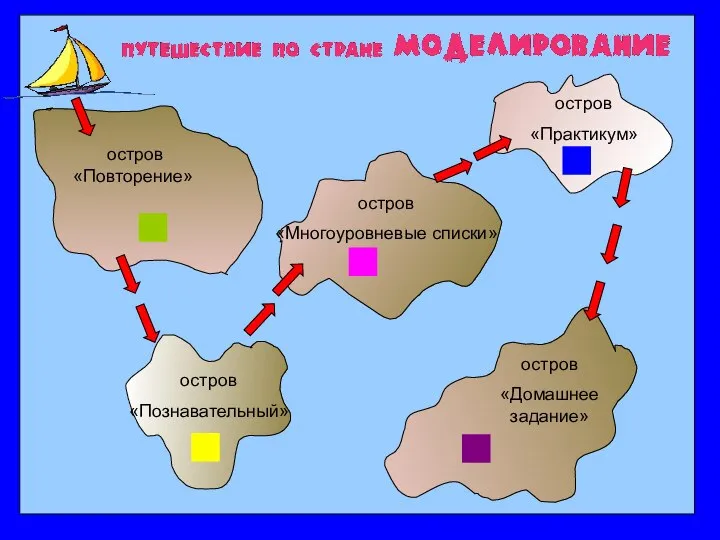

Внутренние устройства компьютера Путешествие по стране моделирование

Путешествие по стране моделирование Программная работа лр 130305 01 сд.уп.04 09 12

Программная работа лр 130305 01 сд.уп.04 09 12 Языки программирования

Языки программирования Применение табличного процессора Excel для расчета по имеющимся формулам

Применение табличного процессора Excel для расчета по имеющимся формулам Пройди сам и расскажи другим: как сделать эколого-краеведческий путеводитель

Пройди сам и расскажи другим: как сделать эколого-краеведческий путеводитель Основные этапы программирования как науки

Основные этапы программирования как науки Вплив пандемії COVID-19 на розвиток фінтеху

Вплив пандемії COVID-19 на розвиток фінтеху Изучение партнёрских программ в социальных сетях

Изучение партнёрских программ в социальных сетях