Contents

- 2. 我们会成功 We will succeed! У нас все получится [U nas vse poluchitsya]! Без

- 3. Lecture 3. Data preprocessing and machine learning with scikit-learn

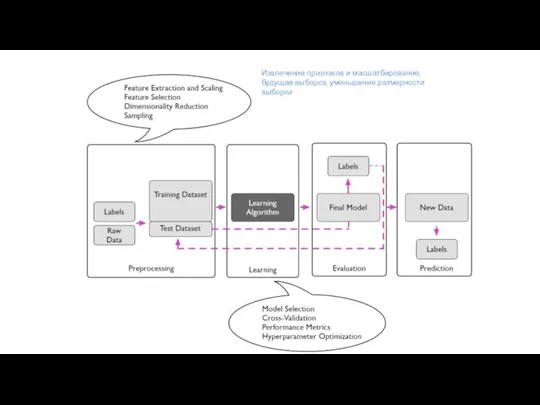

- 4. Feature extraction and scaling, feature selection, dimensionality reduction

- 5. Training set and testing set Machine learning is about learning some properties of a data set

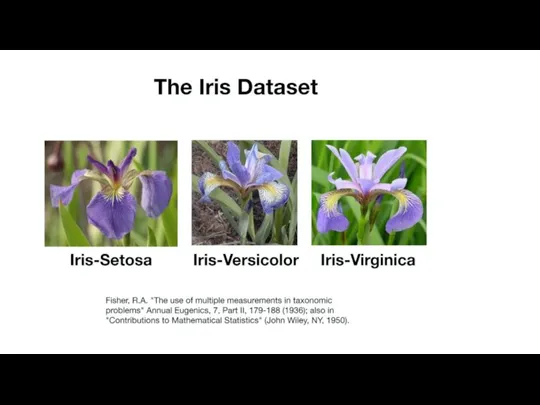

- 6. Reading a Dataset

- 8. Data Description: Attribute Information: 1. sepal length in cm 2. sepal width in cm 3.

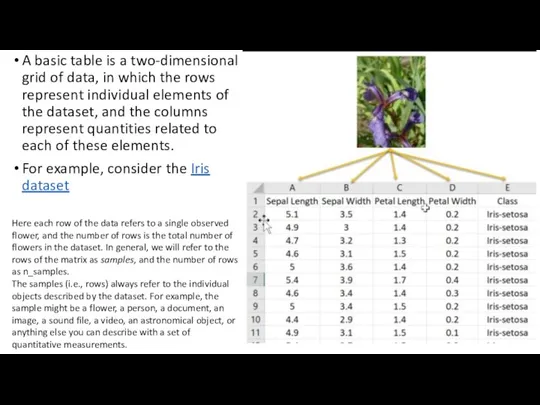

- 9. A basic table is a two-dimensional grid of data, in which the rows represent individual elements

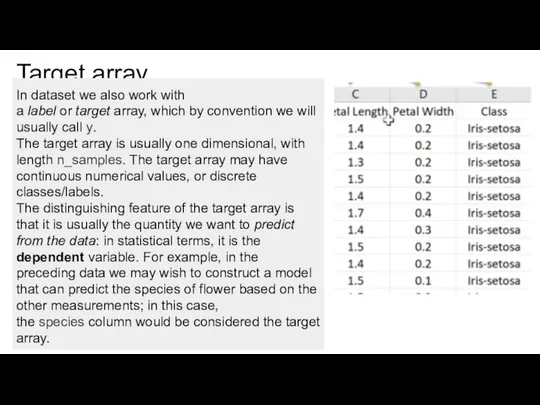

- 10. Target array In dataset we also work with a label or target array, which by convention

- 11. Basic Data Analysis: The dataset provided has 150 rows. Dependent Variables: Sepal length, Sepal width, Petal
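The features table, target array, and 150-row count described in slides 8 to 11 can be checked with a short sketch. It assumes the copy of the Iris data bundled with scikit-learn, whereas the lecture itself may read the data from a file. The variable names `X` and `y` are illustrative.

```python
from sklearn.datasets import load_iris

# Assumption: use the Iris copy shipped with scikit-learn rather than a CSV file.
iris = load_iris(as_frame=True)

X = iris.data    # features table: one row per flower, one column per measurement
y = iris.target  # target array: one class label per row

print(X.shape)          # number of rows and feature columns
print(list(X.columns))  # sepal/petal length and width, in cm
print(y.nunique())      # number of distinct classes
```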

- 12. The dataset is divided into Train and Test data with 80:20 split ratio where 80% data

- 13. Each training point belongs to one of N different classes. The goal is to construct a

- 14. What is scikit-learn? The scikit-learn library provides an implementation of a range of algorithms for Supervised

- 15. You can read the pandas and scikit-learn documentation at these sites: https://pandas.pydata.org/pandas-docs/stable/ https://scikit-learn.org/stable/documentation.html

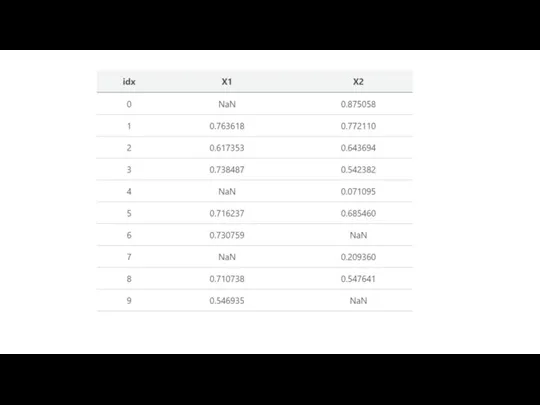

- 16. Preprocessing Data: missing data Real world data is filled with missing values. You will often need

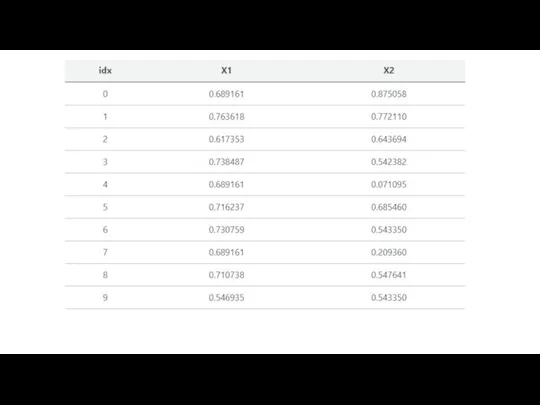

- 18. Method 1: Mean or Median A common method of imputation with numeric features is to replace

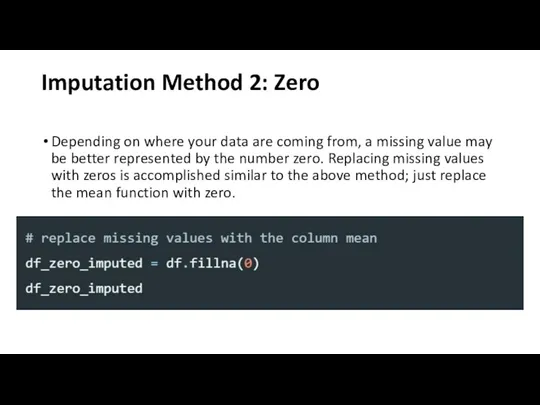

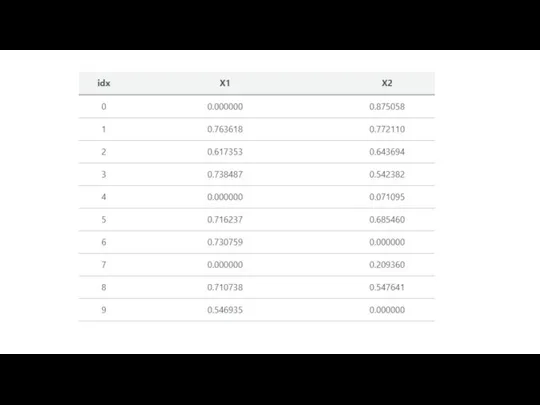
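Mean or median imputation of a numeric feature could look like the following minimal sketch. The `age` column and its values are hypothetical, chosen only to show that an outlier pulls the mean more than the median.

```python
import numpy as np
import pandas as pd

# Hypothetical numeric column with one missing value and one large outlier.
df = pd.DataFrame({"age": [20.0, np.nan, 40.0, 90.0]})

# Replace the missing entry with the column mean (affected by the outlier).
mean_filled = df["age"].fillna(df["age"].mean())

# Replace the missing entry with the column median (more robust to the outlier).
median_filled = df["age"].fillna(df["age"].median())

print(mean_filled.tolist())
print(median_filled.tolist())
```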

- 20. Imputation Method 2: Zero Depending on where your data are coming from, a missing value may

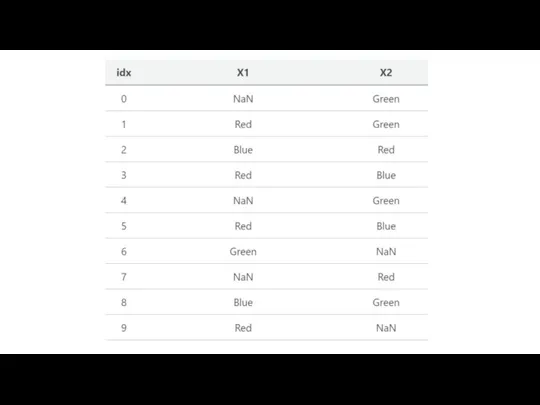
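Zero imputation can be sketched with scikit-learn's `SimpleImputer` using a constant fill value. The small array is hypothetical, and the slides may use pandas instead.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Hypothetical feature matrix in which a missing value means "nothing recorded".
X = np.array([[1.0, np.nan],
              [np.nan, 3.0]])

# strategy="constant" with fill_value=0 replaces every NaN with zero.
imputer = SimpleImputer(strategy="constant", fill_value=0)
X_filled = imputer.fit_transform(X)

print(X_filled)
```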

- 22. Imputation for Categorical Data For categorical features, using mean, median, or zero-imputation doesn’t make much sense.

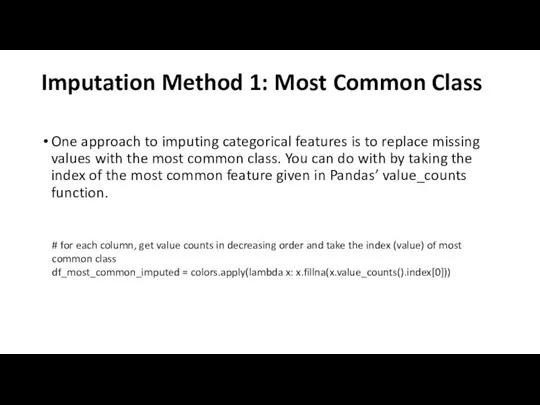

- 24. Imputation Method 1: Most Common Class One approach to imputing categorical features is to replace missing

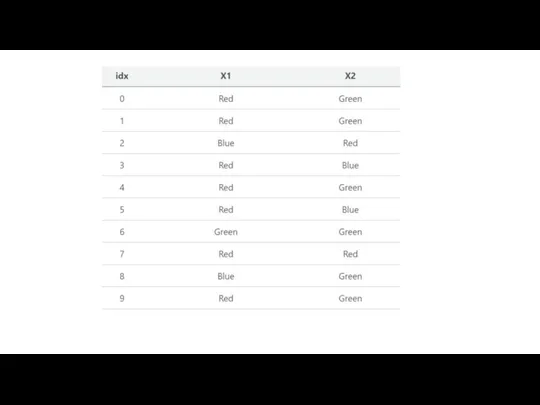

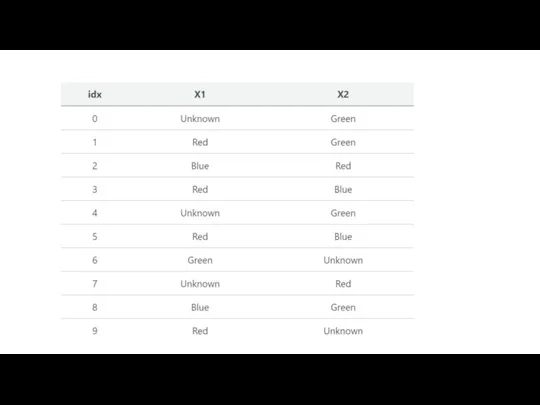
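Most-common-class imputation might be written as below. This is a sketch on a hypothetical `color` column, not the code from the slides.

```python
import numpy as np
import pandas as pd

# Hypothetical categorical column with one missing entry.
colors = pd.Series(["Red", "Green", "Red", np.nan], name="color")

# mode() ignores missing values; take the first mode if there is a tie.
most_common = colors.mode()[0]
filled = colors.fillna(most_common)

print(most_common)
print(filled.tolist())
```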

- 26. Imputation Method 2: “Unknown” Class Similar to how it’s sometimes most appropriate to impute a missing

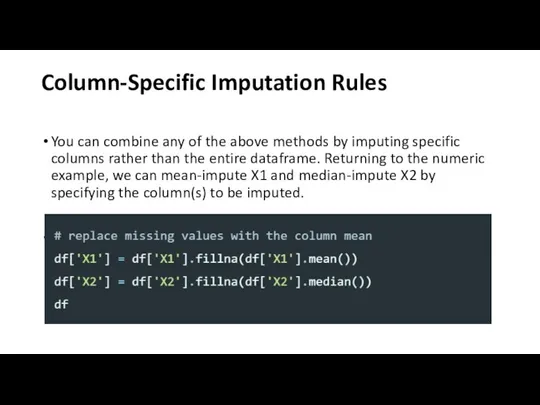
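Treating missingness as its own category can be sketched as follows. The column values are hypothetical, and the label `"Unknown"` follows the slide title.

```python
import pandas as pd

# Hypothetical categorical column; None marks a missing entry.
colors = pd.Series(["Red", None, "Green"], name="color")

# Keep the fact that the value was missing by giving it a dedicated class.
filled = colors.fillna("Unknown")

print(filled.tolist())
```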

- 28. Column-Specific Imputation Rules You can combine any of the above methods by imputing specific columns rather
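One way to apply a different rule per column is to pass a dictionary to pandas `fillna`. The column names and the rule chosen for each are assumptions made for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical table with missing values in every column.
df = pd.DataFrame({
    "age": [20.0, np.nan, 40.0],
    "visits": [3.0, np.nan, 1.0],
    "color": ["Red", None, "Red"],
})

# One rule per column: median for age, zero for visits, "Unknown" for color.
fill_values = {
    "age": df["age"].median(),
    "visits": 0,
    "color": "Unknown",
}
filled = df.fillna(value=fill_values)

print(filled)
```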

- 29. Preprocessing Data If data set are strings We saw in our initial exploration that most of

- 30. The image below represents a dataframe that has one column named ‘color’ and three records ‘Red’,

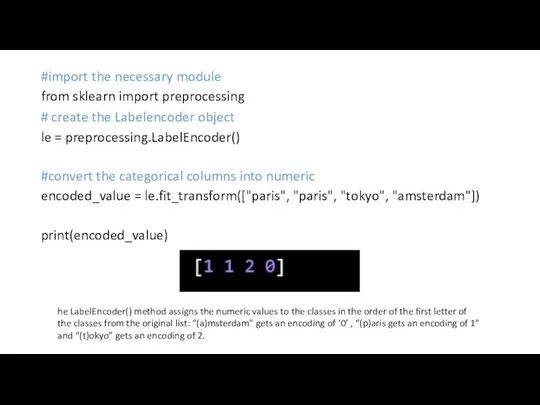

- 31. #import the necessary module from sklearn import preprocessing # create the Labelencoder object le = preprocessing.LabelEncoder()
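The `LabelEncoder` snippet above can be completed into a runnable sketch. Only `'Red'` is visible in the truncated slide text, so the other two values (`'Green'`, `'Blue'`) and the `color_encoded` column name are assumptions.

```python
import pandas as pd
from sklearn import preprocessing

# Hypothetical dataframe with a single string column named 'color'.
df = pd.DataFrame({"color": ["Red", "Green", "Blue"]})

# Create the LabelEncoder object and map each distinct string to an integer.
le = preprocessing.LabelEncoder()
df["color_encoded"] = le.fit_transform(df["color"])

print(list(le.classes_))  # classes are stored in sorted order
print(df)
```

The integer codes follow the sorted order of the class names, so they imply an ordering that the colors do not really have. scikit-learn documents `LabelEncoder` as a tool for target labels. For input features, `OneHotEncoder` or `OrdinalEncoder` may be a better fit.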

- 32. Training Set & Test Set A Machine Learning algorithm needs to be trained on a set

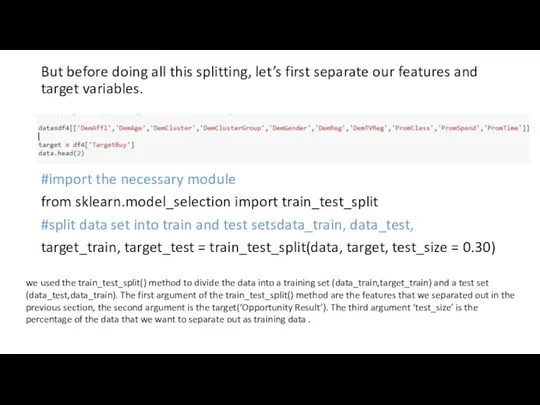

- 33. But before doing all this splitting, let’s first separate our features and target variables. #import the
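Separating features from the target and then making the 80:20 split could look like this sketch. It assumes the Iris data bundled with scikit-learn. The `random_state` and `stratify` arguments are additions for reproducibility and class balance, not something the slides specify.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Separate the features (X) from the target variable (y).
X, y = load_iris(return_X_y=True)

# test_size=0.2 gives the 80:20 train/test ratio.
# Assumptions: random_state fixes the shuffle; stratify keeps class proportions equal.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(X_train.shape, X_test.shape)
```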

- 34. Watch subtitled video https://www.coursera.org/lecture/machine-learning/what-is-machine-learning-Ujm7v

- 38. Скачать презентацию

Рыба и рыбные продукты

Рыба и рыбные продукты NEFCO Целевые экологические программы

NEFCO Целевые экологические программы Основы законодательства РФ об охране здоровья гражданина

Основы законодательства РФ об охране здоровья гражданина 3.Essen und trinken

3.Essen und trinken Оценка эффективности проекта

Оценка эффективности проекта Анализ материалов

Анализ материалов Отчет по рекламе для студии воздушного фитнеса и йоги Притяжение за март 2019

Отчет по рекламе для студии воздушного фитнеса и йоги Притяжение за март 2019 Cистемная сорганизация бизнес-процессов

Cистемная сорганизация бизнес-процессов муниципальное бюджетное общеобразовательное учреждение "Начальная общеобразовательная школа № 41"

муниципальное бюджетное общеобразовательное учреждение "Начальная общеобразовательная школа № 41" Проектное бюро 3D плюс. Строительство зданий на основе визуализации в 3D формате объемнопланировочных решений

Проектное бюро 3D плюс. Строительство зданий на основе визуализации в 3D формате объемнопланировочных решений Внутреннее и внешнее устройство храма и правила поведения в храме

Внутреннее и внешнее устройство храма и правила поведения в храме Электроустановочные устройства квартиной электросети

Электроустановочные устройства квартиной электросети Рассказ А.И.Солженицына «Один день из жизни Ивана Денисовича»

Рассказ А.И.Солженицына «Один день из жизни Ивана Денисовича» Коза-заяц

Коза-заяц Виды кормов Ландор для кошек

Виды кормов Ландор для кошек Новая линейка мелкой бытовой техники Анна Фендрих Октябрь, 2 2009

Новая линейка мелкой бытовой техники Анна Фендрих Октябрь, 2 2009 Опорные схемы учебно – воспитательного курса «Основы религиозных культур и светской этики».

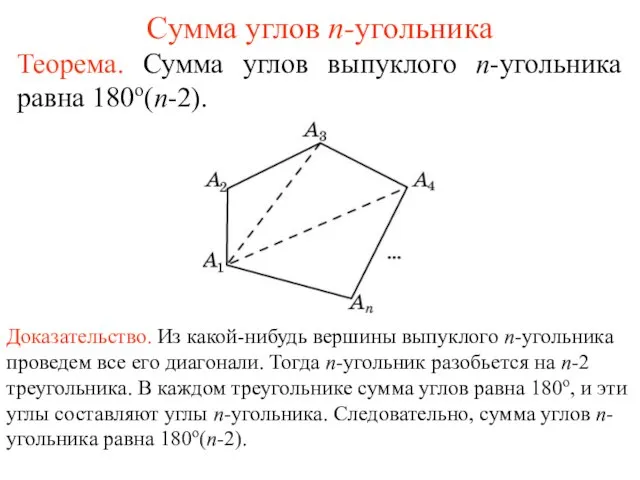

Опорные схемы учебно – воспитательного курса «Основы религиозных культур и светской этики». Сумма углов n-угольника

Сумма углов n-угольника Презентация группы компаний «НД»: «Автоматический платежный терминал для АЗС» «Холодная» АЗС в украинских реалиях: законодатель

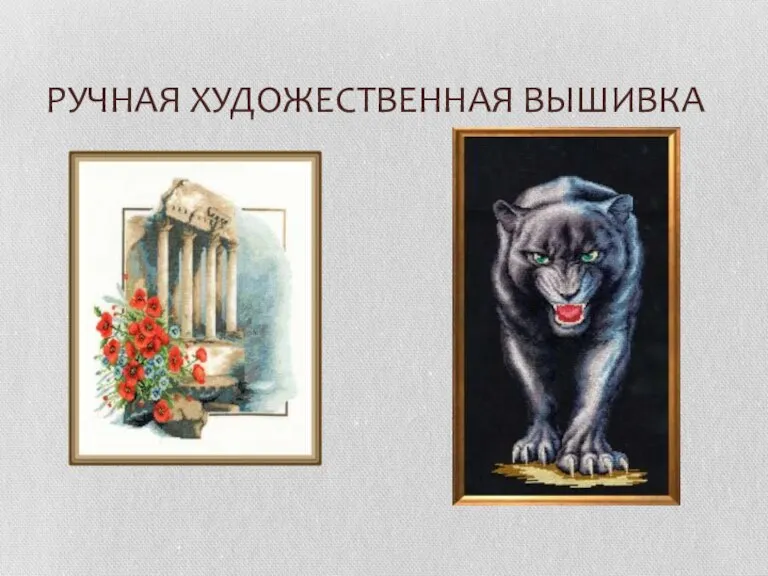

Презентация группы компаний «НД»: «Автоматический платежный терминал для АЗС» «Холодная» АЗС в украинских реалиях: законодатель Ручная художественная вышивка

Ручная художественная вышивка УП «РЕКЛАМНАЯ КУХНЯ»

УП «РЕКЛАМНАЯ КУХНЯ» Презентация на тему Восклицательные предложения

Презентация на тему Восклицательные предложения Храмы России

Храмы России Картина изохром

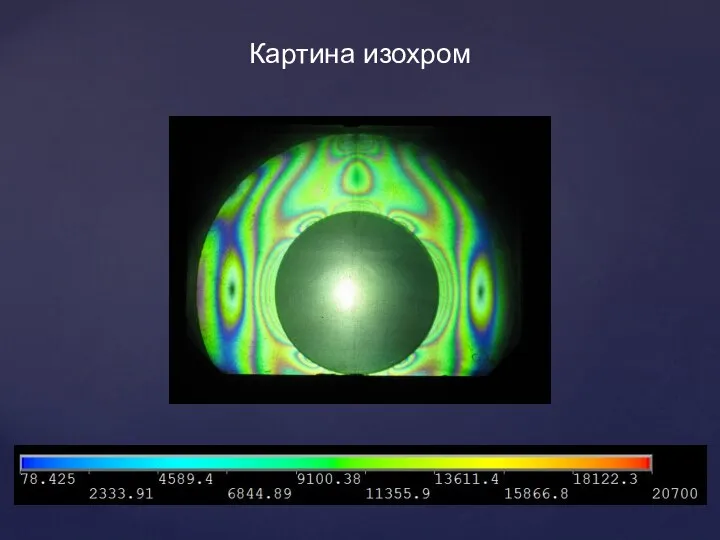

Картина изохром Самый лучший спортсмен. Федор Чудинов

Самый лучший спортсмен. Федор Чудинов Игры к празднику Масленицы

Игры к празднику Масленицы Упражнения для мозга

Упражнения для мозга Последний император Николай II

Последний император Николай II