Contents

- 2. This is an example plot of a linear function: The nature of the relationship between variables can

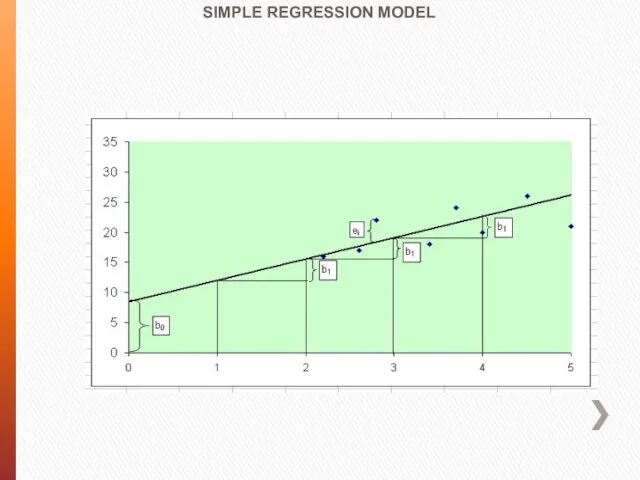

- 3. SIMPLE REGRESSION MODEL Suppose that a variable Y is a linear function of another

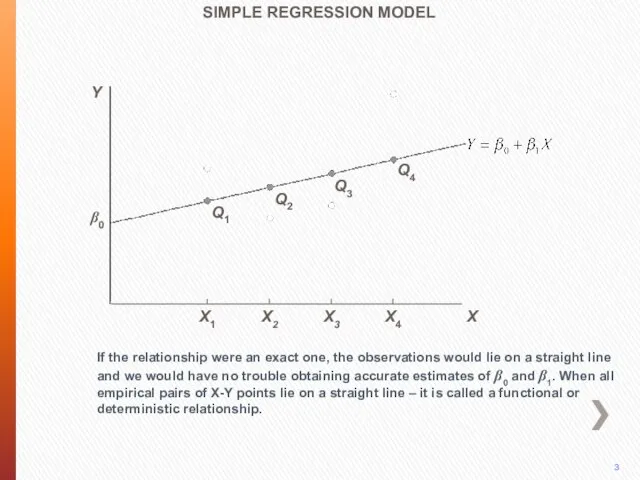

- 4. If the relationship were an exact one, the observations would lie on a straight line and

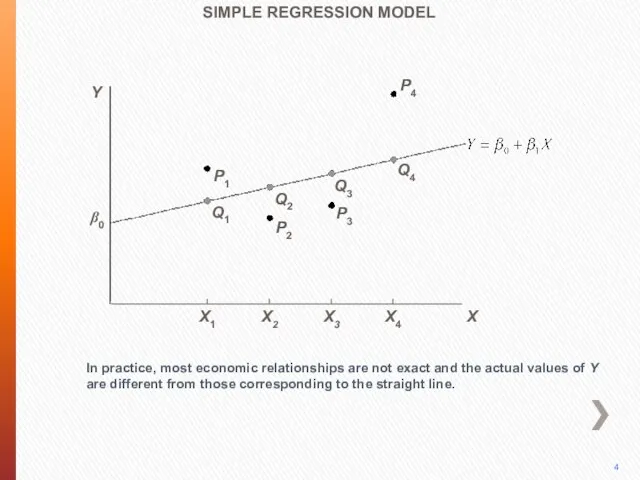

- 5. In practice, most economic relationships are not exact and the actual values of Y are

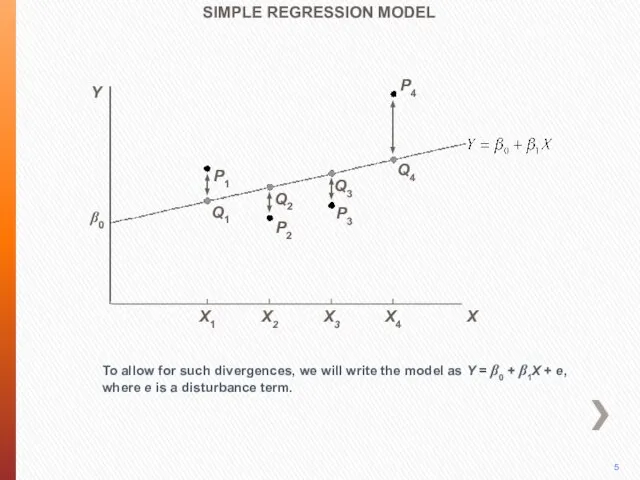

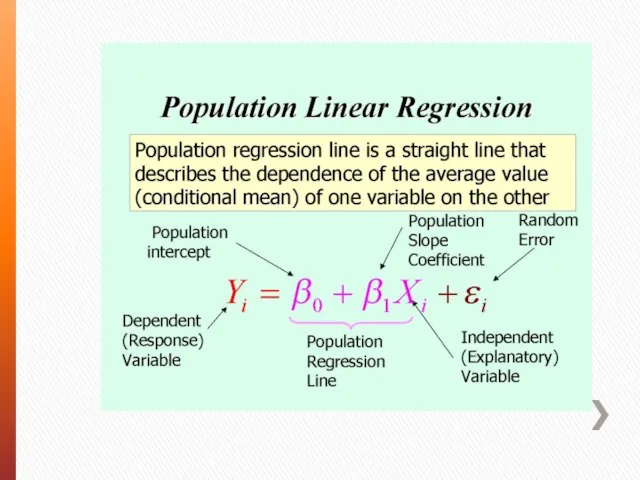

- 6. To allow for such divergences, we will write the model as $Y = \beta_0 + \beta_1 X + u$, where $u$ is a random disturbance term.

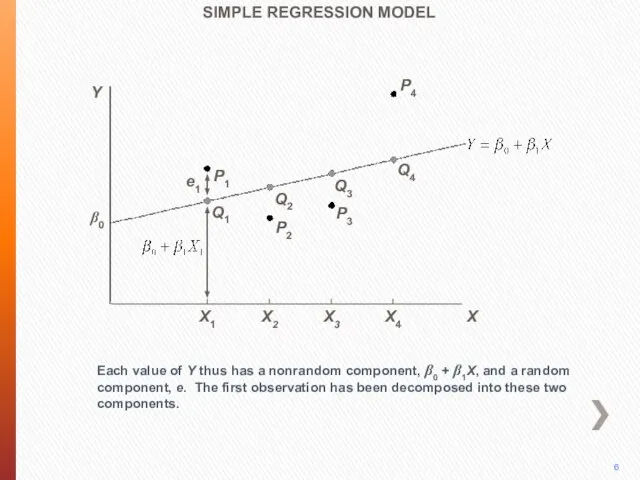

- 7. Each value of Y thus has a nonrandom component, $\beta_0 + \beta_1 X$, and a random component.

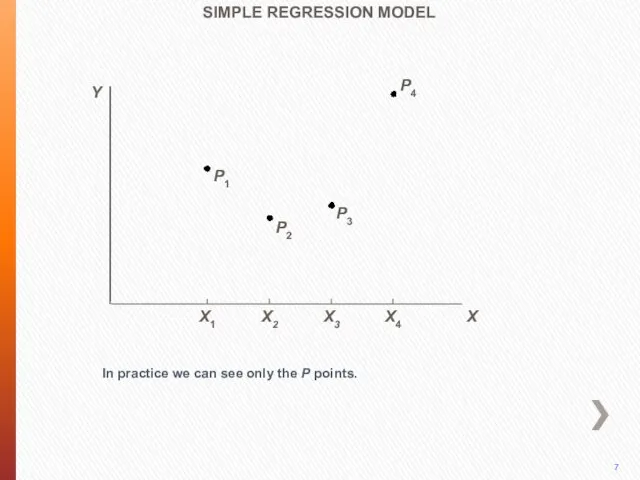
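
A short simulation makes the split into a nonrandom and a random component concrete. This is a minimal sketch under assumptions that the slides do not state: the disturbance u is drawn from a normal distribution, and the values β0 = 2, β1 = 0.5, σ = 1 and the four X values are hypothetical.

```python
import random

def simulate(beta0, beta1, xs, sigma, seed=0):
    """Generate Y = beta0 + beta1*X + u, with u ~ Normal(0, sigma) (assumed)."""
    rng = random.Random(seed)                 # seeded for reproducibility
    us = [rng.gauss(0.0, sigma) for _ in xs]  # random component
    ys = [beta0 + beta1 * x + u for x, u in zip(xs, us)]
    return ys, us

xs = [1.0, 2.0, 3.0, 4.0]  # four hypothetical observations, like P1..P4
ys, us = simulate(beta0=2.0, beta1=0.5, xs=xs, sigma=1.0)
for x, y, u in zip(xs, ys, us):
    nonrandom = 2.0 + 0.5 * x  # the point on the true line
    print(f"X={x}: nonrandom={nonrandom:.2f}, u={u:+.2f}, Y={y:.2f}")
```

Each observed Y sits off the true line by exactly its disturbance u; in real data only Y and X are observed, never u itself.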

- 8. In practice we can see only the P points.

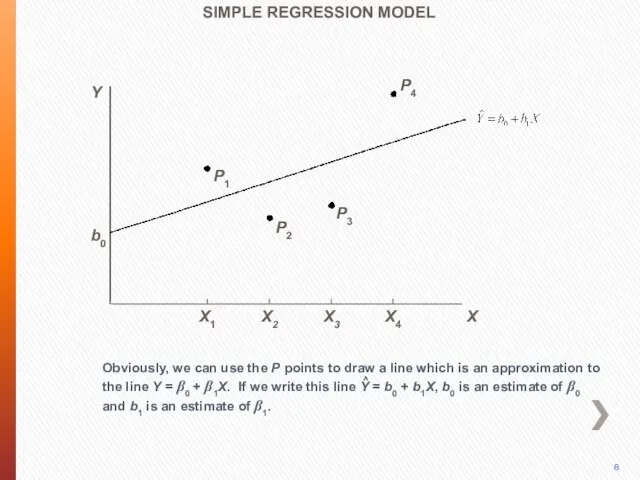

- 9. Obviously, we can use the P points to draw a line which is an approximation

- 11. However, we have obtained data from only a random sample of the population. For a sample,

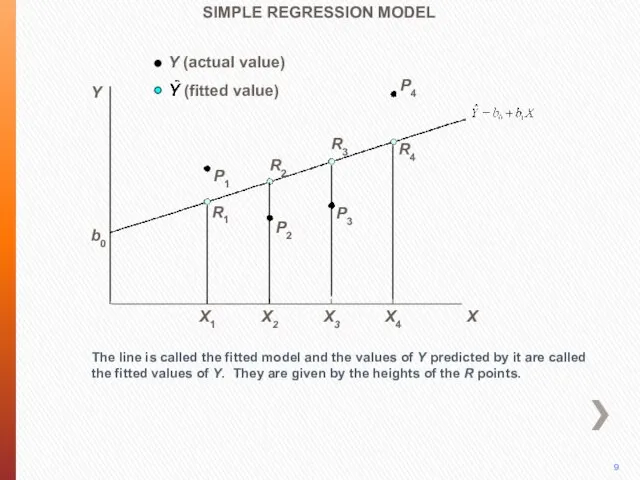

- 12. The line is called the fitted model and the values of Y predicted by it

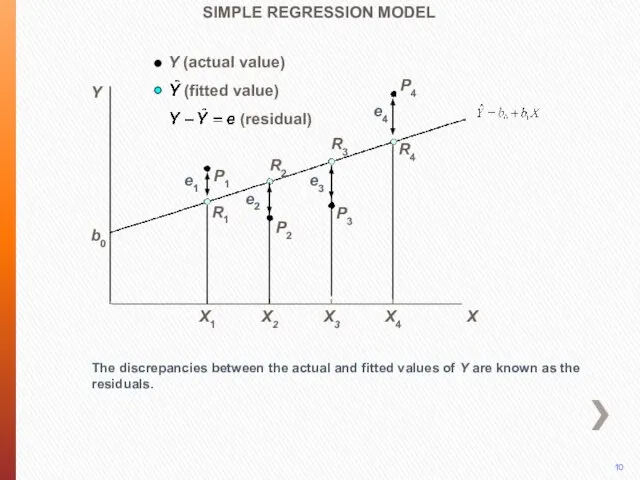

- 13. The discrepancies between the actual and fitted values of Y are known as the residuals.

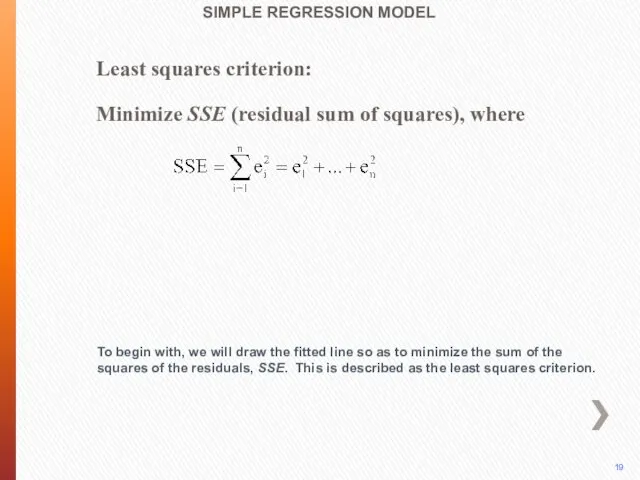

- 14. SIMPLE REGRESSION MODEL Least squares criterion: minimize SSE (the residual sum of squares), where $SSE = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2$. To begin with,

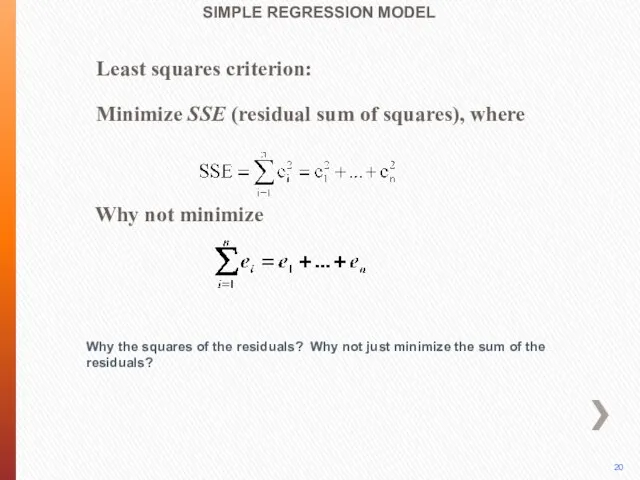

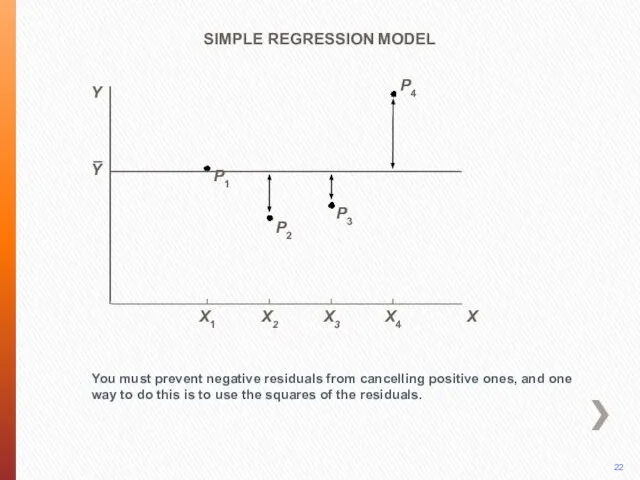
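
The residual and SSE definitions above translate directly into code. This is a minimal sketch; the data and the two candidate lines are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def fitted(b0, b1, xs):
    """Fitted values Y-hat = b0 + b1*X."""
    return [b0 + b1 * x for x in xs]

def residuals(b0, b1, xs, ys):
    """Residuals e = Y - Y-hat."""
    return [y - yhat for y, yhat in zip(ys, fitted(b0, b1, xs))]

def sse(b0, b1, xs, ys):
    """Residual sum of squares for the candidate line (b0, b1)."""
    return sum(e ** 2 for e in residuals(b0, b1, xs, ys))

xs = [1, 2, 3, 4]  # hypothetical data
ys = [3, 5, 4, 7]
# Compare two candidate lines; the least squares criterion prefers the smaller SSE.
print(sse(1.0, 1.2, xs, ys), sse(2.0, 1.1, xs, ys))
```

Least squares searches over all (b0, b1) pairs for the one with the smallest SSE rather than comparing a handful of guesses.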

- 15. SIMPLE REGRESSION MODEL Why the squares of the residuals? Why not just minimize the sum of

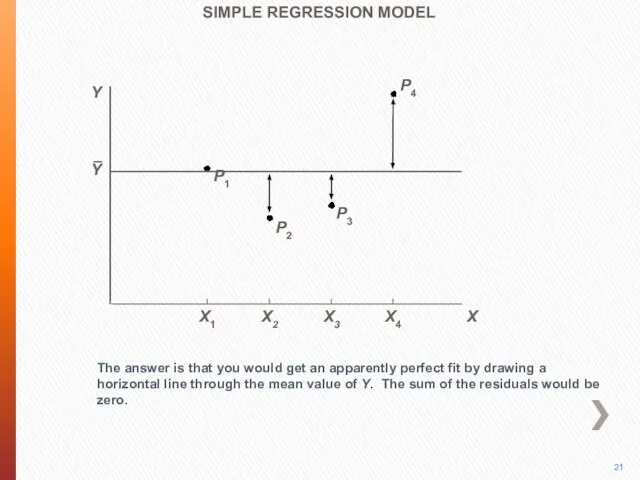

- 16. The answer is that you would get an apparently perfect fit by drawing a horizontal

- 17. You must prevent negative residuals from cancelling positive ones, and one way to do this

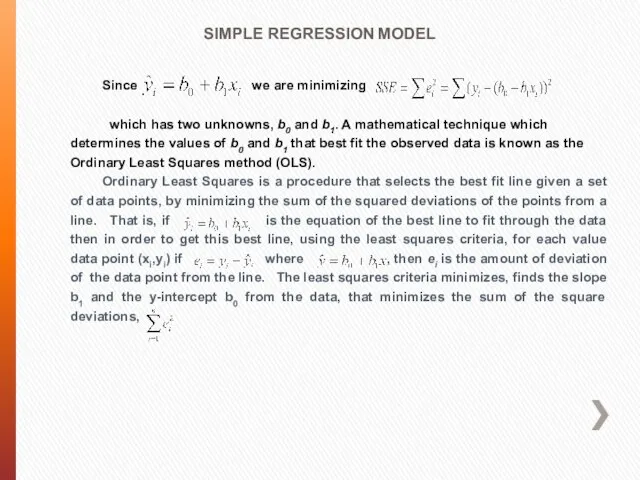
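
The cancellation problem can be checked numerically. In this sketch (hypothetical data), a horizontal line through the mean of Y has residuals that sum to exactly zero, so by the plain-sum criterion it looks perfect, while its SSE shows it fits worse than a sloped line.

```python
xs = [1, 2, 3, 4]          # hypothetical data
ys = [3, 5, 4, 7]
ybar = sum(ys) / len(ys)   # mean of Y

def residuals(b0, b1):
    """Residuals Y - (b0 + b1*X) for the line with intercept b0 and slope b1."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

flat = residuals(ybar, 0.0)    # horizontal line through the mean of Y
sloped = residuals(1.0, 1.2)   # an arbitrary sloped line, for comparison

sum_flat, sse_flat = sum(flat), sum(e ** 2 for e in flat)
sum_sloped, sse_sloped = sum(sloped), sum(e ** 2 for e in sloped)
print("horizontal:", sum_flat, sse_flat)
print("sloped:    ", sum_sloped, sse_sloped)
```

Squaring makes every residual count positively, so large misses in either direction are penalized.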

- 18. SIMPLE REGRESSION MODEL Since we are minimizing $SSE = \sum_{i=1}^{n} (Y_i - b_0 - b_1 X_i)^2$, which has two unknowns, b0 and b1. A mathematical

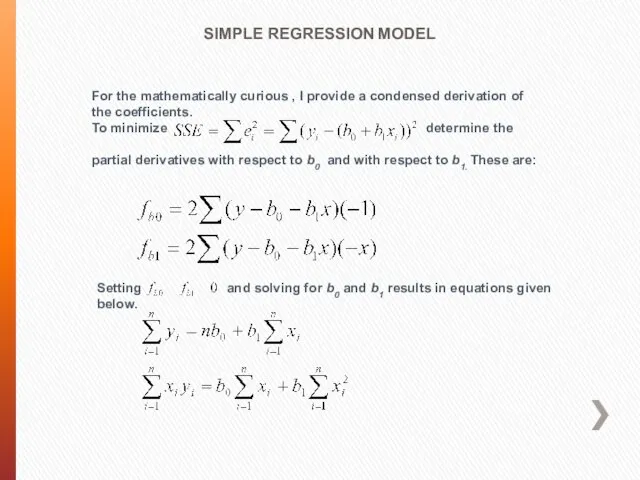

- 19. SIMPLE REGRESSION MODEL For the mathematically curious, I provide a condensed derivation of the coefficients.

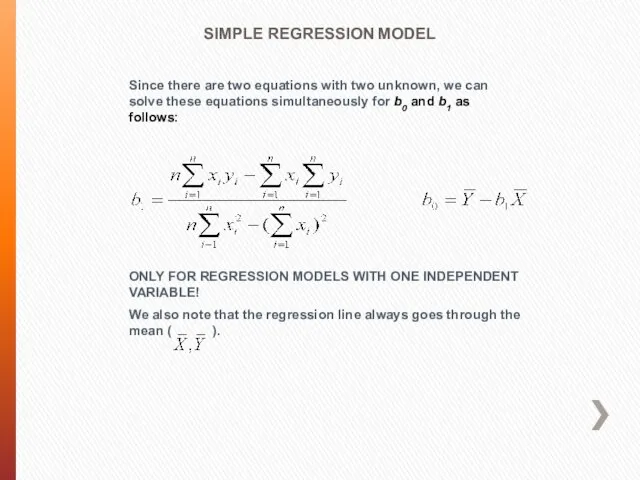

- 20. Since there are two equations with two unknowns, we can solve these equations simultaneously for b0 and b1.

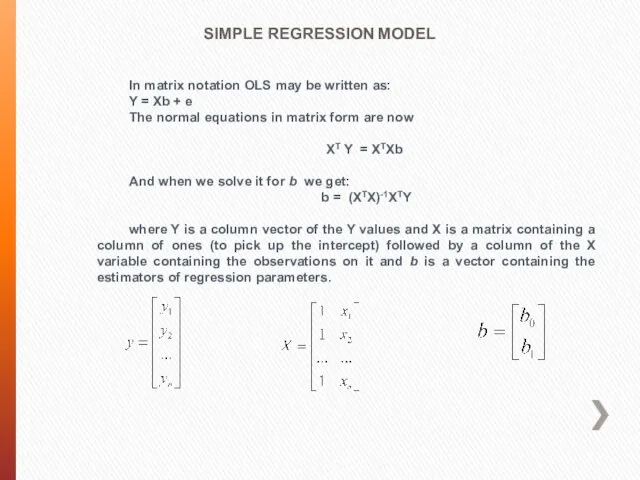
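
Solving the two normal equations gives the standard textbook result $b_1 = \sum (X_i - \bar{X})(Y_i - \bar{Y}) / \sum (X_i - \bar{X})^2$ and $b_0 = \bar{Y} - b_1 \bar{X}$. The sketch below computes both on hypothetical data and then checks that the residuals satisfy the two normal equations.

```python
def ols_simple(xs, ys):
    """Least-squares intercept b0 and slope b1 for Y = b0 + b1*X + e."""
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    # Sums of cross-products and squares of deviations from the means
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("X does not vary, so the slope is not identified")
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    return b0, b1

xs = [1, 2, 3, 4]  # hypothetical data
ys = [3, 5, 4, 7]
b0, b1 = ols_simple(xs, ys)
res = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
print(b0, b1)
# The two normal equations: sum(e) = 0 and sum(X * e) = 0
print(sum(res), sum(x * e for x, e in zip(xs, res)))
```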

- 21. SIMPLE REGRESSION MODEL In matrix notation, OLS may be written as $Y = Xb + e$.

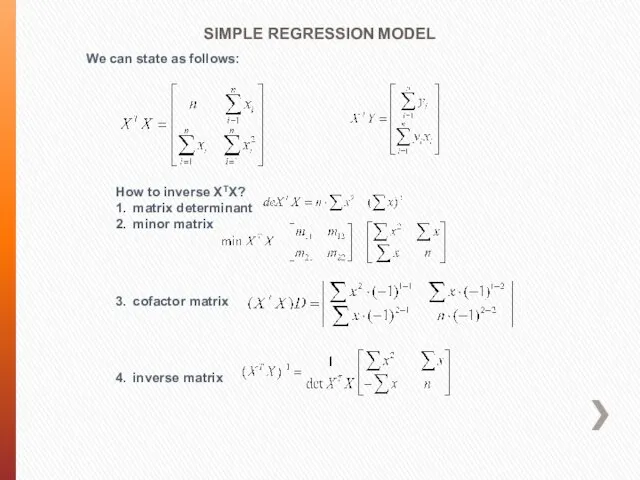

- 22. SIMPLE REGRESSION MODEL We can state the solution as follows: $b = (X^T X)^{-1} X^T Y$. How do we invert $X^T X$? 1. Matrix determinant; 2.

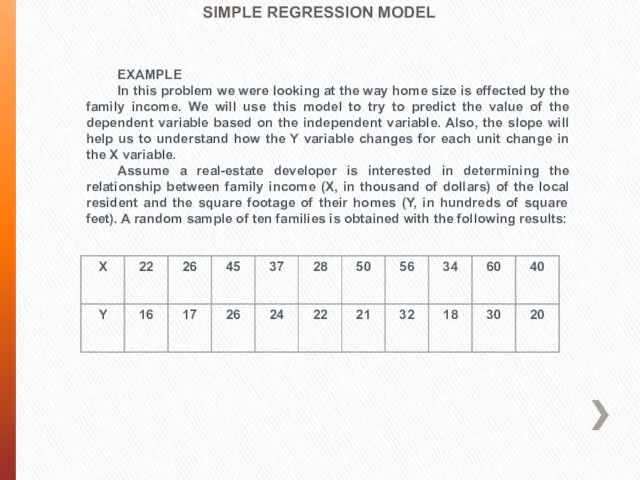
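
For simple regression $X^T X$ is a 2×2 matrix, so the determinant route is easy to write out: the inverse of $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$ is $\frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$. This is a minimal pure-Python sketch of $b = (X^T X)^{-1} X^T Y$; the helper names and the data are hypothetical.

```python
def transpose(m):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]

def matmul(p, q):
    """Multiply matrices p (r x s) and q (s x t)."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]

def inverse_2x2(m):
    """Invert [[a, b], [c, d]] as (1/det) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("X^T X is singular: its determinant is zero")
    return [[d / det, -b / det], [-c / det, a / det]]

xs = [1, 2, 3, 4]          # hypothetical data
ys = [3, 5, 4, 7]
X = [[1, x] for x in xs]   # first column of ones carries the intercept b0
Y = [[y] for y in ys]      # Y as a column vector
Xt = transpose(X)
coef = matmul(inverse_2x2(matmul(Xt, X)), matmul(Xt, Y))
b0, b1 = coef[0][0], coef[1][0]
print(b0, b1)
```

Here $X^T X = \begin{pmatrix} 4 & 10 \\ 10 & 30 \end{pmatrix}$ with determinant 20, and the result agrees with the deviation formulas for b0 and b1.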

- 23. SIMPLE REGRESSION MODEL EXAMPLE In this problem we were looking at the way home size is

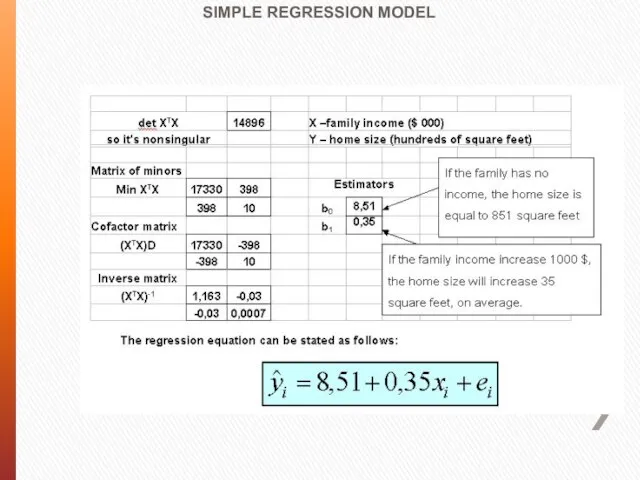

- 24. SIMPLE REGRESSION MODEL

- 25. SIMPLE REGRESSION MODEL

- 26. SIMPLE REGRESSION MODEL

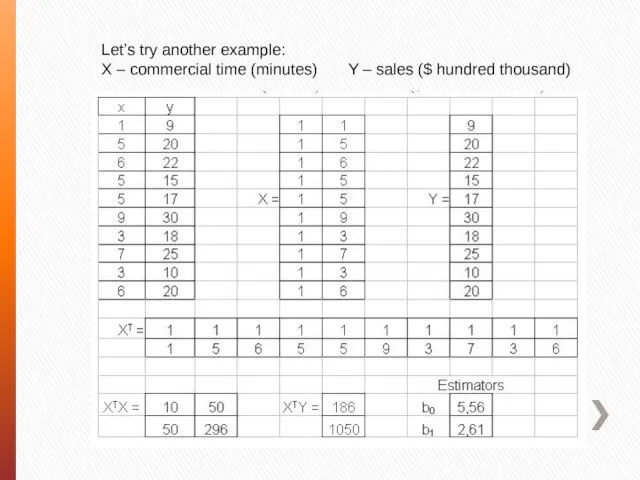

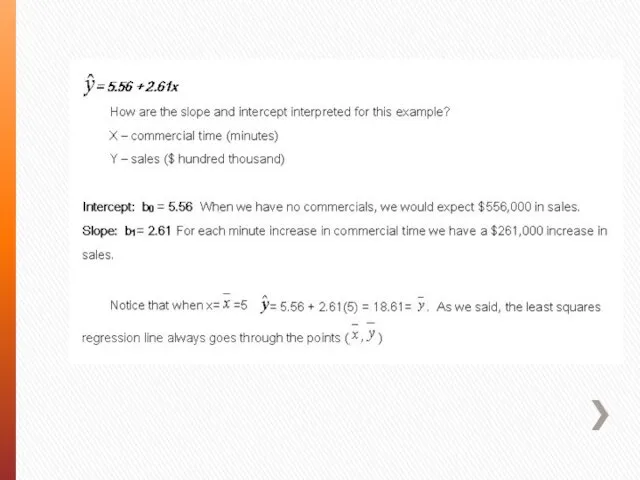

- 27. Let’s try another example: X = commercial time (minutes), Y = sales (hundreds of thousands of dollars).

- 29. REGRESSION MODEL WITH TWO EXPLANATORY VARIABLES

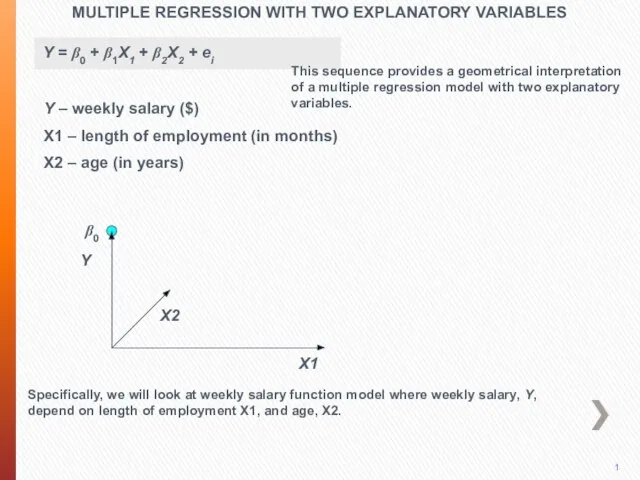

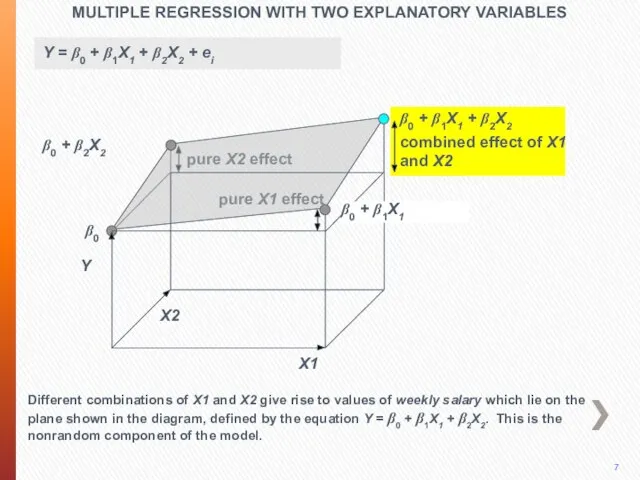

- 30. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES This sequence provides a geometrical

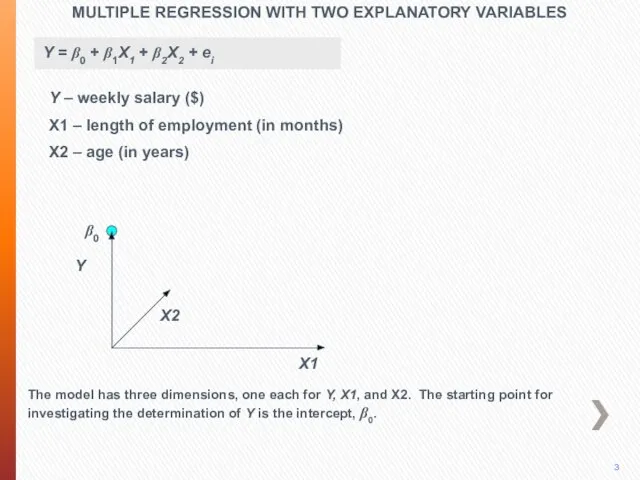

- 31. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES The model has three dimensions,

- 32. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES Literally, the intercept gives weekly

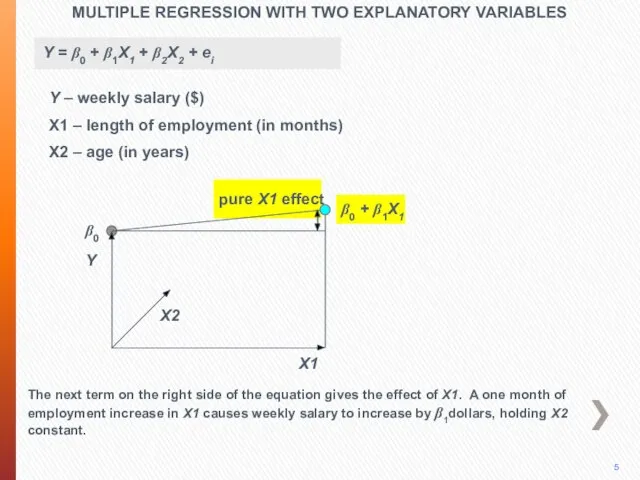

- 33. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES The next term on the right side

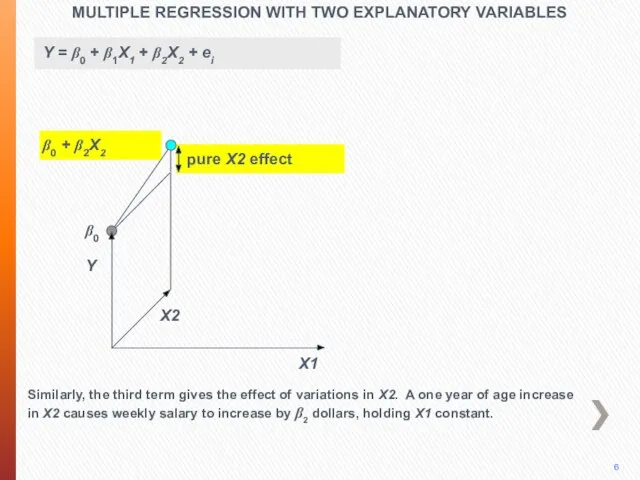

- 34. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES (figure: pure X2 effect, from $\beta_0$ to $\beta_0 + \beta_2 X_2$; axes Y, X1, X2)

- 35. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES (figure: pure X2 effect and pure X1 effect)

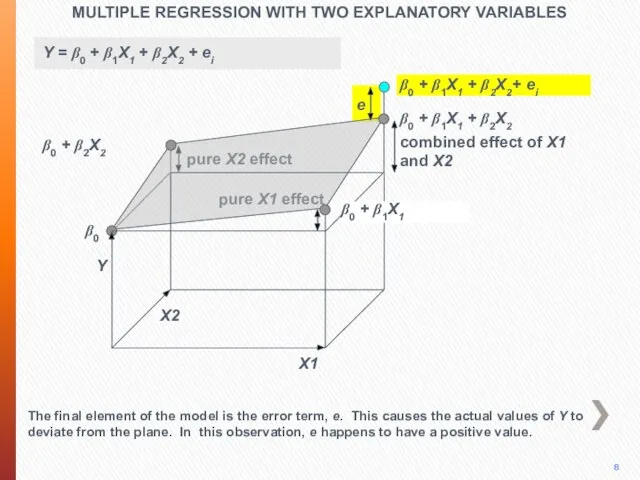

- 36. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES (figure: pure X2 effect and pure X1 effect)

- 37. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES (figure: pure X2 effect and pure X1 effect combined, $\beta_0 + \beta_1 X_1 + \beta_2 X_2$)

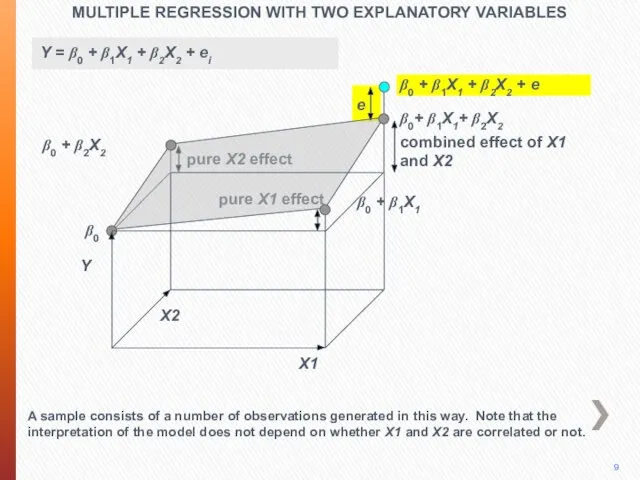

- 38. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES (figure: pure X2 effect and pure X1 effect)

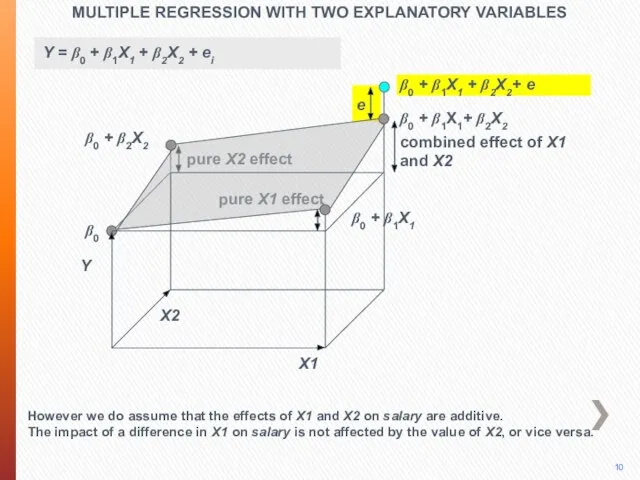

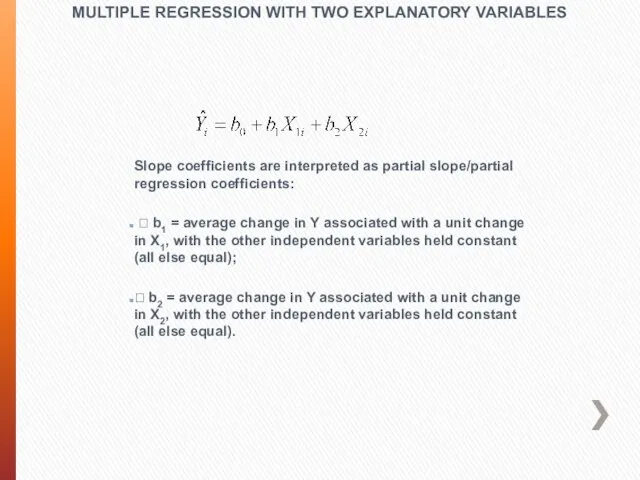

- 39. Slope coefficients are interpreted as partial slope (partial regression) coefficients: b1 = average change in Y

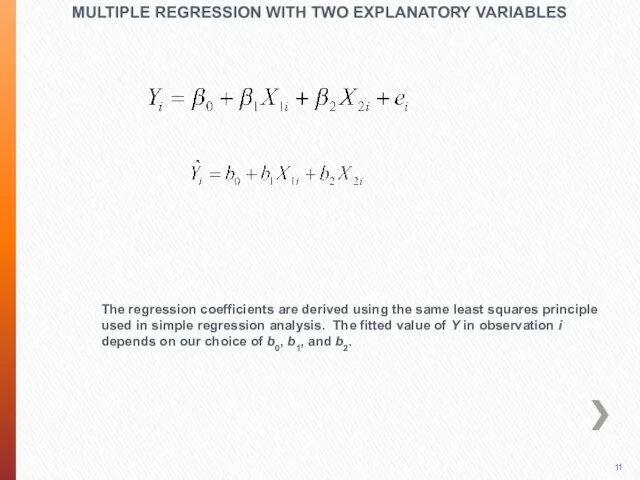
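
"Partial" means that each slope measures the change in the fitted value when its own variable rises by one unit while the other variable is held fixed. The sketch below shows this with hypothetical coefficients and a hypothetical starting point; none of the numbers come from the slides.

```python
def predict(b0, b1, b2, x1, x2):
    """Fitted value Y-hat = b0 + b1*X1 + b2*X2."""
    return b0 + b1 * x1 + b2 * x2

# Hypothetical coefficients and starting point, for illustration only
b0, b1, b2 = 10.0, 2.5, -0.4
x1, x2 = 12.0, 30.0

base = predict(b0, b1, b2, x1, x2)
change_x1 = predict(b0, b1, b2, x1 + 1, x2) - base  # X2 held fixed
change_x2 = predict(b0, b1, b2, x1, x2 + 1) - base  # X1 held fixed
print(change_x1, change_x2)
```

Because the model is linear, the change is the same at any starting point (x1, x2).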

- 40. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES The regression coefficients are derived using the same least squares

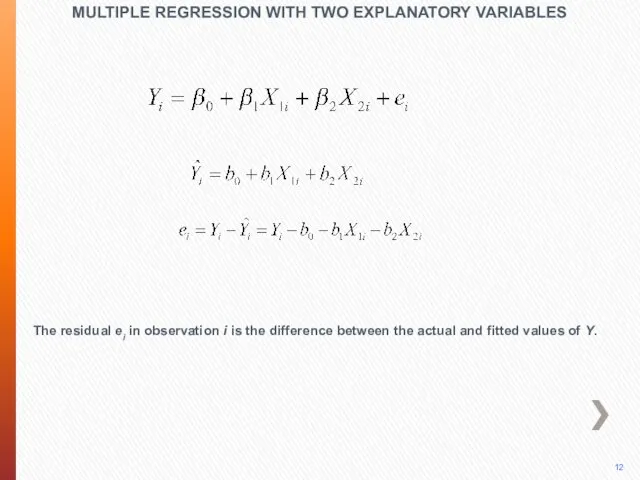

- 41. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES The residual $e_i$ in observation i is the difference between

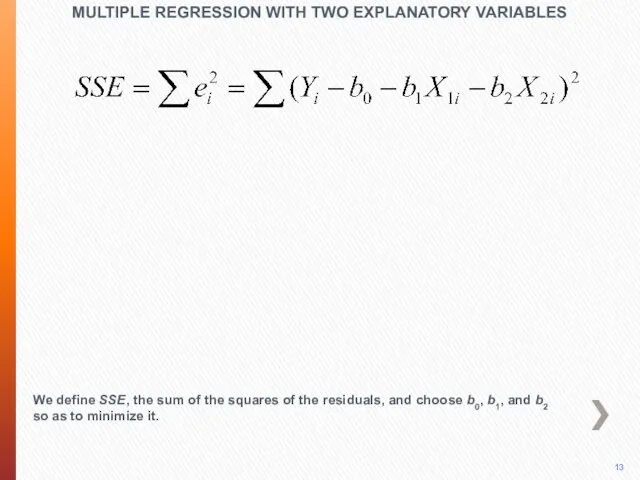

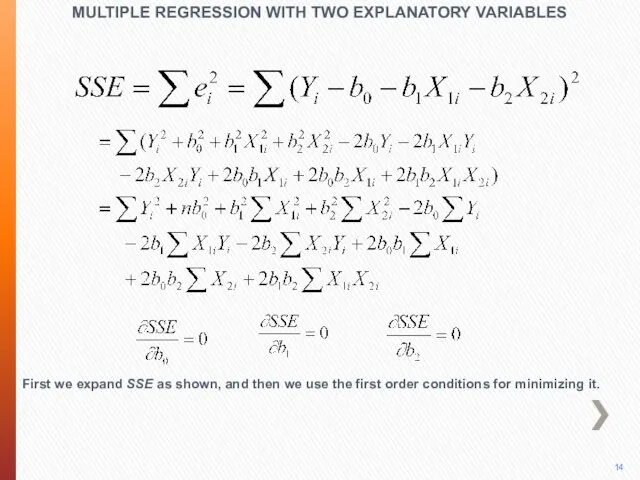

- 42. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES We define SSE, the sum of the squares of the

- 43. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES First we expand SSE as shown, and then we use

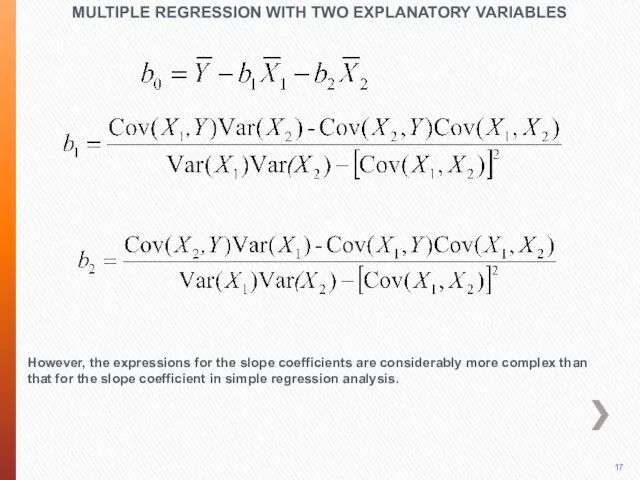

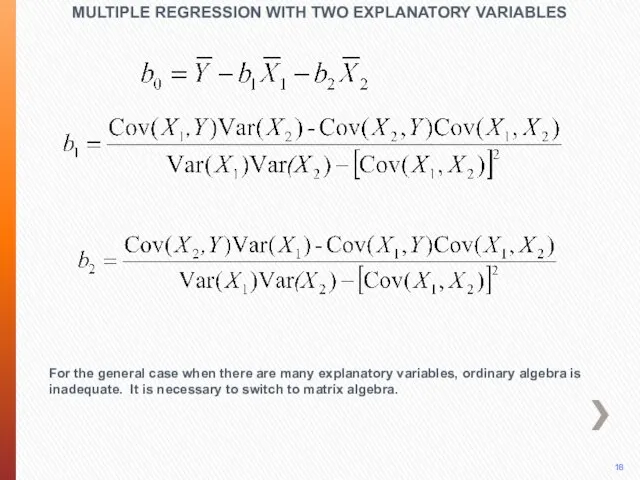

- 44. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES We thus obtain three equations in three unknowns. Solving for

- 45. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES The expression for b0 is a straightforward extension of the

- 46. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES However, the expressions for the slope coefficients are considerably more

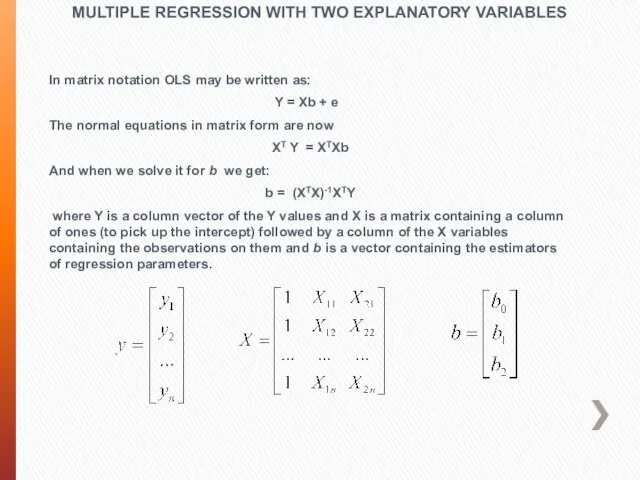
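
Written in deviations from the means ($x_1 = X_1 - \bar{X}_1$, $x_2 = X_2 - \bar{X}_2$, $y = Y - \bar{Y}$), the standard textbook solution is $b_1 = \frac{\sum x_1 y \sum x_2^2 - \sum x_2 y \sum x_1 x_2}{\sum x_1^2 \sum x_2^2 - (\sum x_1 x_2)^2}$, with $b_2$ symmetric and $b_0 = \bar{Y} - b_1 \bar{X}_1 - b_2 \bar{X}_2$. The slides' own notation for these expressions is not visible in the text, so this sketch uses those textbook forms on hypothetical data that lie exactly on a known plane, which lets us see that the formulas recover it.

```python
def ols_two(x1s, x2s, ys):
    """OLS for Y = b0 + b1*X1 + b2*X2 + e via deviations from the means."""
    n = len(ys)
    m1, m2, my = sum(x1s) / n, sum(x2s) / n, sum(ys) / n
    d1 = [x - m1 for x in x1s]
    d2 = [x - m2 for x in x2s]
    dy = [y - my for y in ys]

    def s(u, v):
        # Sum of products of two deviation series
        return sum(a * b for a, b in zip(u, v))

    den = s(d1, d1) * s(d2, d2) - s(d1, d2) ** 2
    if den == 0:
        raise ValueError("X1 and X2 are perfectly collinear")
    b1 = (s(d1, dy) * s(d2, d2) - s(d2, dy) * s(d1, d2)) / den
    b2 = (s(d2, dy) * s(d1, d1) - s(d1, dy) * s(d1, d2)) / den
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2

# Hypothetical data lying exactly on Y = 1 + 2*X1 - 3*X2
x1s = [1, 2, 3, 4, 5]
x2s = [2, 1, 4, 3, 6]
ys = [1 + 2 * a - 3 * b for a, b in zip(x1s, x2s)]
b0, b1, b2 = ols_two(x1s, x2s, ys)
print(b0, b1, b2)
```

The denominator is zero exactly when X1 and X2 are perfectly correlated, which is why the slope formulas break down under perfect multicollinearity.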

- 47. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES For the general case when there are many explanatory variables,

- 48. In matrix notation, OLS may be written as $Y = Xb + e$. The normal equations

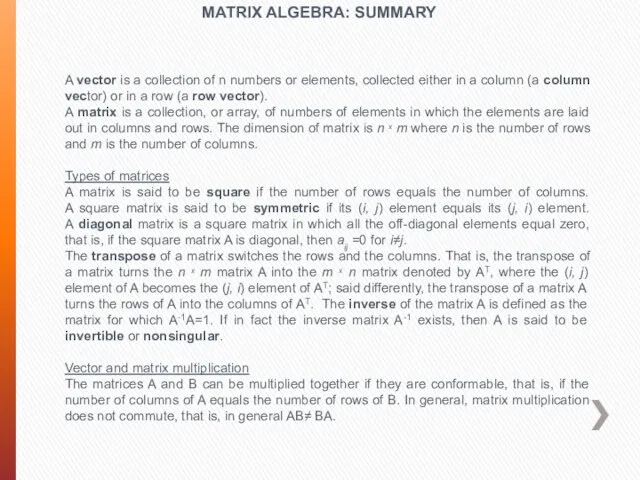
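
For any number of explanatory variables, the normal equations $(X^T X) b = X^T Y$ form a square linear system. This is a minimal sketch that builds $X^T X$ and $X^T Y$ and solves the system by Gaussian elimination with partial pivoting instead of forming the inverse explicitly; the function name, the singularity tolerance of 1e-12, and the data are assumptions for illustration.

```python
def ols_matrix(X, y):
    """Solve the normal equations (X^T X) b = X^T y by Gaussian elimination."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X^T X | X^T y]
    for col in range(k):
        # Partial pivoting: use the row with the largest entry in this column
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular (perfect multicollinearity)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, k):
            f = a[r][col] / a[col][col]
            for c in range(col, k + 1):
                a[r][c] -= f * a[col][c]
    # Back substitution on the upper-triangular system
    b = [0.0] * k
    for i in range(k - 1, -1, -1):
        b[i] = (a[i][k] - sum(a[i][j] * b[j] for j in range(i + 1, k))) / a[i][i]
    return b

# Hypothetical data on Y = 1 + 2*X1 - 3*X2; the first column of X is the constant
x1s = [1, 2, 3, 4, 5]
x2s = [2, 1, 4, 3, 6]
X = [[1, a, c] for a, c in zip(x1s, x2s)]
y = [1 + 2 * a - 3 * c for a, c in zip(x1s, x2s)]
print(ols_matrix(X, y))
```

In practice a library routine such as NumPy's `numpy.linalg.lstsq` is numerically safer than forming $X^T X$ at all.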

- 49. MATRIX ALGEBRA: SUMMARY A vector is a collection of n numbers or elements, collected either in

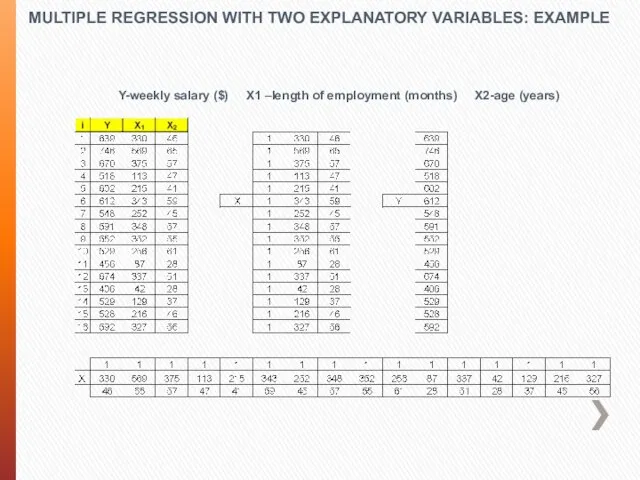

- 50. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE Data for weekly salary based upon the length of

- 51. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE Y: weekly salary (dollars); X1: length of employment (months); X2: age

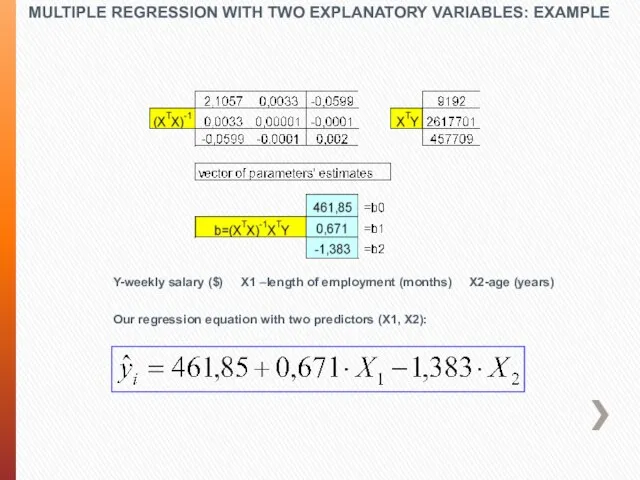

- 52. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE

- 53. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE Y: weekly salary (dollars); X1: length of employment (months); X2: age

- 54. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE These are our data points in 3-dimensional space (graph

- 55. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE Data points with the regression surface (Statistica 6.0)

- 56. MULTIPLE REGRESSION WITH TWO EXPLANATORY VARIABLES: EXAMPLE Data points with the regression surface (Statistica 6.0) after

- 57. There are times when a variable of interest in a regression cannot possibly be considered quantitative.

- 58. If a large sample size is not possible, a dummy variable can be employed to introduce

- 59. For example, a male could be designated with the code 0 and a female could be

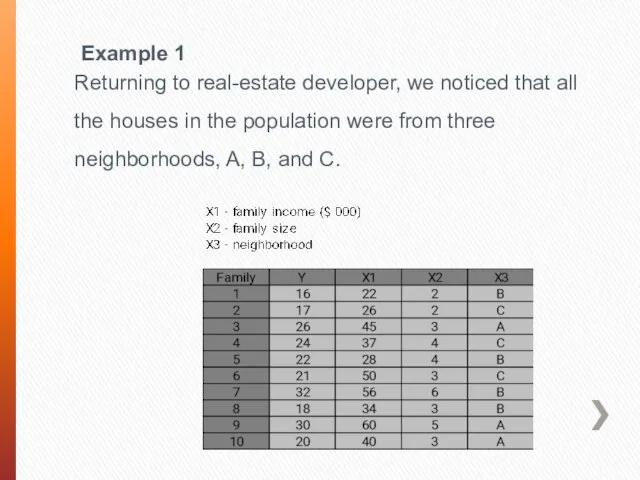

- 60. Example 1 Returning to the real-estate developer, we note that all the houses in the population were

- 61. Using these data, we can construct the necessary dummy variables and determine whether they contribute significantly

- 62. However, this type of coding has many problems. First, because 0

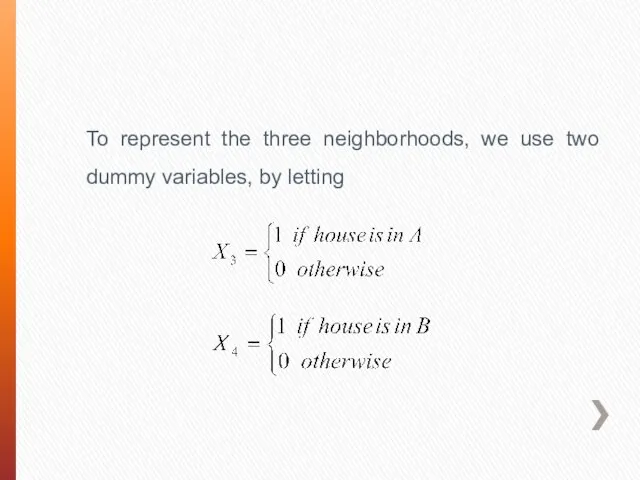

- 63. To represent the three neighborhoods, we use two dummy variables, letting

- 64. What happened to neighborhood C? It is not necessary to develop a third dummy variable. It
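
With three neighborhoods, two 0/1 dummies are enough: C is the baseline and is coded (0, 0). The slide's own variable names are not visible in the text, so `X_A` and `X_B` below are hypothetical names in a minimal sketch of this coding.

```python
def neighborhood_dummies(neighborhood):
    """Hypothetical coding (X_A, X_B); neighborhood C is the baseline (0, 0)."""
    if neighborhood not in ("A", "B", "C"):
        raise ValueError(f"unknown neighborhood: {neighborhood!r}")
    x_a = 1 if neighborhood == "A" else 0
    x_b = 1 if neighborhood == "B" else 0
    return x_a, x_b

for hood in ("A", "B", "C"):
    print(hood, neighborhood_dummies(hood))
```

Under this coding, the coefficient on X_A measures the average difference between neighborhood A and the baseline C, other predictors held fixed.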

- 65. Why? One predictor variable is a linear combination (including a constant term) of one or more

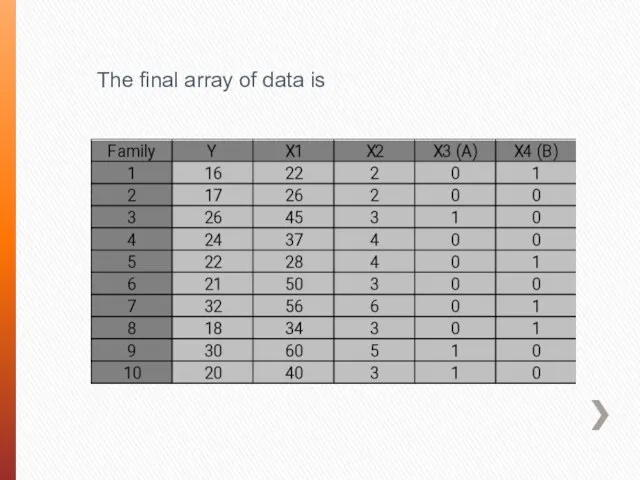
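
The linear combination can be made explicit. If a third dummy X_C were added, then X_A + X_B + X_C = 1 for every house, which is exactly the constant (intercept) column, so the columns of X are linearly dependent. Since rank($X^T X$) = rank(X), $X^T X$ would then be singular and could not be inverted. This sketch computes the rank with exact fractions on a hypothetical list of neighborhoods.

```python
from fractions import Fraction

def matrix_rank(rows):
    """Rank of a small matrix by exact Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        pivot = next((i for i in range(rank, n_rows) if m[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column: it depends on earlier columns
        m[rank], m[pivot] = m[pivot], m[rank]
        for i in range(n_rows):
            if i != rank and m[i][col] != 0:
                f = m[i][col] / m[rank][col]
                m[i] = [a - f * b for a, b in zip(m[i], m[rank])]
        rank += 1
        if rank == n_rows:
            break
    return rank

hoods = ["A", "B", "C", "A", "C", "B"]  # hypothetical houses
# Columns: constant, X_A, X_B, X_C (the "dummy variable trap")
with_trap = [[1, int(h == "A"), int(h == "B"), int(h == "C")] for h in hoods]
# Columns: constant, X_A, X_B (C is the baseline)
without_trap = [[1, int(h == "A"), int(h == "B")] for h in hoods]
print(matrix_rank(with_trap), "of", len(with_trap[0]), "columns")
print(matrix_rank(without_trap), "of", len(without_trap[0]), "columns")
```

Dropping one category (here C) restores full column rank.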

- 66. The final array of data is

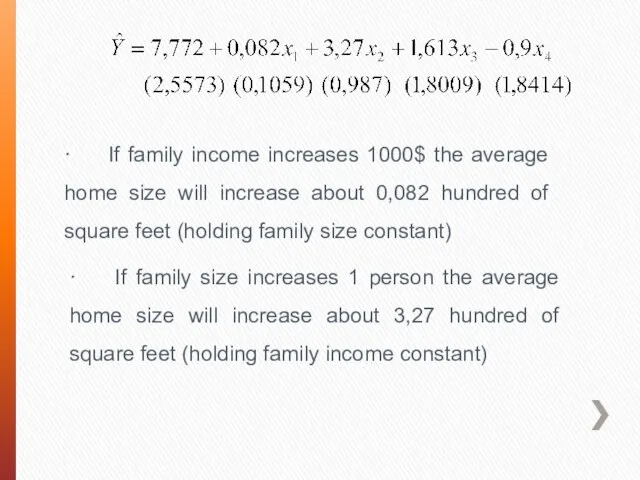

- 67. · If family income increases by $1,000, the average home size will increase by about 0.082 hundred square feet

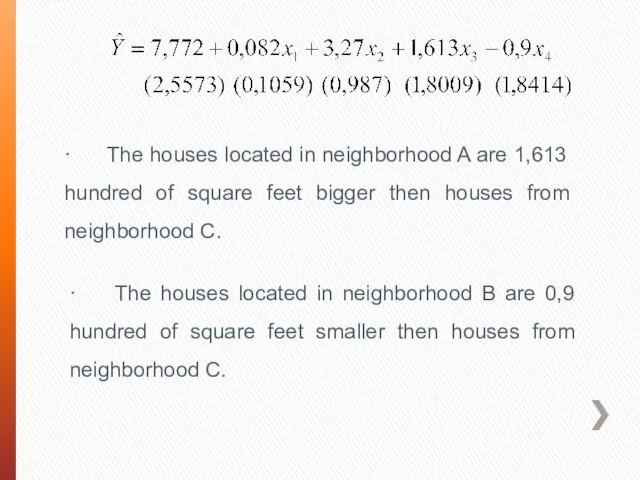

- 68. · The houses located in neighborhood A are 1.613 hundred square feet bigger than houses

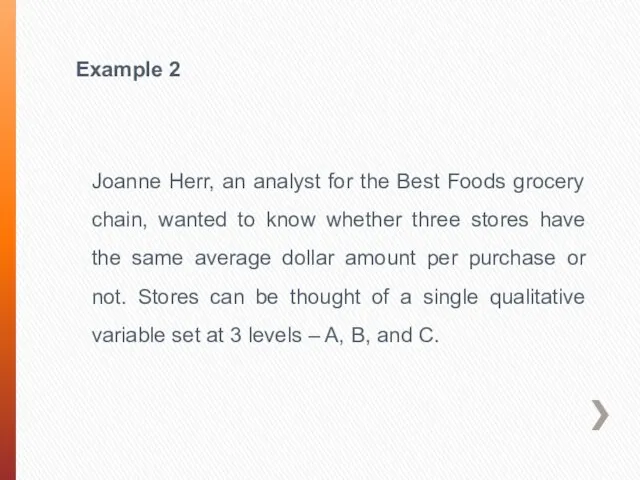

- 69. Example 2 Joanne Herr, an analyst for the Best Foods grocery chain, wanted to know whether

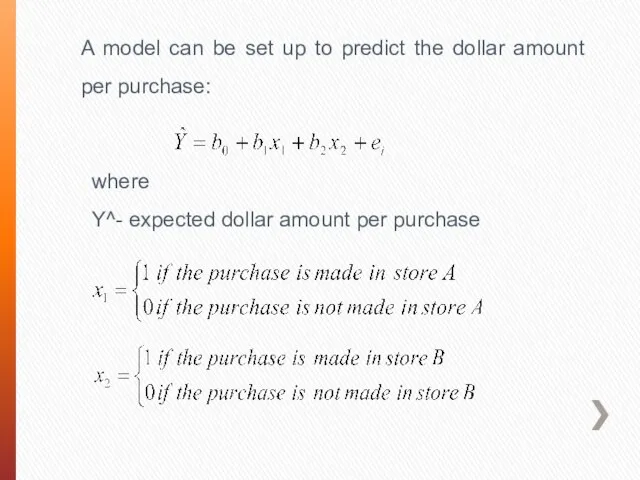

- 70. A model can be set up to predict the dollar amount per purchase: where $\hat{Y}$ = expected

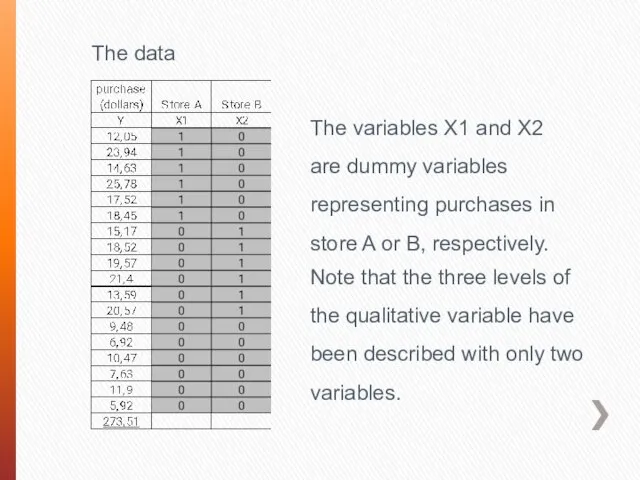

- 71. The data. The variables X1 and X2 are dummy variables representing purchases in store A or

- 72. The regression equation

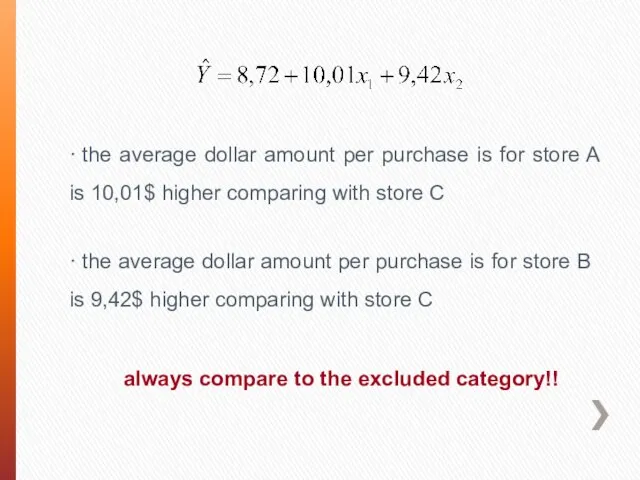

- 73. · The average dollar amount per purchase for store A is $10.01 higher compared with
