Contents

- 2. Dave Lewis, Ph.D.: President, David D. Lewis Consulting; Co-founder, TREC Legal Track; Testifying expert in Kleen

- 3. Kara M. Kirkeby, Esq.: Manager of Document Review Services for Kroll Ontrack; previously managed document reviews

- 4. Discussion Overview: What is Technology Assisted Review (TAR)? · Document Evaluation · Putting TAR into Practice · Conclusion

- 5. What is Technology Assisted Review?

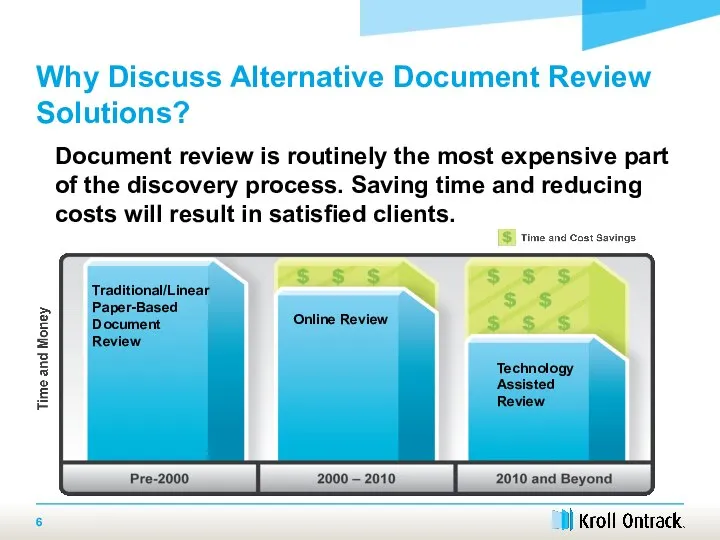

- 6. Why Discuss Alternative Document Review Solutions? Document review is routinely the most expensive part of the

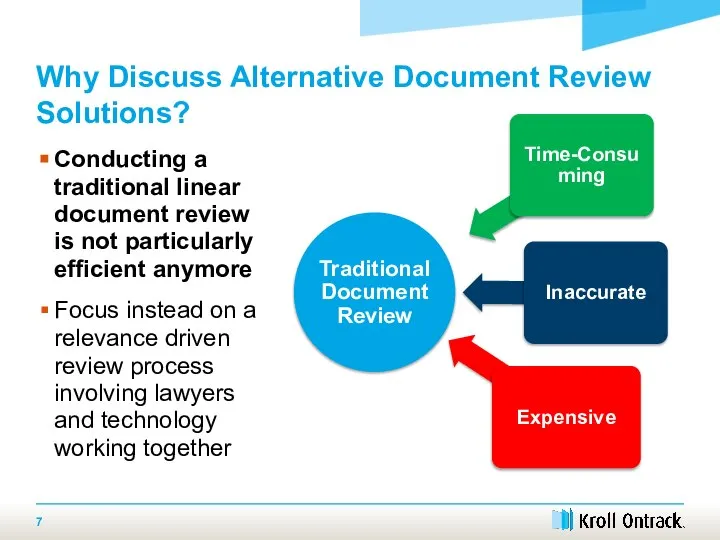

- 7. Why Discuss Alternative Document Review Solutions? Conducting a traditional linear document review is not particularly efficient

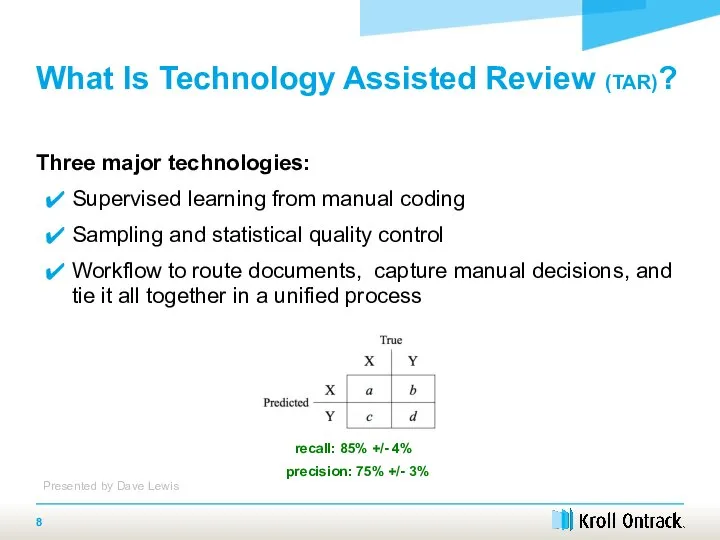

- 8. What Is Technology Assisted Review (TAR)? Three major technologies: supervised learning from manual coding; sampling and

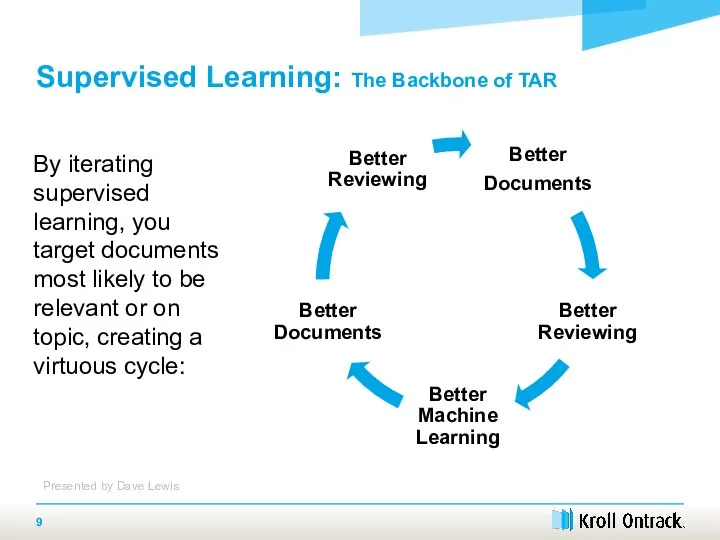

- 9. Supervised Learning: The Backbone of TAR. By iterating supervised learning, you target documents most likely to

- 10. Software learns to imitate human actions. For e-discovery, this means learning classifiers by imitating human
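
To make "learning classifiers by imitating human coding" concrete, here is a minimal sketch that assumes a multinomial naive Bayes model with Laplace smoothing and plain whitespace tokenization. The slides do not say which learning algorithm any TAR product uses; `train_naive_bayes`, `score`, and the toy documents are hypothetical and exist only for illustration.

```python
import math
from collections import Counter


def tokenize(text: str) -> list:
    # Lowercased whitespace tokenization (an assumption; real systems do more).
    return text.lower().split()


def train_naive_bayes(docs, labels):
    """Learn word statistics from docs a human coded responsive (True) or not (False)."""
    counts = {True: Counter(), False: Counter()}
    for text, label in zip(docs, labels):
        counts[label].update(tokenize(text))
    doc_totals = Counter(labels)
    vocab = set(counts[True]) | set(counts[False])
    return counts, doc_totals, vocab


def score(model, text: str) -> float:
    """Log-odds that a document is responsive; positive means the model leans 'responsive'."""
    counts, doc_totals, vocab = model
    # Laplace-smoothed ratio of class priors.
    log_odds = math.log((doc_totals[True] + 1) / (doc_totals[False] + 1))
    pos_total = sum(counts[True].values()) + len(vocab)
    neg_total = sum(counts[False].values()) + len(vocab)
    for token in tokenize(text):
        if token in vocab:  # words never seen in training carry no evidence
            log_odds += math.log((counts[True][token] + 1) / pos_total)
            log_odds -= math.log((counts[False][token] + 1) / neg_total)
    return log_odds


# Toy coding decisions made by a knowledgeable reviewer (hypothetical data).
docs = ["price fixing meeting with competitor", "lunch menu for friday",
        "agreed price increase with competitor", "holiday party schedule"]
labels = [True, False, True, False]
model = train_naive_bayes(docs, labels)
print(score(model, "competitor price call") > 0)  # True
print(score(model, "holiday lunch") > 0)          # False
```

The classifier only ever sees the reviewer's calls. It "imitates" them by weighting words that were more frequent in documents the human marked responsive.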

- 11. Text REtrieval Conference (“TREC”), hosted by the National Institute of Standards and Technology (“NIST”) since 1992. Evaluations

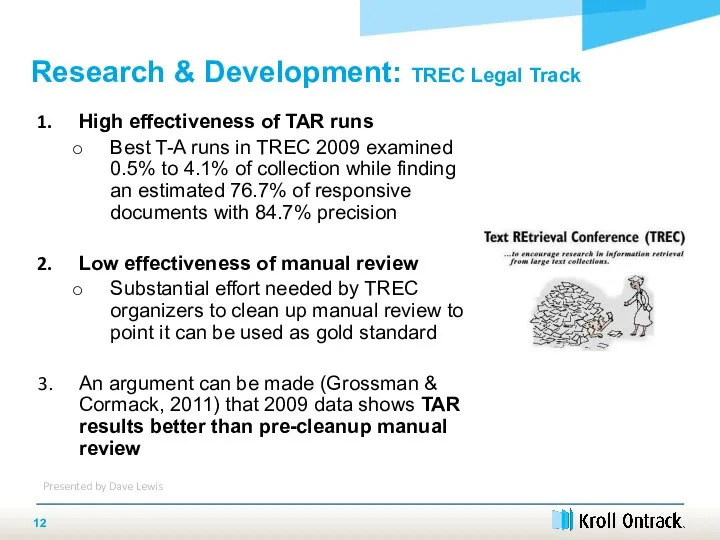

- 12. High effectiveness of TAR runs. The best T-A runs in TREC 2009 examined 0.5% to 4.1% of

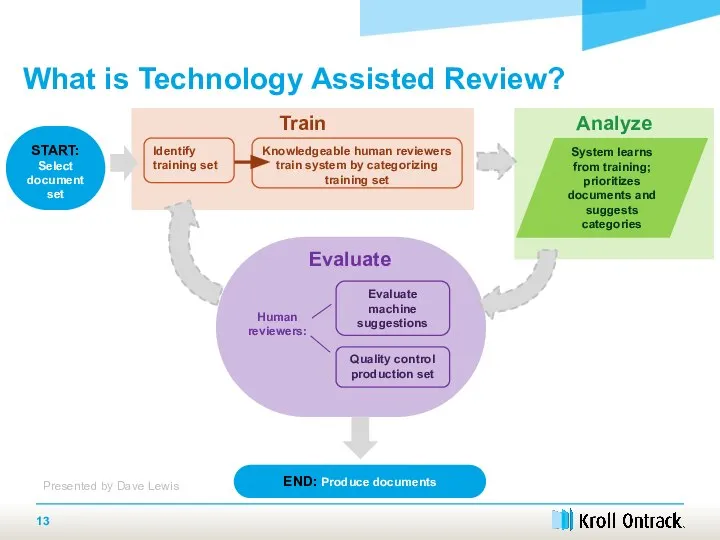

- 13. What is Technology Assisted Review? Diagram stages: Analyze, Train. START: select document set; identify training set; knowledgeable human

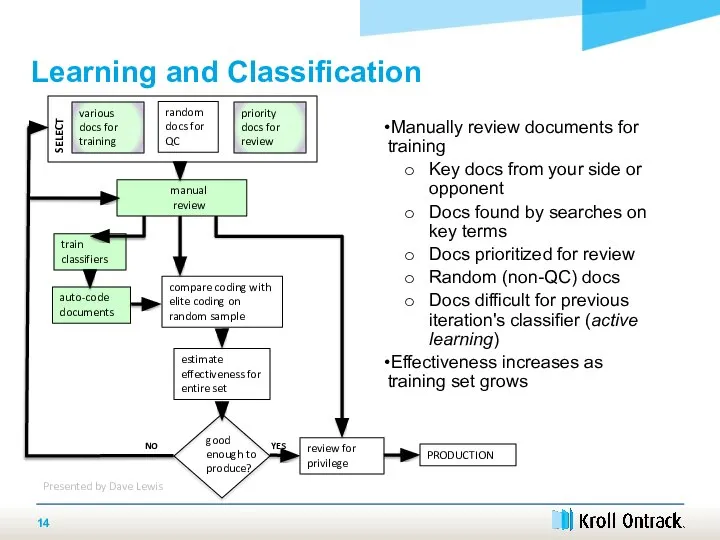

- 14. SELECT. Manually review documents for training: key docs from your side or opponent; docs found by

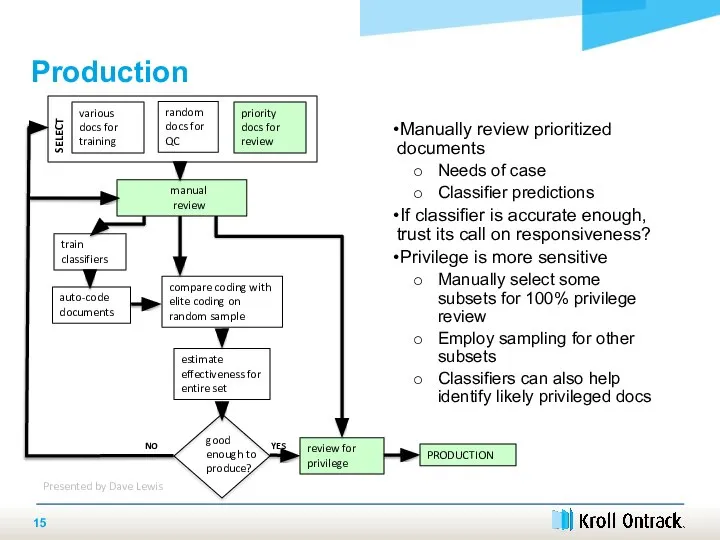

- 15. Manually review prioritized documents: needs of the case; classifier predictions. If the classifier is accurate enough, trust its

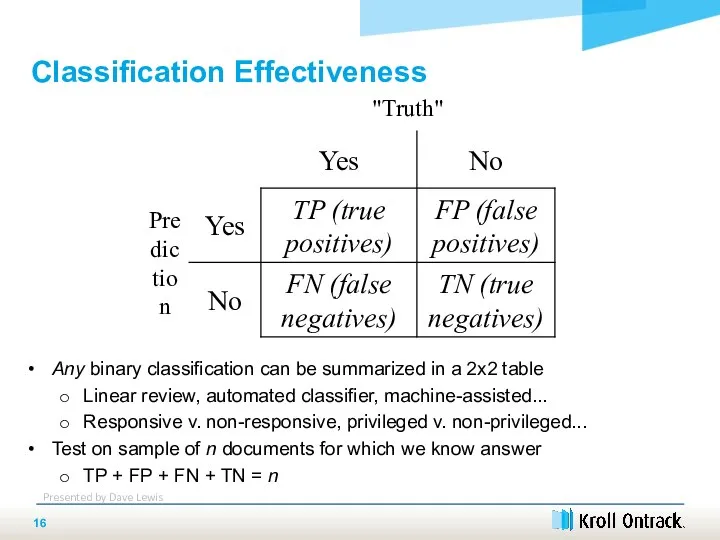
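
Prioritized review amounts to sorting the not-yet-reviewed documents by classifier score and handing the top of the list to human reviewers. The sketch below assumes scores are already available as a dictionary; `next_batch` and the sample scores are hypothetical.

```python
def next_batch(scores: dict, reviewed: set, batch_size: int) -> list:
    """Pick the unreviewed documents the classifier ranks highest."""
    candidates = [doc_id for doc_id in scores if doc_id not in reviewed]
    # Highest score first; ties keep their original order because sort is stable.
    candidates.sort(key=lambda doc_id: scores[doc_id], reverse=True)
    return candidates[:batch_size]


scores = {"d1": 0.20, "d2": 0.90, "d3": 0.70, "d4": 0.95}
print(next_batch(scores, reviewed={"d4"}, batch_size=2))  # ['d2', 'd3']
```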

- 16. Any binary classification can be summarized in a 2x2 table: linear review, automated classifier, machine-assisted... Responsive

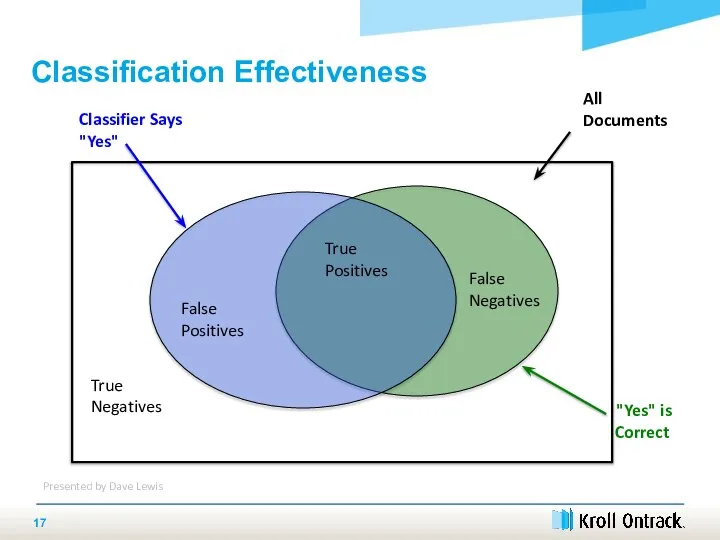

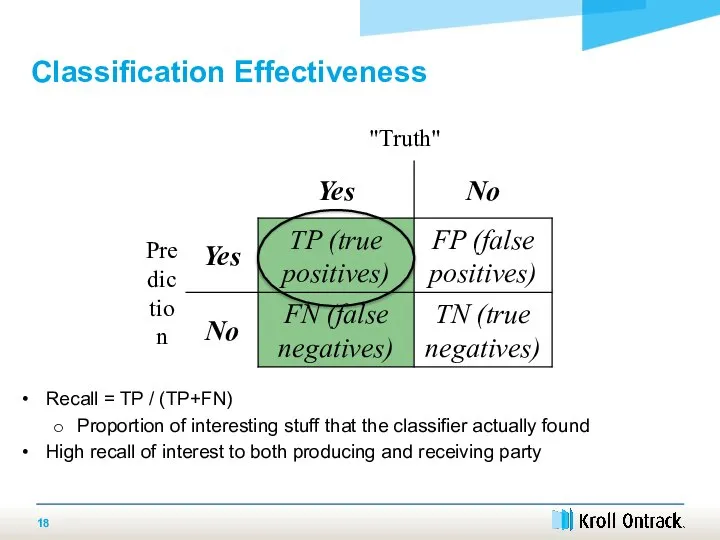

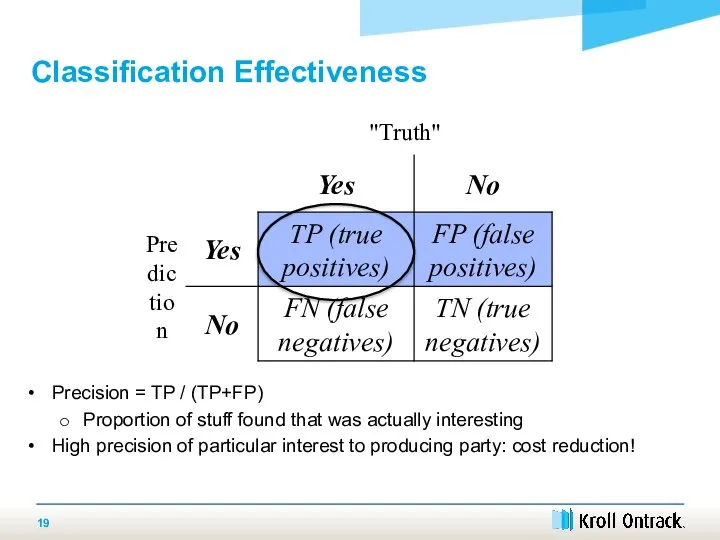

- 17. Diagram labels: True Negatives; False Positives; True Positives; False Negatives; Classifier Says "Yes"; "Yes" is Correct; All Documents
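
The four regions of the 2x2 table can be tallied directly from paired decisions: what the classifier (or reviewer) said, and what the correct answer is. A minimal sketch; the function name `confusion_matrix` is illustrative, not taken from the slides.

```python
def confusion_matrix(predicted: list, actual: list) -> dict:
    """Tally the four cells of the 2x2 table for a binary responsiveness call."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    cells = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for says_yes, is_yes in zip(predicted, actual):
        if says_yes and is_yes:
            cells["TP"] += 1  # said "yes", and "yes" is correct
        elif says_yes:
            cells["FP"] += 1  # said "yes", but the document is not responsive
        elif is_yes:
            cells["FN"] += 1  # missed a responsive document
        else:
            cells["TN"] += 1  # correctly left out
    return cells


print(confusion_matrix([True, True, False, False, True],
                       [True, False, True, False, True]))
# {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 1}
```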

- 18. Recall = TP / (TP + FN): the proportion of interesting stuff that the classifier actually found. High recall

- 19. Precision = TP / (TP + FP): the proportion of stuff found that was actually interesting. High precision of
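
A worked example of the two formulas: suppose a review finds 80 responsive documents (TP), wrongly flags 40 non-responsive ones (FP), and misses 20 responsive ones (FN). Returning 0.0 when a denominator is zero is a convention chosen for this sketch, not something the slides specify.

```python
def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN): share of the interesting documents that were found."""
    return tp / (tp + fn) if tp + fn else 0.0


def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP): share of the found documents that were interesting."""
    return tp / (tp + fp) if tp + fp else 0.0


print(recall(80, 20))               # 0.8
print(round(precision(80, 40), 3))  # 0.667
```

Here the review found 80% of what it should have, but one in three of the documents it flagged was not actually responsive.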

- 20. Seminal 1985 study by Blair & Maron: review for documents relevant to 51 requests related to

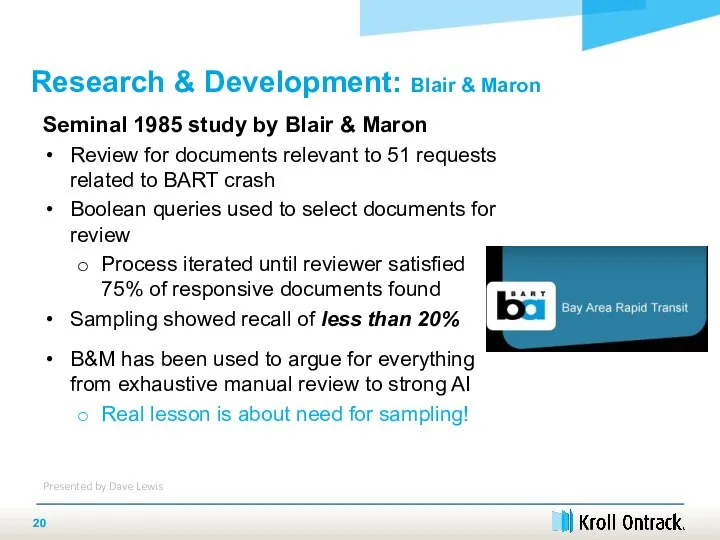

- 21. We want to know effectiveness without manually reviewing everything. So: randomly sample the documents; manually classify the

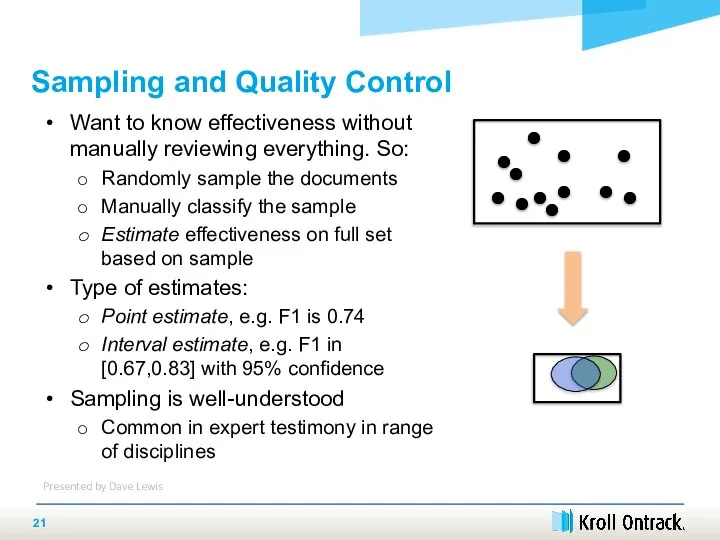

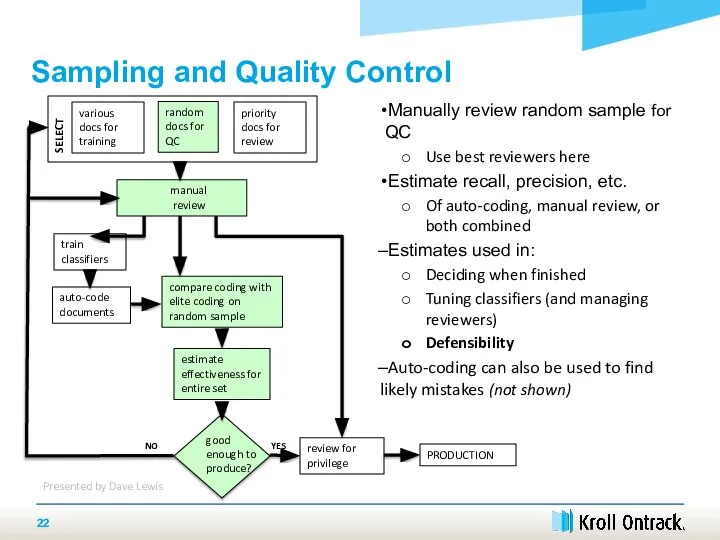
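
The sampling idea can be sketched as follows: draw a simple random sample, have a human code only those documents, and compute prevalence, recall, and precision on the sample as estimates for the whole collection. This assumes simple random sampling and point estimates only; a real protocol would also report confidence intervals, which are not shown. `estimate_from_sample` and `manual_label` are hypothetical names.

```python
import random


def estimate_from_sample(doc_ids, predicted, manual_label, sample_size, seed=0):
    """Estimate effectiveness by manually coding only a simple random sample."""
    rng = random.Random(seed)  # fixed seed so the QC sample can be reproduced
    sample = rng.sample(doc_ids, sample_size)
    tp = fp = fn = 0
    for doc_id in sample:
        says_yes = predicted[doc_id]
        is_yes = manual_label(doc_id)  # the human reviewer's call on the sampled doc
        if says_yes and is_yes:
            tp += 1
        elif says_yes:
            fp += 1
        elif is_yes:
            fn += 1
    return {
        "prevalence": (tp + fn) / sample_size,
        "recall": tp / (tp + fn) if tp + fn else float("nan"),
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
    }


# Hypothetical collection: docs 0-19 are responsive; the classifier flags docs 10-39.
doc_ids = list(range(100))
predicted = {d: 10 <= d < 40 for d in doc_ids}
manual_label = lambda d: d < 20

print(estimate_from_sample(doc_ids, predicted, manual_label, sample_size=30))
```

When `sample_size` equals the collection size, the estimates equal the true values (prevalence 0.2, recall 0.5, precision 1/3). Smaller samples trade accuracy of the estimate for less manual review.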

- 22. SELECT: various docs for training; random docs for QC; priority docs for review. Manual review. Train.
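
The select / manual review / train cycle in the diagram can be sketched as a loop. This is an assumption-laden toy: it scores documents with a crude word log-ratio (relevance feedback), not a production classifier, and it omits the random QC sample. `tar_loop`, `word_weights`, and the toy collection are hypothetical.

```python
import math
from collections import Counter


def word_weights(collection, labels):
    """Smoothed log-ratio of each word's count in responsive vs. non-responsive coded docs."""
    pos, neg = Counter(), Counter()
    for doc_id, is_responsive in labels.items():
        (pos if is_responsive else neg).update(collection[doc_id].lower().split())
    return {w: math.log((pos[w] + 1) / (neg[w] + 1)) for w in set(pos) | set(neg)}


def tar_loop(collection, reviewer, seed_ids, batch_size, rounds):
    """Iterate: manual review -> train -> select priority docs -> manual review ..."""
    labels = {doc_id: reviewer(doc_id) for doc_id in seed_ids}  # training set
    for _ in range(rounds):
        weights = word_weights(collection, labels)  # train on all coding so far
        unreviewed = [d for d in collection if d not in labels]
        if not unreviewed:
            break
        # Select the documents the current model ranks as most likely responsive.
        unreviewed.sort(key=lambda d: sum(weights.get(w, 0.0)
                                          for w in collection[d].lower().split()),
                        reverse=True)
        for doc_id in unreviewed[:batch_size]:
            labels[doc_id] = reviewer(doc_id)  # manual review of priority docs
    return labels


collection = {"r1": "price fixing competitor", "r2": "competitor price call",
              "r3": "price agreement", "n1": "lunch menu",
              "n2": "party schedule", "n3": "lunch party"}
reviewer = lambda d: d.startswith("r")  # stands in for the human reviewer
labels = tar_loop(collection, reviewer, seed_ids=["r1", "n1"], batch_size=1, rounds=2)
print(sorted(d for d, v in labels.items() if v))  # ['r1', 'r2', 'r3']
```

Each round retrains on every coding decision made so far, so documents found early shape which documents are prioritized next.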

- 23. Putting TAR into Practice

- 24. Barriers to Widespread Adoption. Industry-wide concern: is it defensible? The concern arises from misconceptions about how the

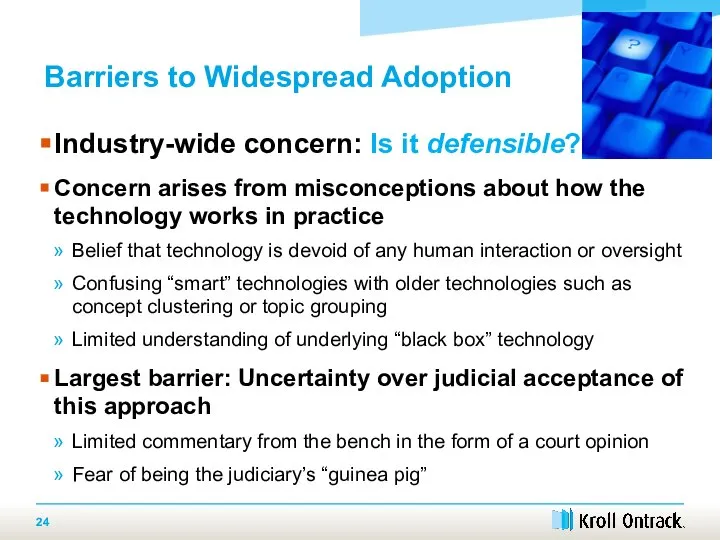

- 25. Developing TAR Case Law: Da Silva Moore v. Publicis Groupe. Class-action suit: parties agreed on a

- 26. Developing TAR Case Law: Kleen Products v. Packaging Corporation of America. Defendants had completed 99% of

- 27. Technology Assisted Review: What It Will Not Do. It will not replace or mimic the nuanced expert

- 28. Technology Assisted Review: What It Can Do. Reduce: time required for document review and administration; number

- 29. TAR Accuracy. TAR must be as accurate as a traditional review. Studies show that computer-aided review

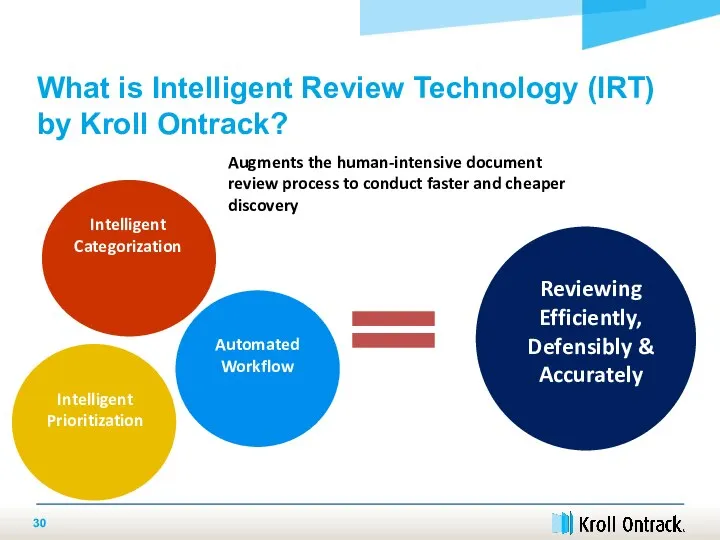

- 30. What is Intelligent Review Technology (IRT) by Kroll Ontrack? Intelligent Prioritization; Intelligent Categorization; Automated Workflow; Reviewing

- 31. Cut off review after prioritization of documents showed a marginal return of responsive documents for a specific number

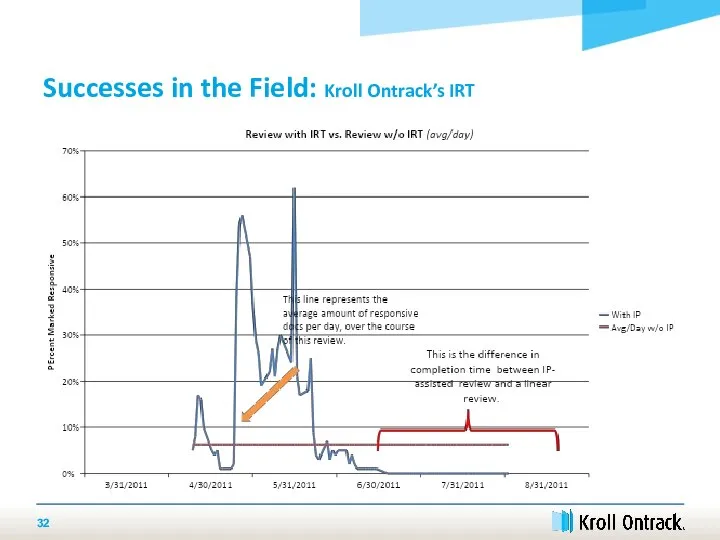
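
The slide does not state the actual cutoff rule, so what follows is a hypothetical stopping rule, not Kroll Ontrack's IRT logic. Review stops once the share of responsive documents per prioritized batch stays below a threshold (`min_yield`) for `patience` consecutive batches. Both parameters and the batch counts are assumptions for illustration.

```python
from typing import List, Optional


def cutoff_batch(responsive_per_batch: List[int], batch_size: int,
                 min_yield: float, patience: int) -> Optional[int]:
    """Index of the batch after which review stops, or None if yield never stays low."""
    low_streak = 0
    for i, hits in enumerate(responsive_per_batch):
        if hits / batch_size < min_yield:
            low_streak += 1  # another batch with marginal return
            if low_streak >= patience:
                return i
        else:
            low_streak = 0  # a productive batch resets the streak
    return None


# Responsive documents found in successive prioritized batches of 50 (hypothetical).
print(cutoff_batch([45, 38, 30, 12, 4, 3, 2], batch_size=50, min_yield=0.1, patience=2))  # 5
```

Requiring several consecutive low-yield batches guards against stopping on a single unlucky batch.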

- 32. Successes in the Field: Kroll Ontrack’s IRT

- 33. Conclusion

- 34. Parting Thoughts. Automated review technology helps lawyers focus on resolution, not discovery, through available

- 35. Q & A
