Содержание

- 2. Target Settings Process ‘group’-based systems Clouds/Datacenters Replicated servers Distributed databases Crash-stop/Fail-stop process failures

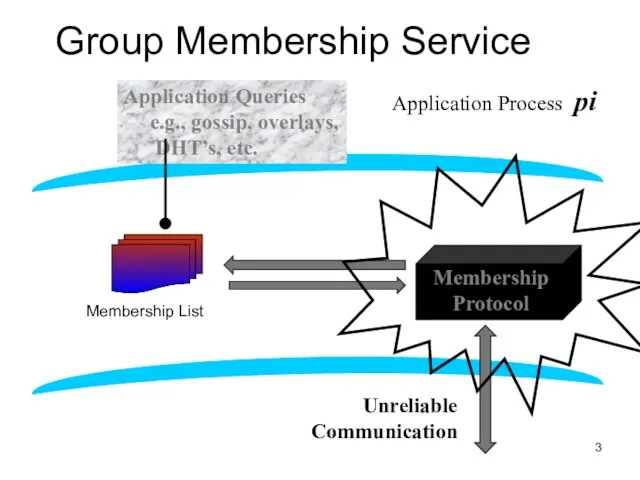

- 3. Group Membership Service Application Queries e.g., gossip, overlays, DHT’s, etc. Membership Protocol Group Membership List joins,

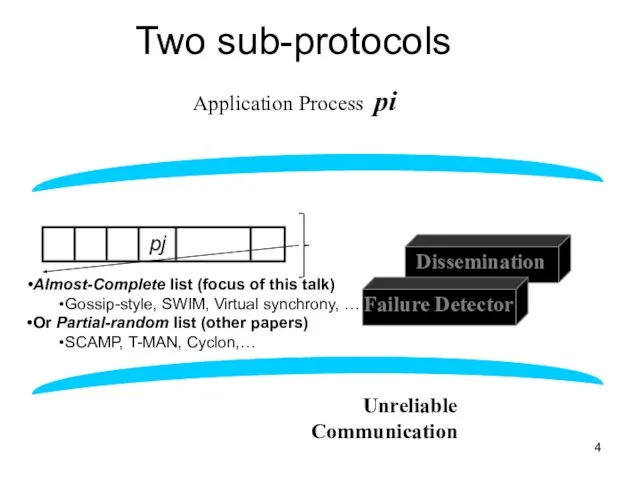

- 4. Two sub-protocols Application Process pi Group Membership List Unreliable Communication Almost-Complete list (focus of this talk)

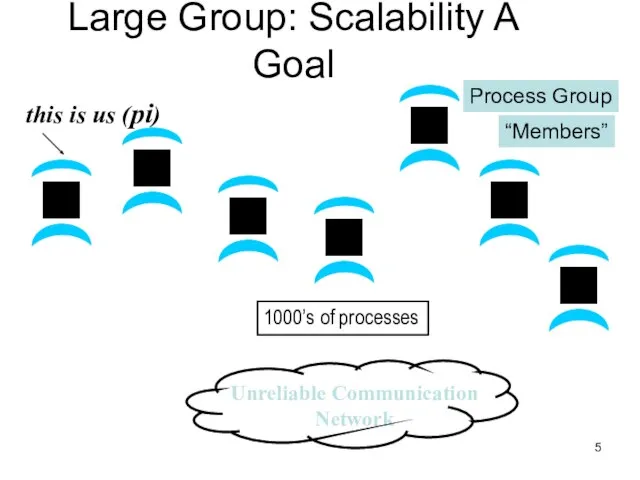

- 5. Large Group: Scalability A Goal this is us (pi) 1000’s of processes Process Group “Members”

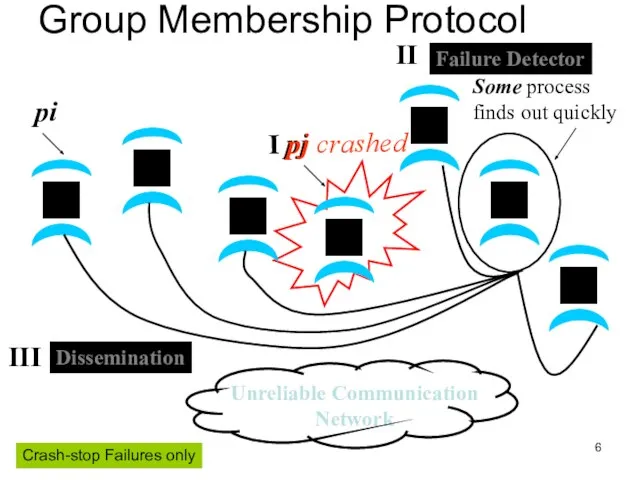

- 6. pj Group Membership Protocol Crash-stop Failures only

- 7. I. pj crashes Nothing we can do about it! A frequent occurrence Common case rather than

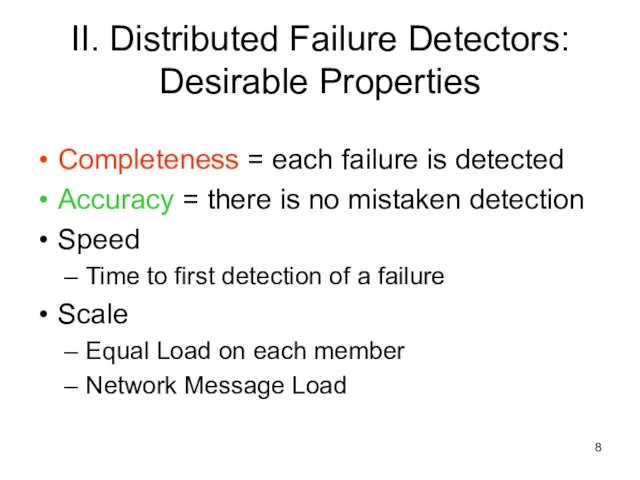

- 8. II. Distributed Failure Detectors: Desirable Properties Completeness = each failure is detected Accuracy = there is

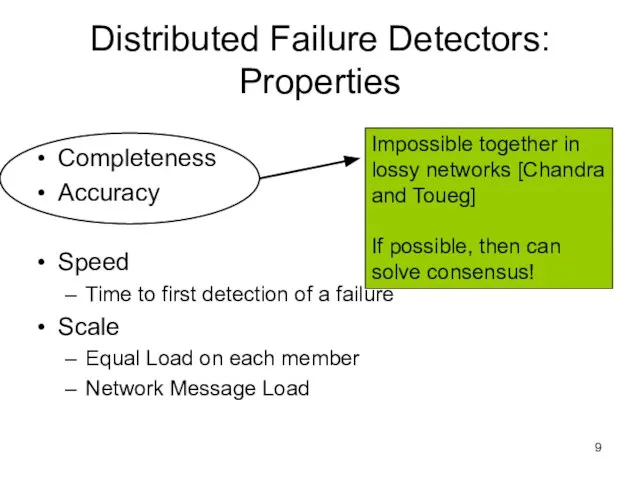

- 9. Distributed Failure Detectors: Properties Completeness Accuracy Speed Time to first detection of a failure Scale Equal

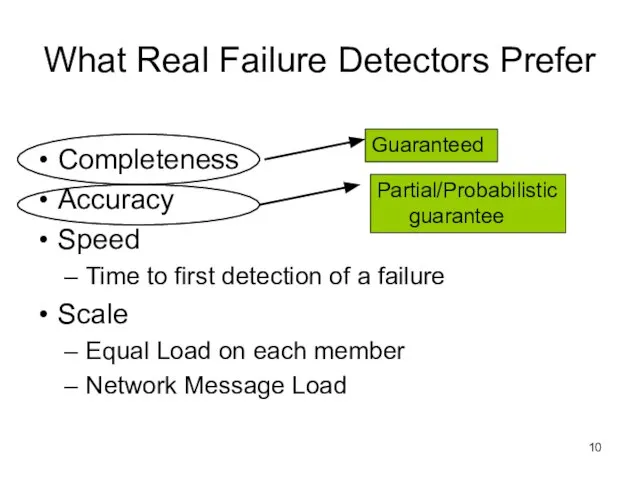

- 10. What Real Failure Detectors Prefer Completeness Accuracy Speed Time to first detection of a failure Scale

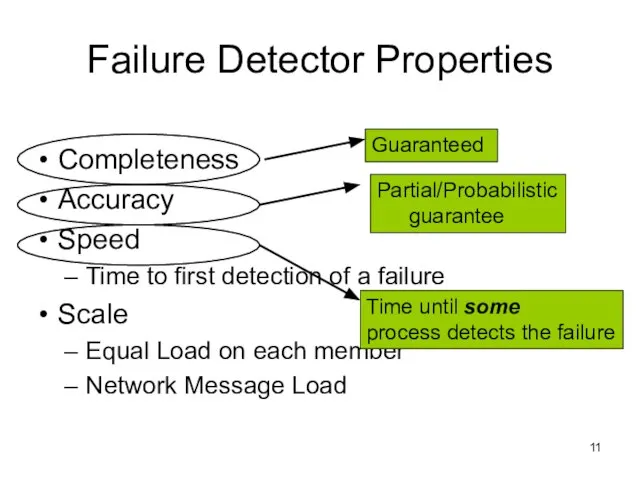

- 11. Failure Detector Properties Completeness Accuracy Speed Time to first detection of a failure Scale Equal Load

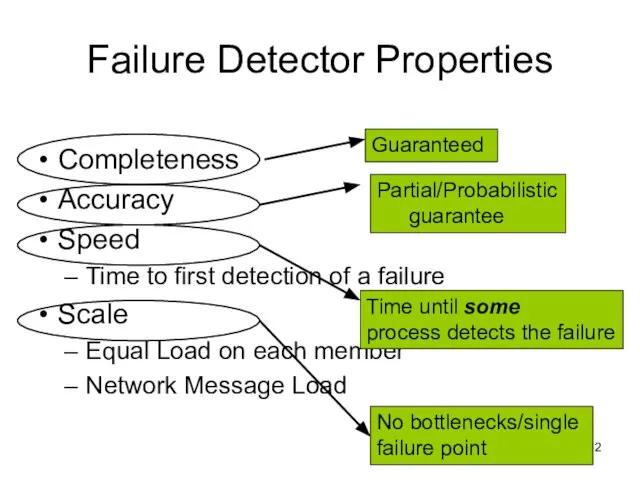

- 12. Failure Detector Properties Completeness Accuracy Speed Time to first detection of a failure Scale Equal Load

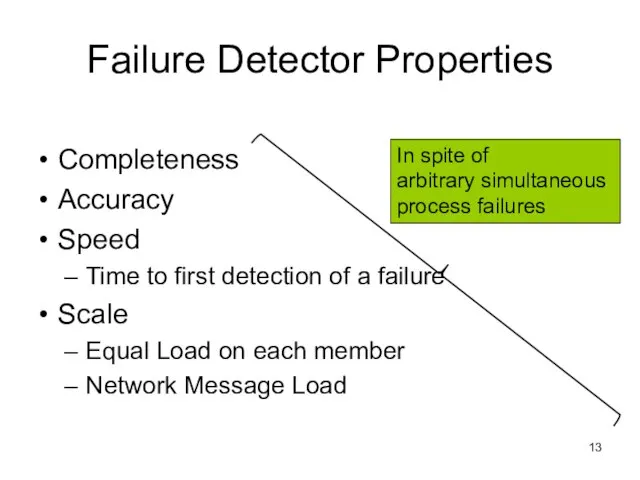

- 13. Failure Detector Properties Completeness Accuracy Speed Time to first detection of a failure Scale Equal Load

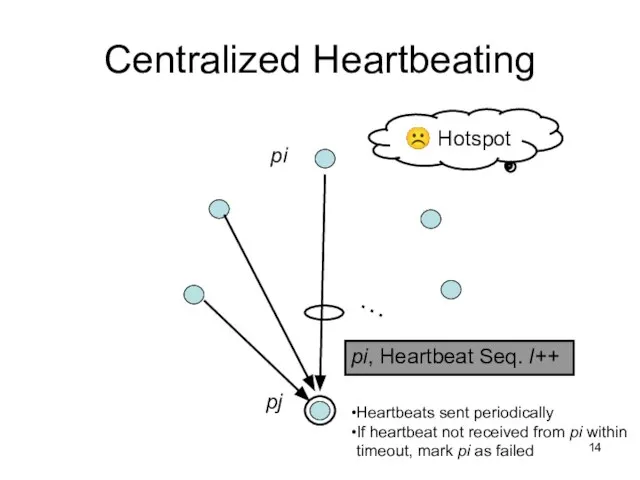

- 14. Centralized Heartbeating … pi, Heartbeat Seq. l++ pi pj Heartbeats sent periodically If heartbeat not received

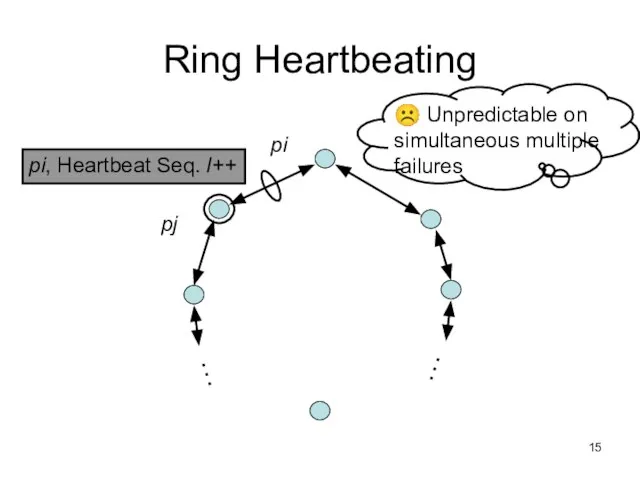

- 15. Ring Heartbeating pi, Heartbeat Seq. l++ pi … … pj

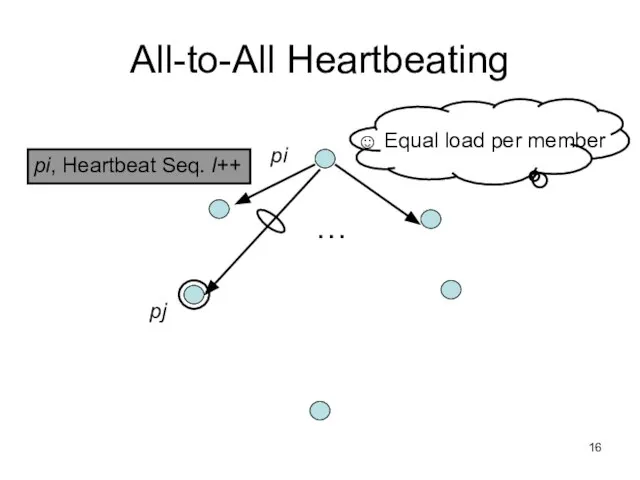

- 16. All-to-All Heartbeating pi, Heartbeat Seq. l++ … pi pj

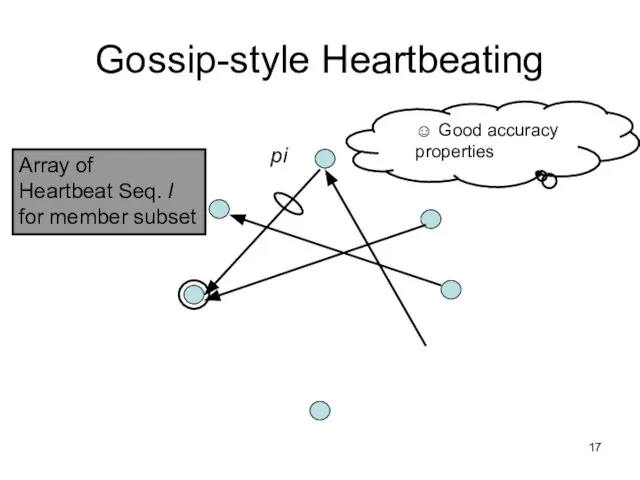

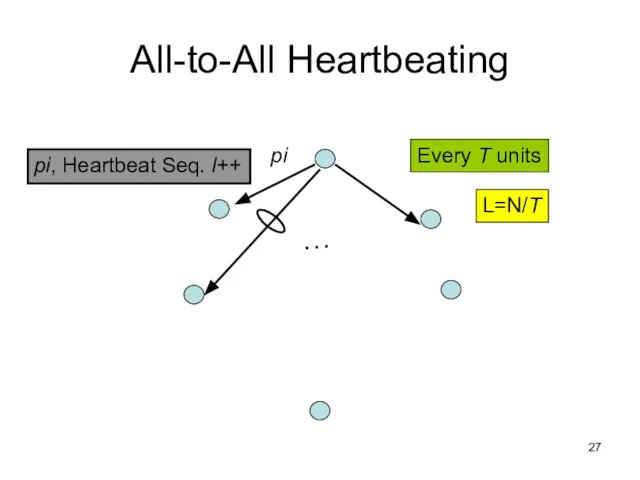

- 17. Gossip-style Heartbeating Array of Heartbeat Seq. l for member subset pi

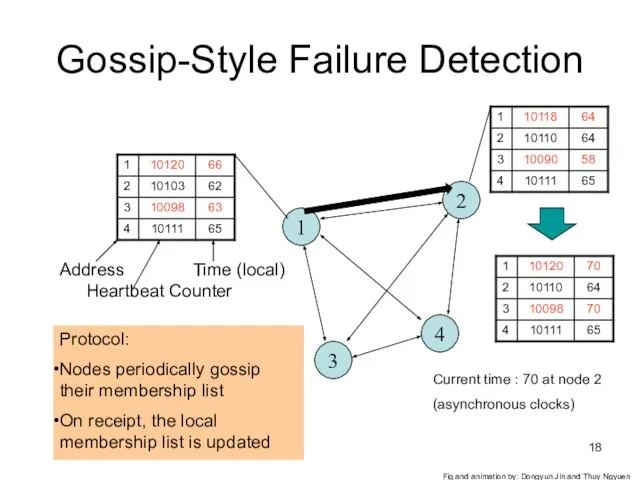

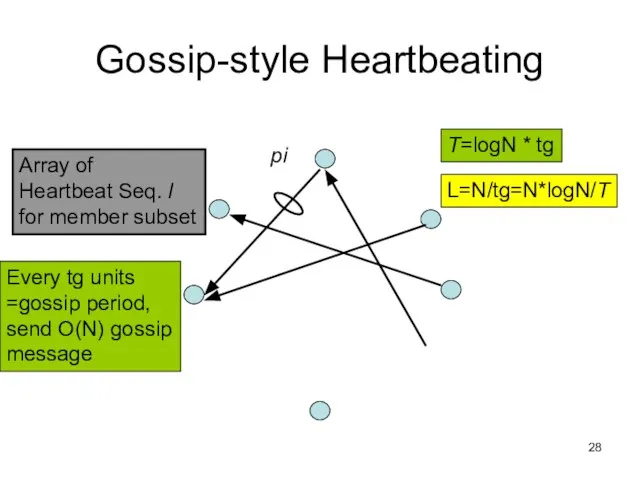

- 18. Gossip-Style Failure Detection 1 2 4 3 Protocol: Nodes periodically gossip their membership list On receipt,

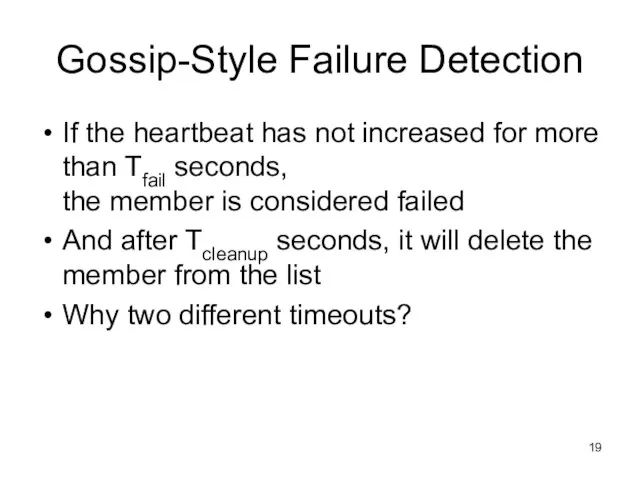

- 19. Gossip-Style Failure Detection If the heartbeat has not increased for more than Tfail seconds, the member

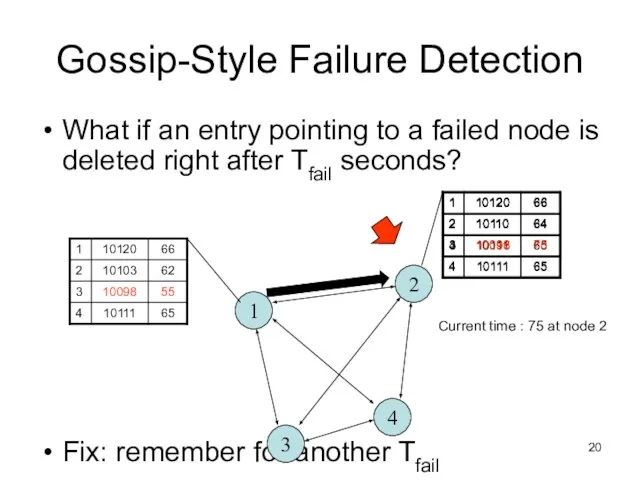

- 20. Gossip-Style Failure Detection What if an entry pointing to a failed node is deleted right after

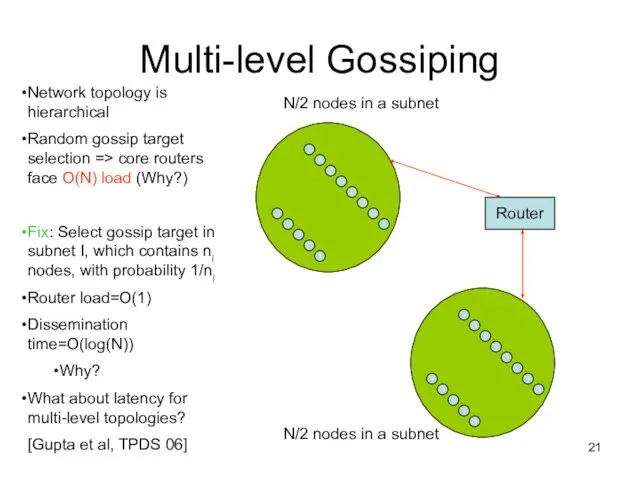

- 21. Multi-level Gossiping Network topology is hierarchical Random gossip target selection => core routers face O(N) load

- 22. Analysis/Discussion What happens if gossip period Tgossip is decreased? A single heartbeat takes O(log(N)) time to

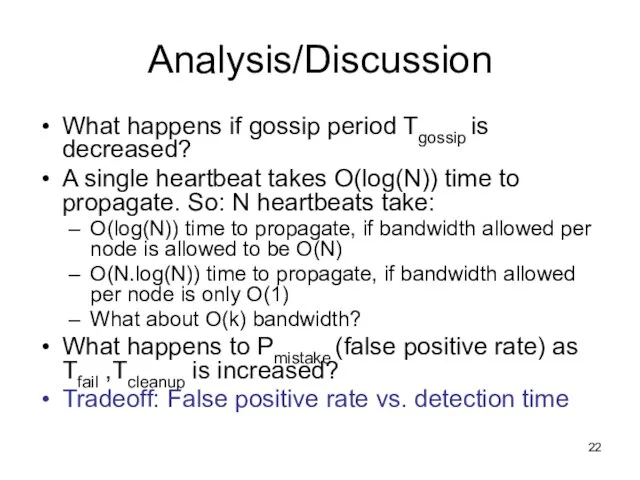

- 23. Simulations As # members increases, the detection time increases As requirement is loosened, the detection time

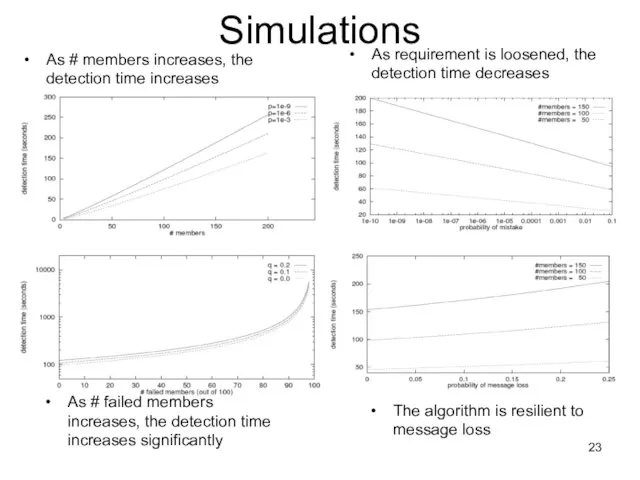

- 24. Failure Detector Properties … Completeness Accuracy Speed Time to first detection of a failure Scale Equal

- 25. …Are application-defined Requirements Completeness Accuracy Speed Time to first detection of a failure Scale Equal Load

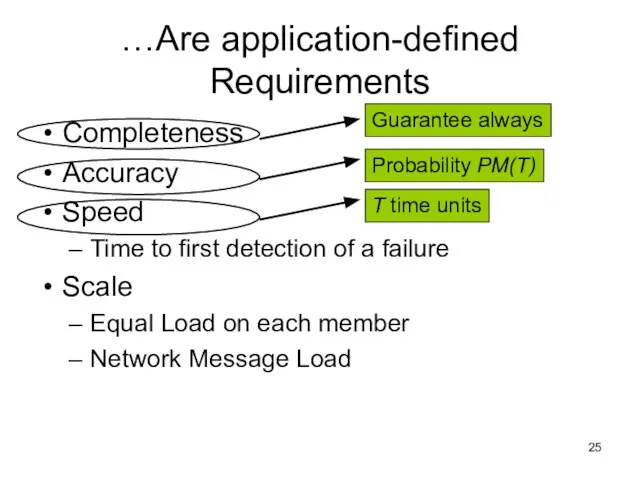

- 26. …Are application-defined Requirements Completeness Accuracy Speed Time to first detection of a failure Scale Equal Load

- 27. All-to-All Heartbeating pi, Heartbeat Seq. l++ … pi Every T units L=N/T

- 28. Gossip-style Heartbeating Array of Heartbeat Seq. l for member subset pi Every tg units =gossip period,

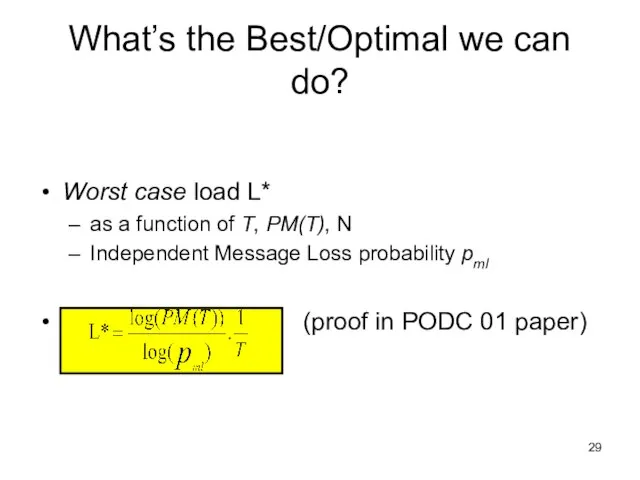

- 29. Worst case load L* as a function of T, PM(T), N Independent Message Loss probability pml

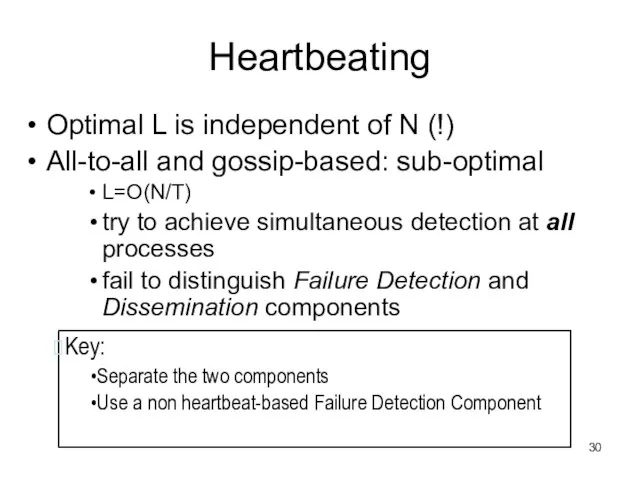

- 30. Heartbeating Optimal L is independent of N (!) All-to-all and gossip-based: sub-optimal L=O(N/T) try to achieve

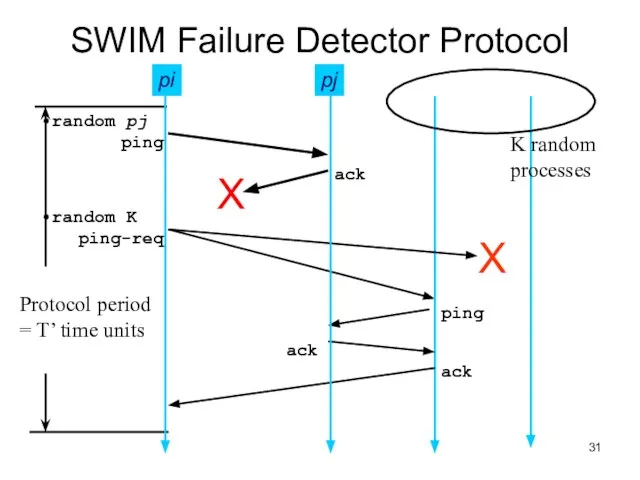

- 31. SWIM Failure Detector Protocol pj

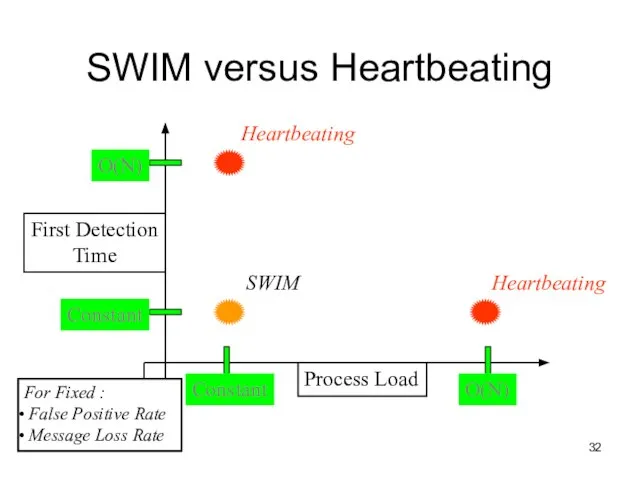

- 32. SWIM versus Heartbeating Process Load First Detection Time Constant Constant O(N) O(N) SWIM For Fixed :

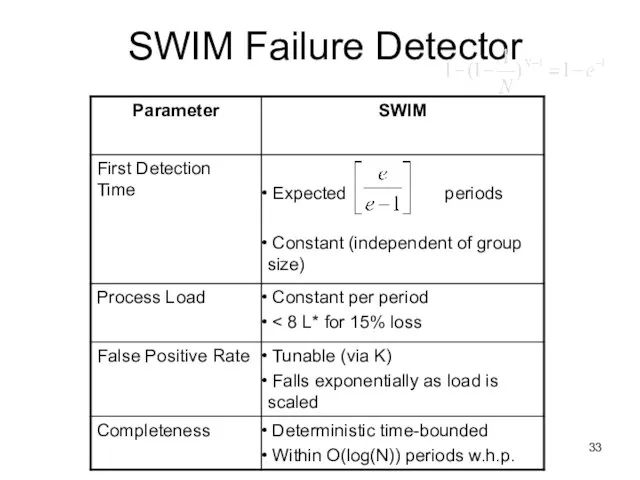

- 33. SWIM Failure Detector

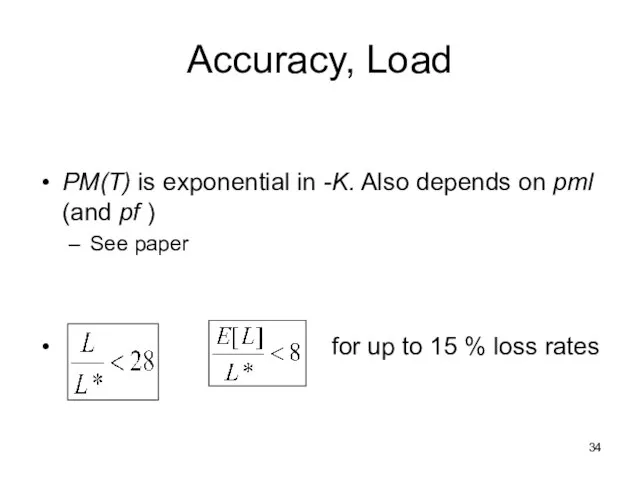

- 34. Accuracy, Load PM(T) is exponential in -K. Also depends on pml (and pf ) See paper

- 35. Prob. of being pinged in T’= E[T ] = Completeness: Any alive member detects failure Eventually

- 36. III. Dissemination HOW ?

- 37. Dissemination Options Multicast (Hardware / IP) unreliable multiple simultaneous multicasts Point-to-point (TCP / UDP) expensive Zero

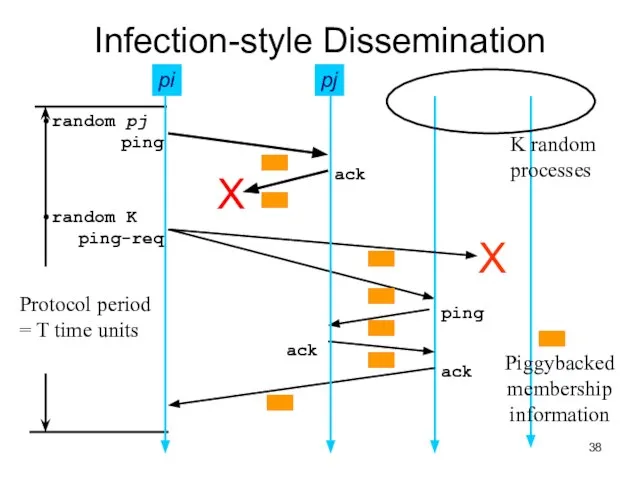

- 38. Infection-style Dissemination pj K random processes

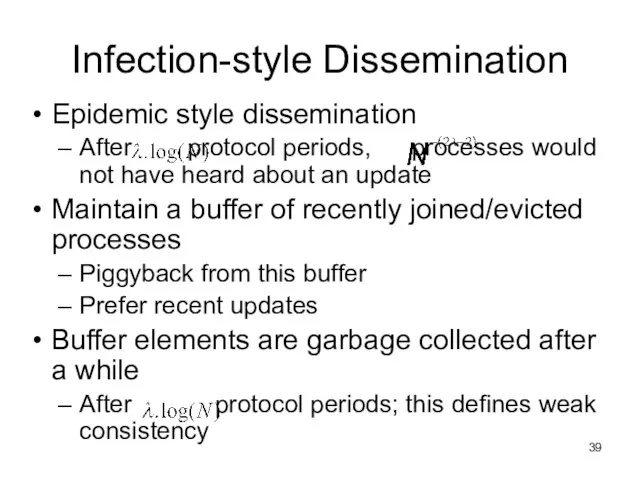

- 39. Infection-style Dissemination Epidemic style dissemination After protocol periods, processes would not have heard about an update

- 40. Suspicion Mechanism False detections, due to Perturbed processes Packet losses, e.g., from congestion Indirect pinging may

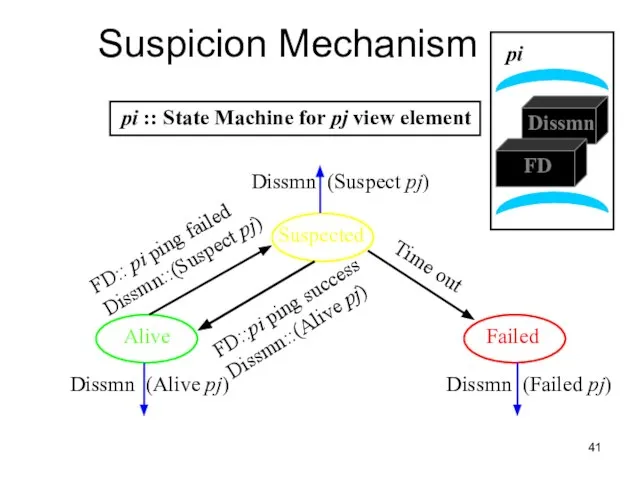

- 41. Suspicion Mechanism Alive Suspected Failed Dissmn (Suspect pj) Dissmn (Alive pj) Dissmn (Failed pj) pi ::

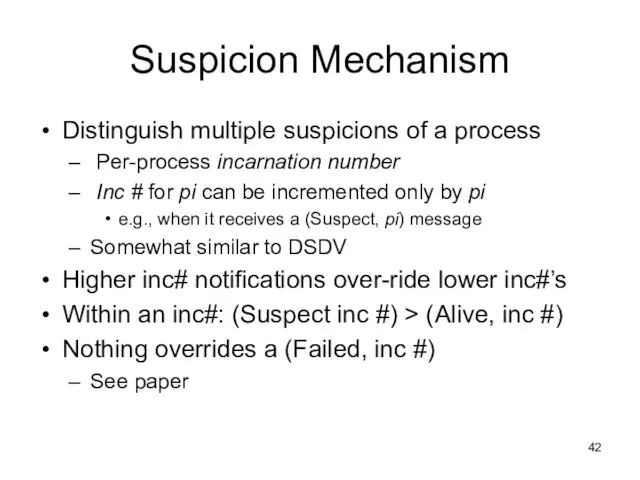

- 42. Suspicion Mechanism Distinguish multiple suspicions of a process Per-process incarnation number Inc # for pi can

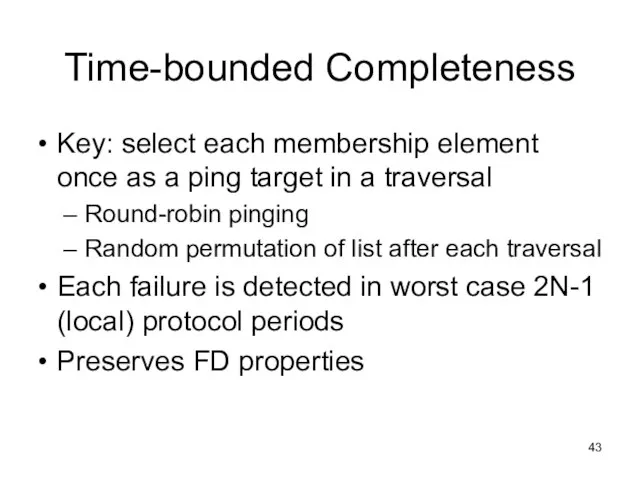

- 43. Time-bounded Completeness Key: select each membership element once as a ping target in a traversal Round-robin

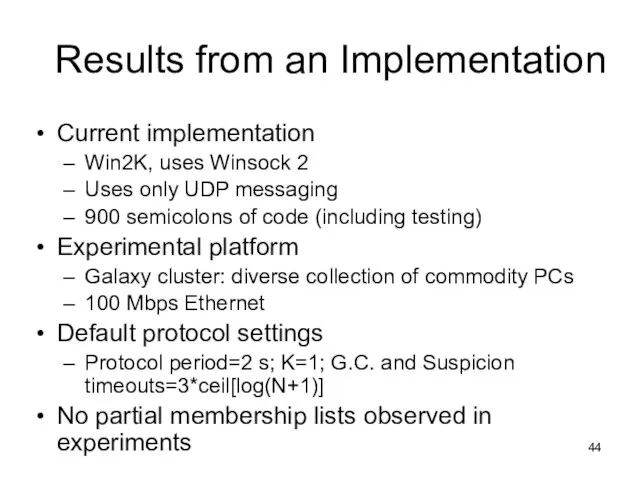

- 44. Results from an Implementation Current implementation Win2K, uses Winsock 2 Uses only UDP messaging 900 semicolons

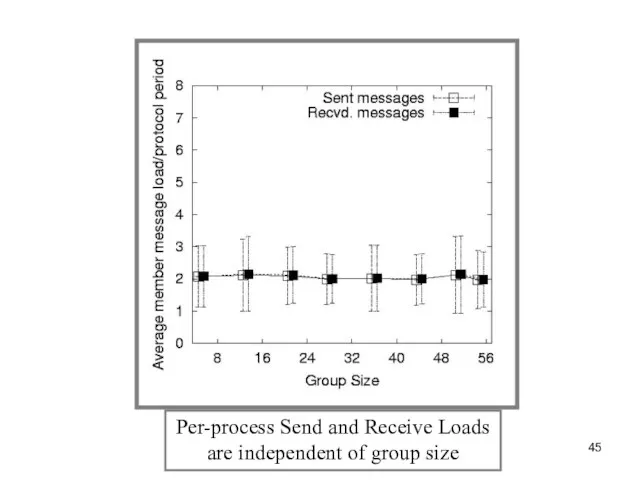

- 45. Per-process Send and Receive Loads are independent of group size

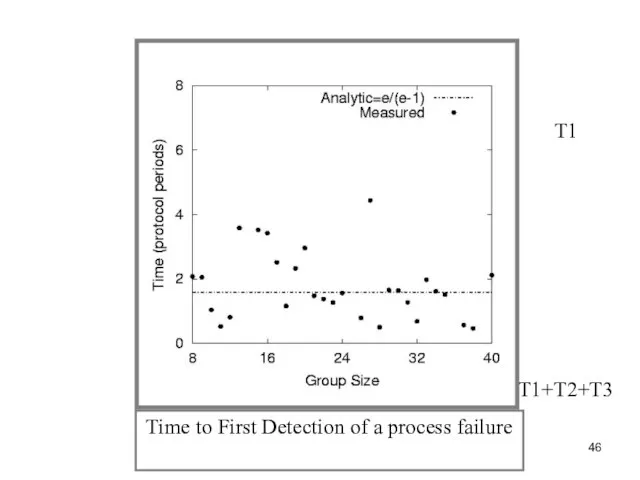

- 46. Time to First Detection of a process failure T1 T1+T2+T3

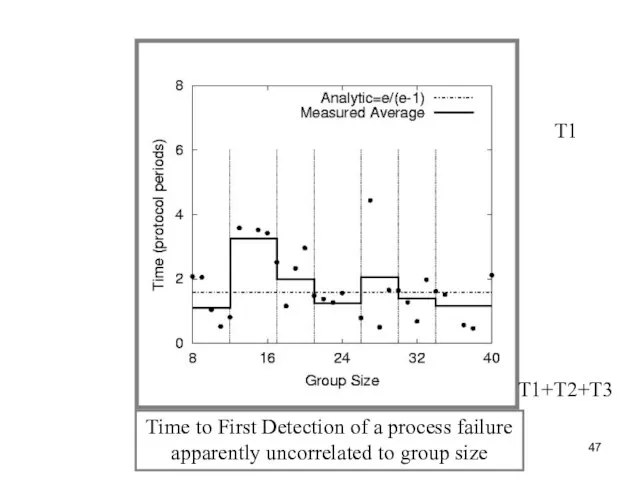

- 47. T1 Time to First Detection of a process failure apparently uncorrelated to group size T1+T2+T3

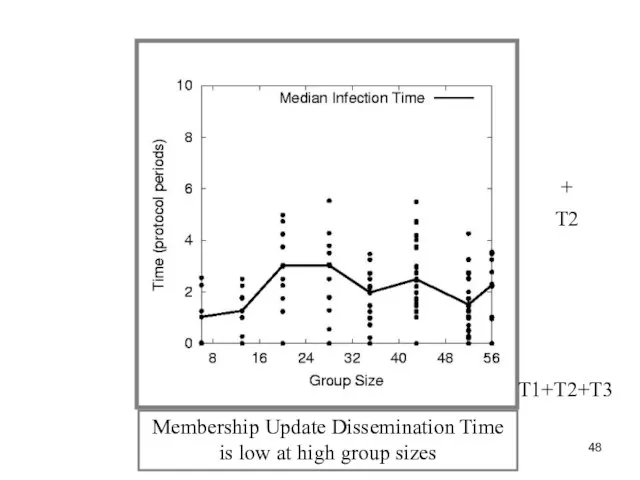

- 48. Membership Update Dissemination Time is low at high group sizes T2 + T1+T2+T3

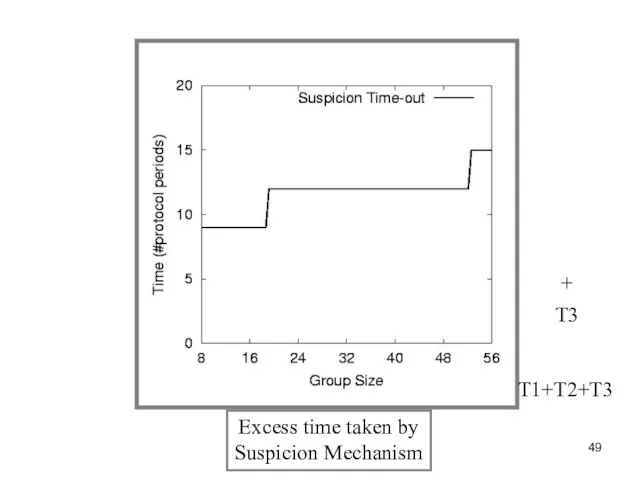

- 49. Excess time taken by Suspicion Mechanism T3 + T1+T2+T3

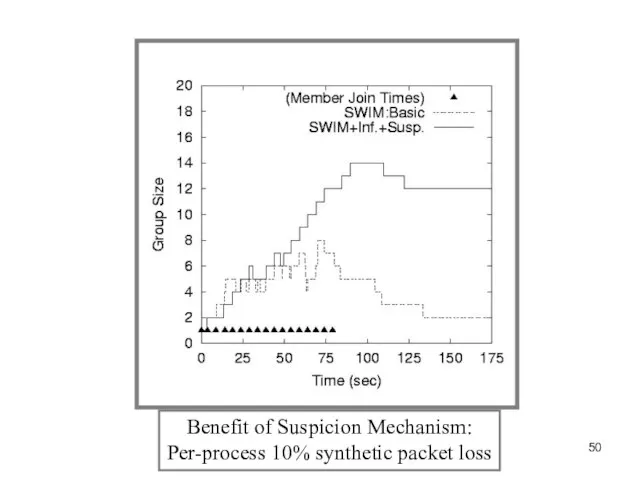

- 50. Benefit of Suspicion Mechanism: Per-process 10% synthetic packet loss

- 51. More discussion points It turns out that with a partial membership list that is uniformly random,

- 52. Reminder – Due this Sunday April 3rd at 11.59 PM Project Midterm Report due, 11.59 pm

- 54. Скачать презентацию

![Prob. of being pinged in T’= E[T ] = Completeness: Any alive](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/376450/slide-34.jpg)

Презентация подготовлена ученицей 1-б класса МОУ лицея № 1 Теплинских Софьей Руководитель: Шупкарина Е.Д.

Презентация подготовлена ученицей 1-б класса МОУ лицея № 1 Теплинских Софьей Руководитель: Шупкарина Е.Д. Бухгалтерия Вашего предприятия (или ИП) даёт сбои. У Вас возникает вопрос «Куда уходят средства?», а внятного ответа на него не нахо

Бухгалтерия Вашего предприятия (или ИП) даёт сбои. У Вас возникает вопрос «Куда уходят средства?», а внятного ответа на него не нахо Правила и процедуры УНТЦ

Правила и процедуры УНТЦ Создать плакат реклам кампании

Создать плакат реклам кампании  Валентина Быстрых учитель математики МСОШт№8 г. Красновишерск

Валентина Быстрых учитель математики МСОШт№8 г. Красновишерск ЭВРИТМИЯ

ЭВРИТМИЯ  Дымковская игрушка

Дымковская игрушка Цвет в натюрморте

Цвет в натюрморте Инфляция. Кейнсианская теория

Инфляция. Кейнсианская теория Технико-коммерческое предложение: Аппараты высокого давления ПРЕУС Е3517

Технико-коммерческое предложение: Аппараты высокого давления ПРЕУС Е3517 Школа«Гражданин 2.0»

Школа«Гражданин 2.0» МИОКАРДИТЫ

МИОКАРДИТЫ  Жемқорлық туралі түсінік

Жемқорлық туралі түсінік Презентация 8

Презентация 8 ПРАВИЛА ПОСТАНОВКИ ЦЕЛИ.

ПРАВИЛА ПОСТАНОВКИ ЦЕЛИ. Магнитное поле планет Солнечной системы

Магнитное поле планет Солнечной системы Презентация по английскому ENGLISH CUISINE

Презентация по английскому ENGLISH CUISINE  новые профессии

новые профессии О реализации органами местного самоуправления Ярославской области требований федерального закона от 27 июля 2010 года «Об организац

О реализации органами местного самоуправления Ярославской области требований федерального закона от 27 июля 2010 года «Об организац Поэзия Серебряного века 9 класс

Поэзия Серебряного века 9 класс Ярче легенды

Ярче легенды «Кенгуру» – это массовый международный математический конкурс- игра под девизом «Математика для всех». Главная цель конкурса – п

«Кенгуру» – это массовый международный математический конкурс- игра под девизом «Математика для всех». Главная цель конкурса – п ПРОЕКТПО ТЕМЕ: ДЕТСКОЕ ПЛАТЬЕ

ПРОЕКТПО ТЕМЕ: ДЕТСКОЕ ПЛАТЬЕ Проектирование:как запланировать и оценить стоимость?

Проектирование:как запланировать и оценить стоимость? Презентация на тему Человечество на Земле

Презентация на тему Человечество на Земле  Pr. Simple vs Pr.Continuous (rule + ex.) (6-7кл)

Pr. Simple vs Pr.Continuous (rule + ex.) (6-7кл) Г.А. Скребицкий "Осень"

Г.А. Скребицкий "Осень" «Некоторые аспекты развития и управления организации » (ГЛОБАЛИЗАЦИЯ & ИКТ)

«Некоторые аспекты развития и управления организации » (ГЛОБАЛИЗАЦИЯ & ИКТ)