Contents

- 2. This presentation contains forward-looking statements regarding the SGI Altix® XE server family and roadmap, other SGI®

- 3. SGI Today Industry Leading Innovation More than 1600 employees 800+ Customer-facing employees 300+ Engineers to continue

- 4. SGI Unique Capabilities 20+ Years of expertise in solving the most demanding compute and data-intensive problems

- 5. Project Carlsbad Next-generation integrated blade platform, with breakthrough performance density and reliability. DENSITY POWER RELIABILITY

- 6. Project Carlsbad: Technology for a New Era in Computing Next generation blade platform for breakthrough scalability

- 7. System Hardware Overview

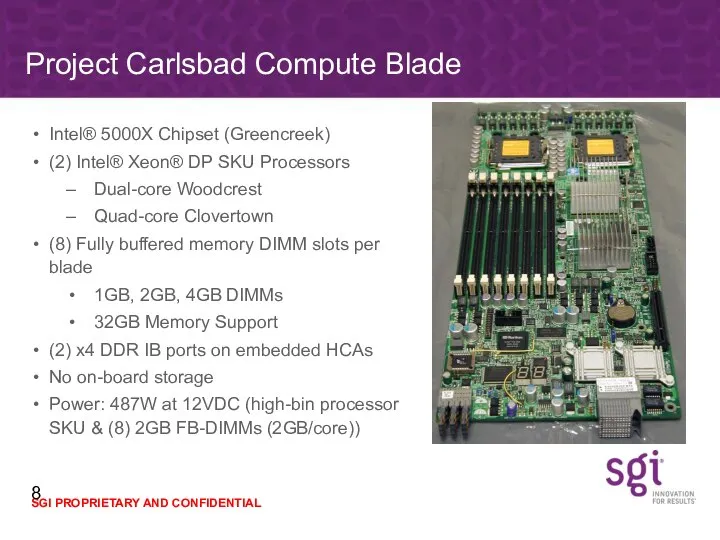

- 8. Intel® 5000X Chipset (Greencreek) (2) Intel® Xeon® DP SKU Processors Dual-core Woodcrest Quad-core Clovertown (8) Fully

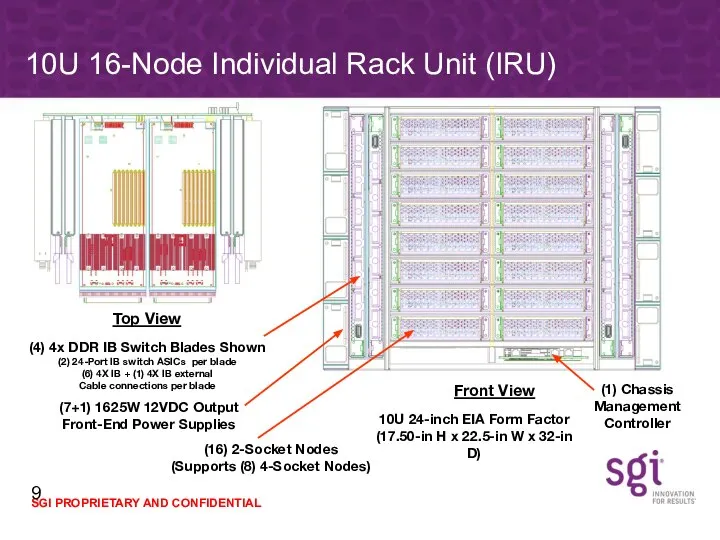

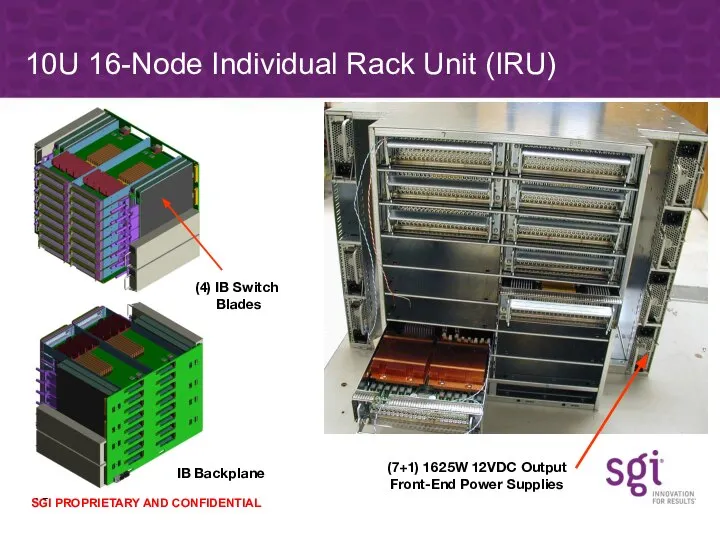

- 9. 10U 16-Node Individual Rack Unit (IRU) (16) 2-Socket Nodes (Supports (8) 4-Socket Nodes) (4) 4x DDR

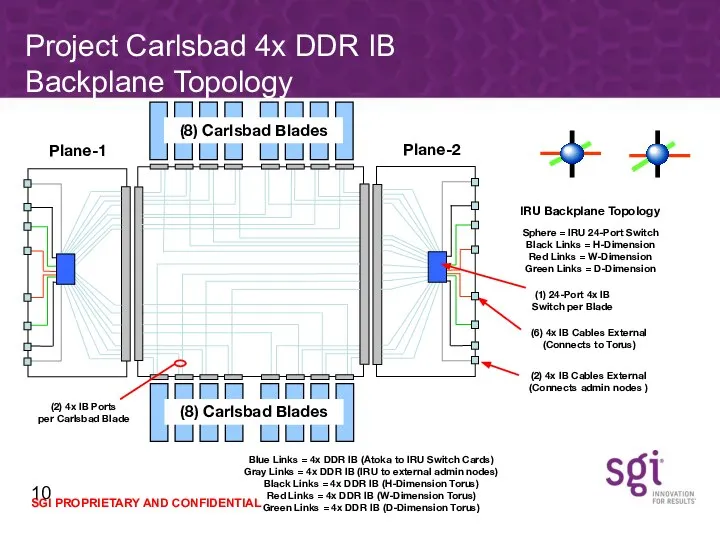

- 10. IRU Backplane Topology Sphere = IRU 24-Port Switch Black Links = H-Dimension Red Links = W-Dimension

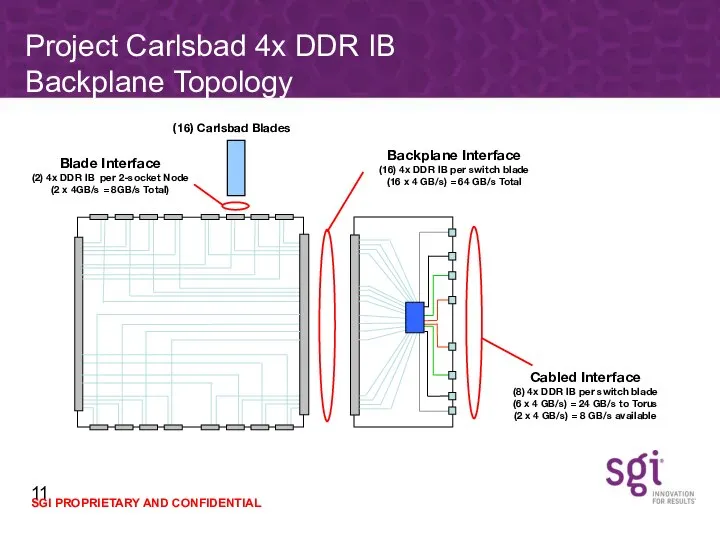

- 11. (16) Carlsbad Blades Blade Interface (2) 4x DDR IB per 2-socket Node (2 x 4GB/s =

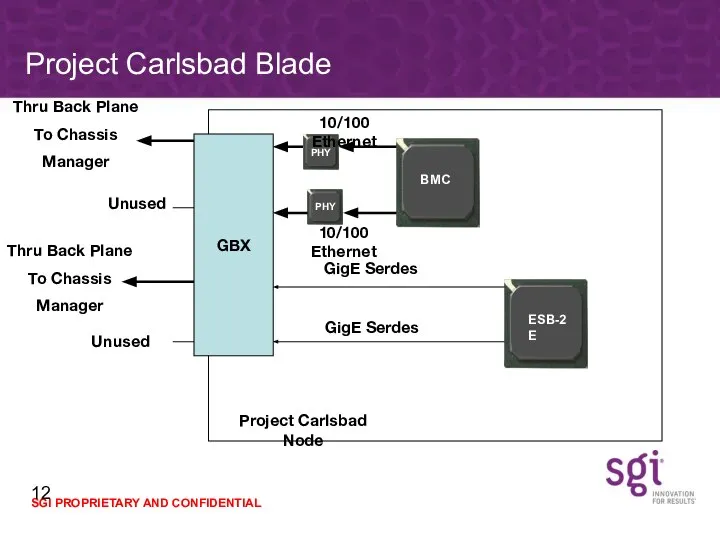

- 12. Project Carlsbad Blade GBX 10/100 Ethernet 10/100 Ethernet GigE Serdes GigE Serdes Unused Unused Thru Back

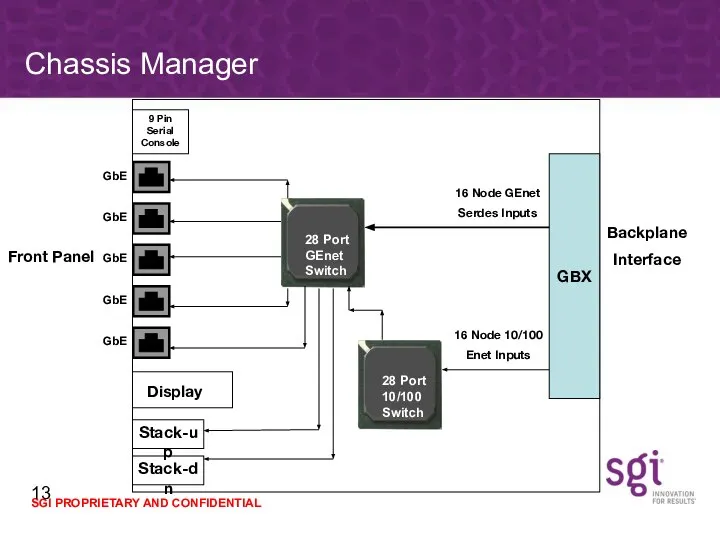

- 13. Chassis Manager Front Panel GBX 9 Pin Serial Console Stack-up Stack-dn 16 Node GEnet Serdes Inputs

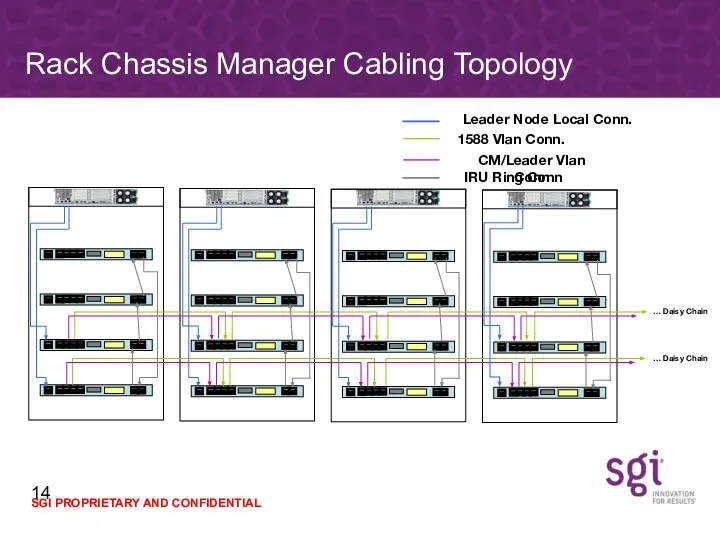

- 14. Rack Chassis Manager Cabling Topology Leader Node Local Conn. 1588 Vlan Conn. CM/Leader Vlan Conn IRU

- 15. (7+1) 1625W 12VDC Output Front-End Power Supplies IB Backplane (4) IB Switch Blades 10U 16-Node Individual

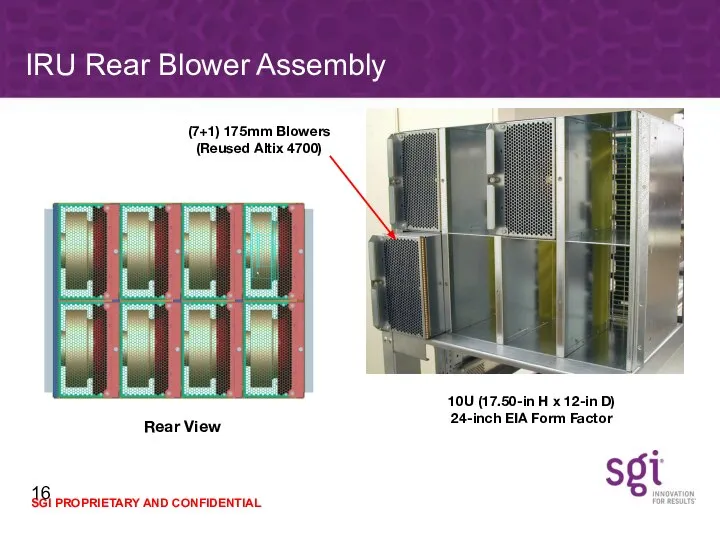

- 16. 10U (17.50-in H x 12-in D) 24-inch EIA Form Factor IRU Rear Blower Assembly Rear View

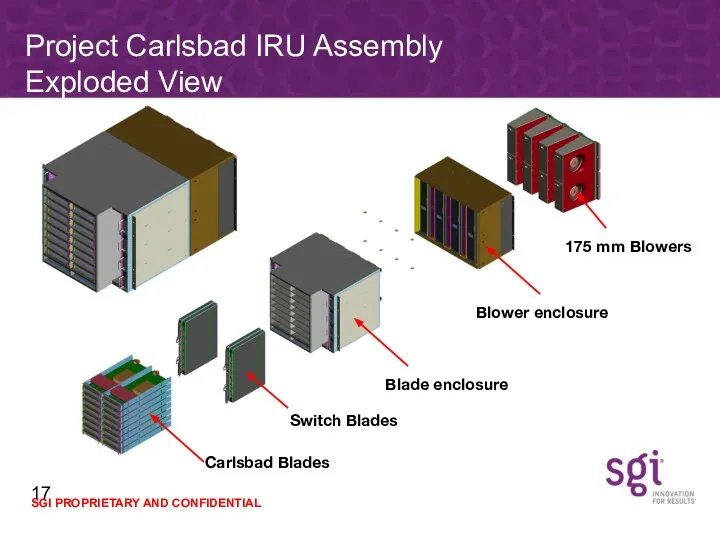

- 17. Project Carlsbad IRU Assembly Exploded View Carlsbad Blades Switch Blades Blade enclosure Blower enclosure 175 mm

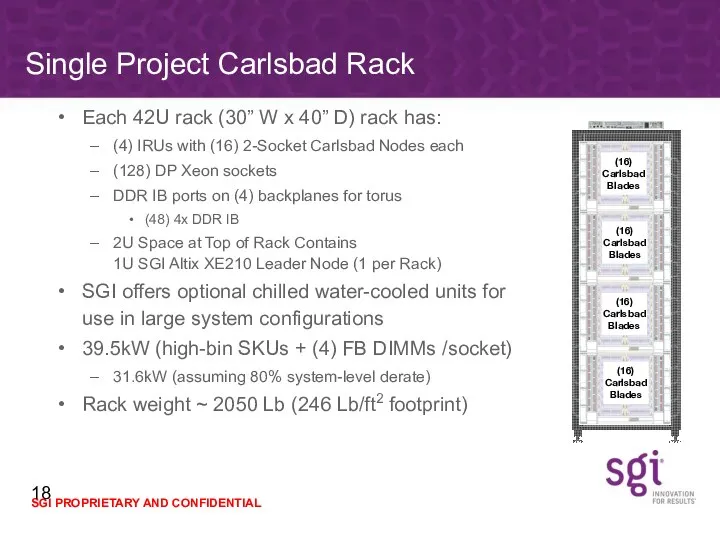

- 18. Single Project Carlsbad Rack Each 42U rack (30” W x 40” D) has: (4) IRUs

- 19. 19” Standard Rack Also Supported…

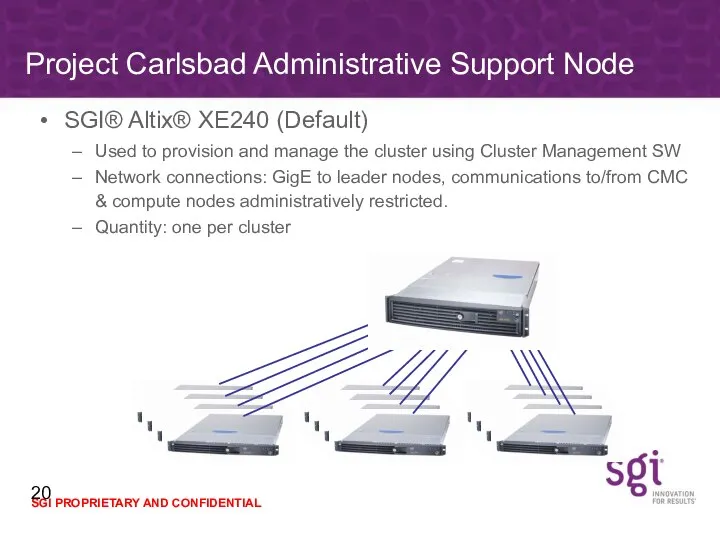

- 20. SGI® Altix® XE240 (Default) Used to provision and manage the cluster using Cluster Management SW Network

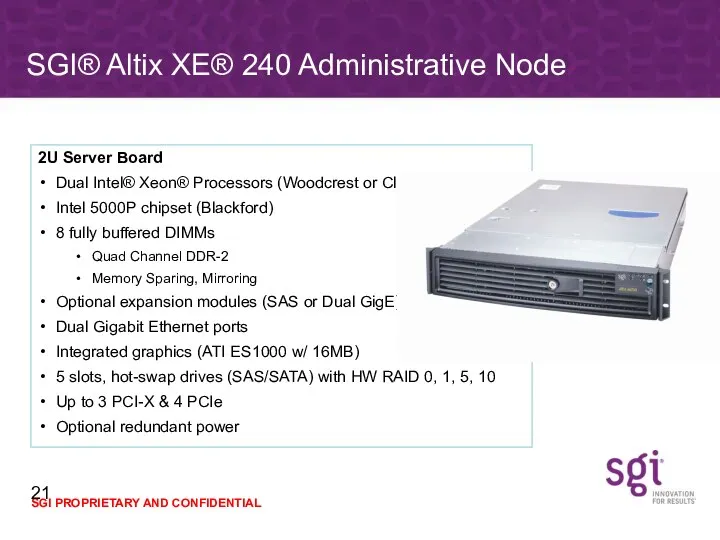

- 21. SGI® Altix XE® 240 Administrative Node 2U Server Board Dual Intel® Xeon® Processors (Woodcrest or Clovertown)

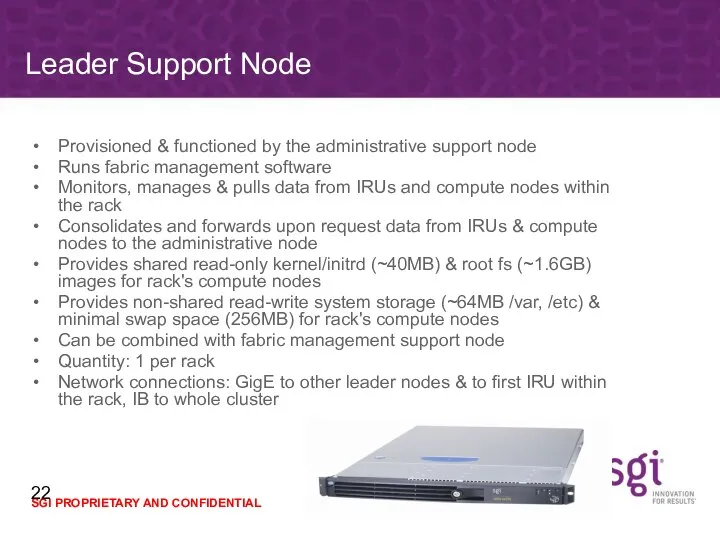

- 22. Leader Support Node Provisioned & functioned by the administrative support node Runs fabric management software Monitors,

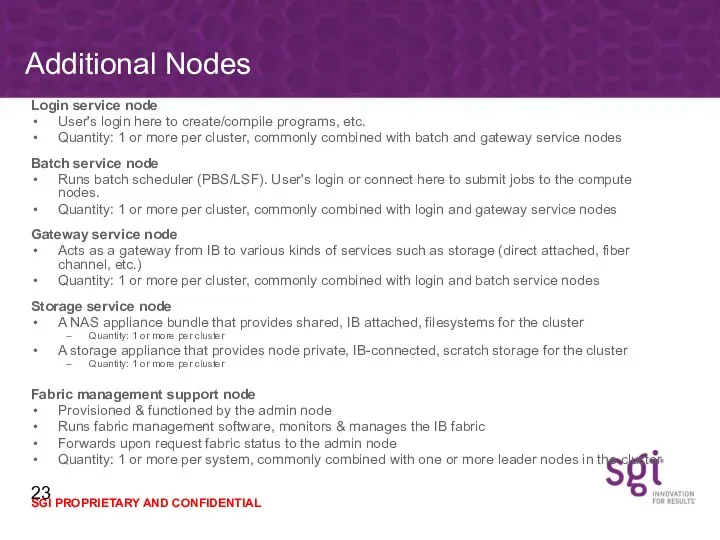

- 23. Additional Nodes Login service node Users log in here to create/compile programs, etc. Quantity: 1 or more

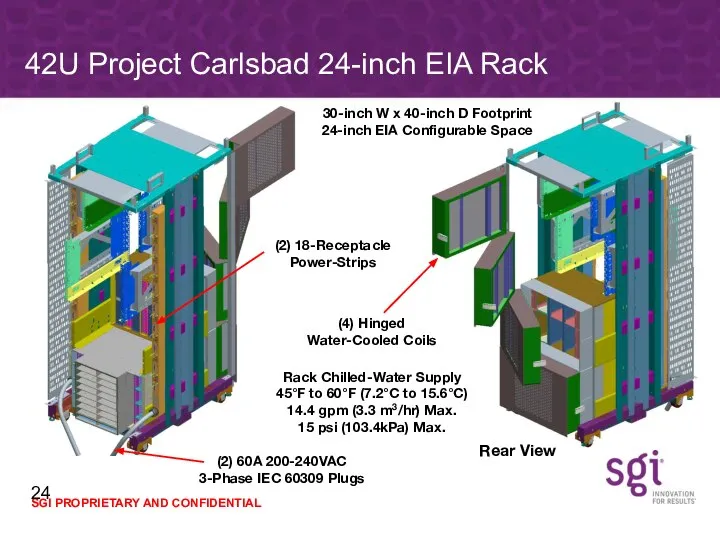

- 24. 42U Project Carlsbad 24-inch EIA Rack 30-inch W x 40-inch D Footprint 24-inch EIA Configurable Space

- 25. 42U Project Carlsbad 24-inch EIA Rack (Empty)

- 26. Concerns about Facility (Space, Weight, Power)

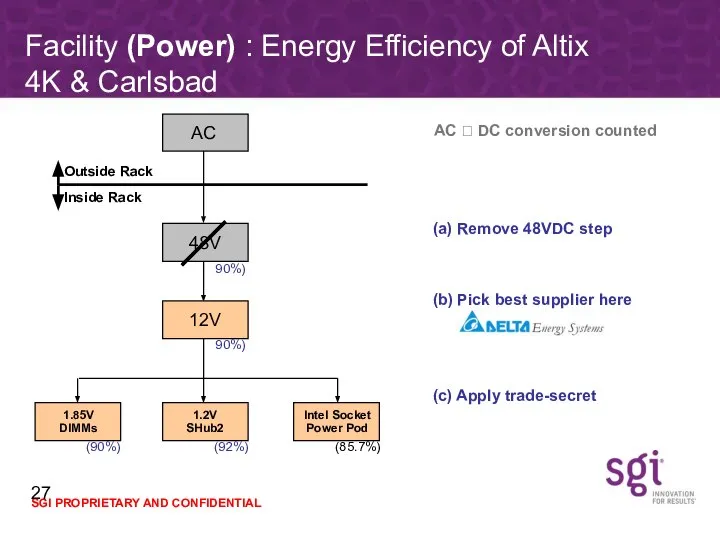

- 27. Facility (Power): Energy Efficiency of Altix 4K & Carlsbad AC → DC conversion counted (c)

- 28. SGI Energy Efficiency SGI® Altix® 4700 server delivers a world-class power solution High efficiency, high reliability,

- 29. Topology Overview

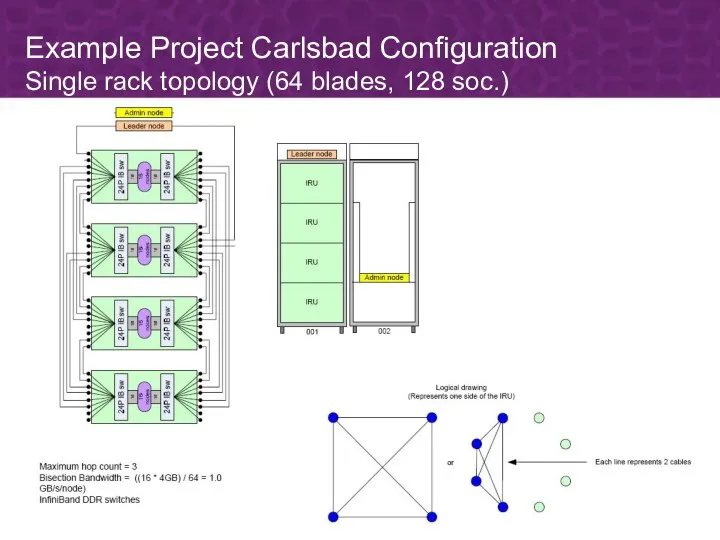

- 30. Example Project Carlsbad Configuration Single rack topology (64 blades, 128 soc.)

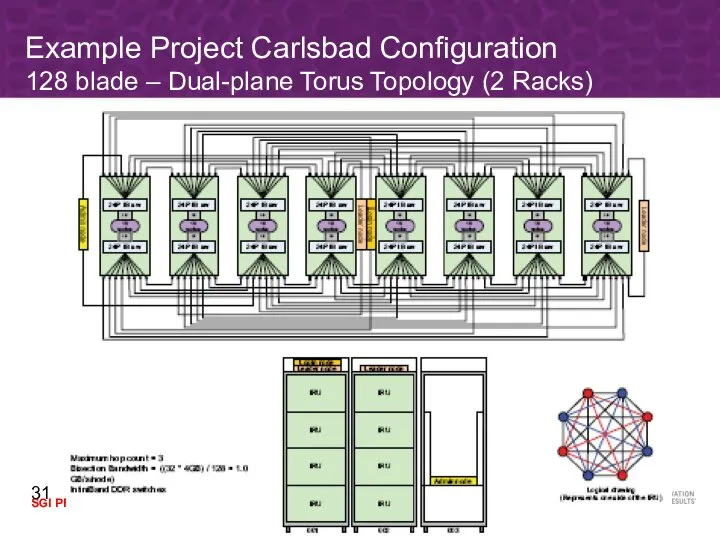

- 31. Example Project Carlsbad Configuration 128 blade – Dual-plane Torus Topology (2 Racks)

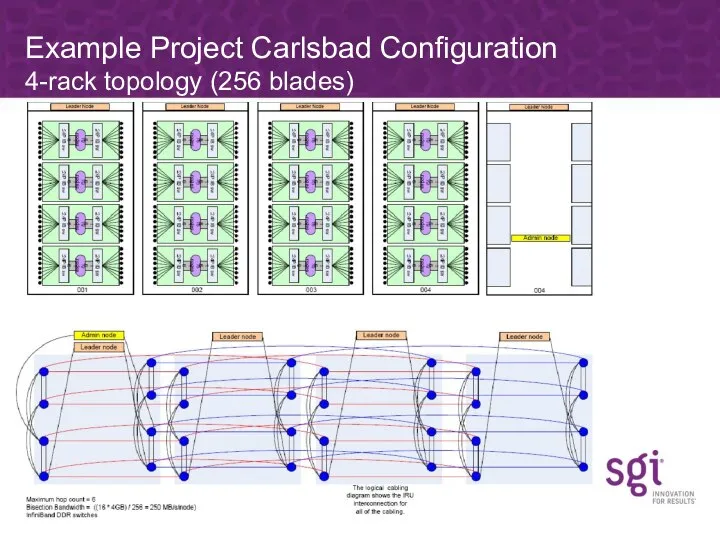

- 32. Example Project Carlsbad Configuration 4-rack topology (256 blades)

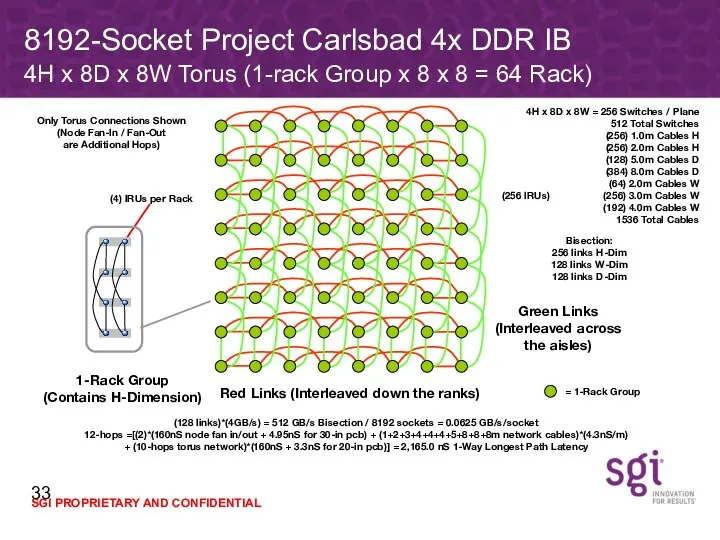

- 33. 8192-Socket Project Carlsbad 4x DDR IB 4H x 8D x 8W Torus (1-rack Group x 8

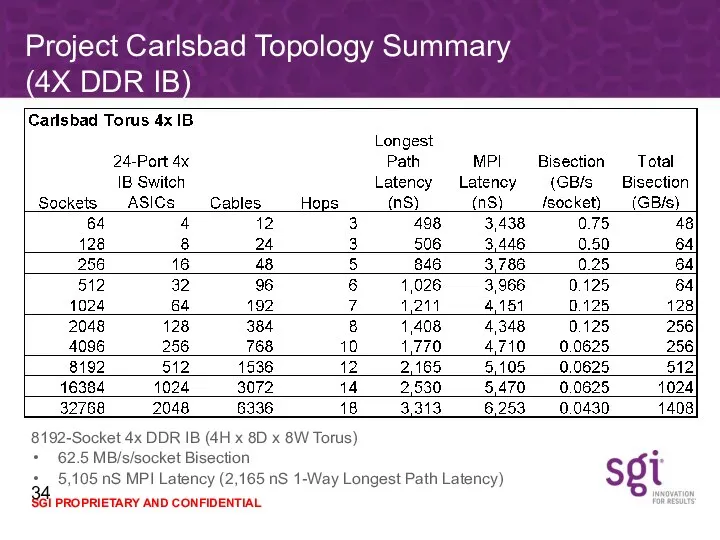

- 34. Project Carlsbad Topology Summary (4X DDR IB) 8192-Socket 4x DDR IB (4H x 8D x 8W

- 35. Software Overview

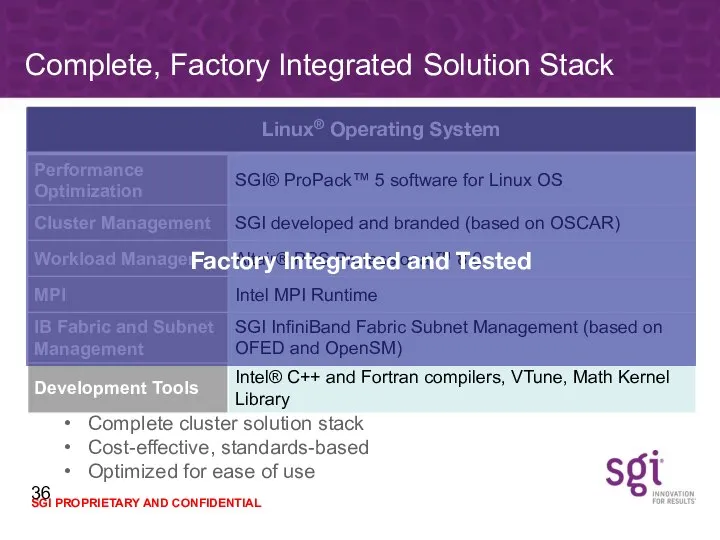

- 36. Complete, Factory Integrated Solution Stack Linux® Operating System Complete cluster solution stack Cost-effective, standards-based Optimized for

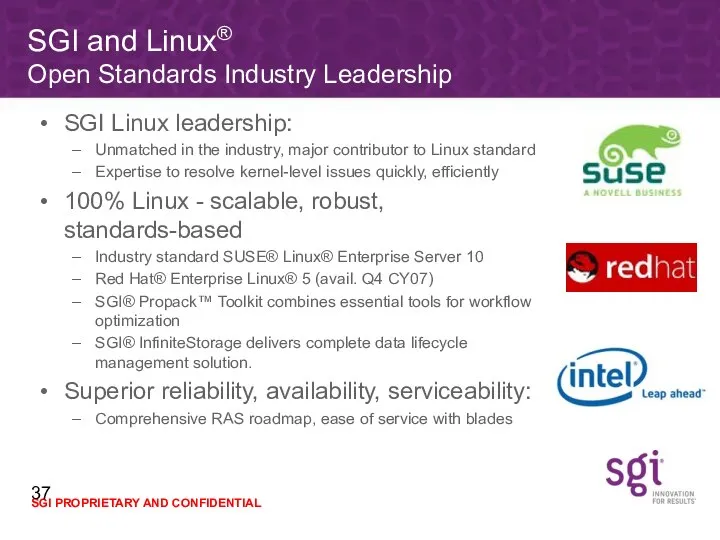

- 37. SGI and Linux® Open Standards Industry Leadership SGI Linux leadership: Unmatched in the industry, major contributor

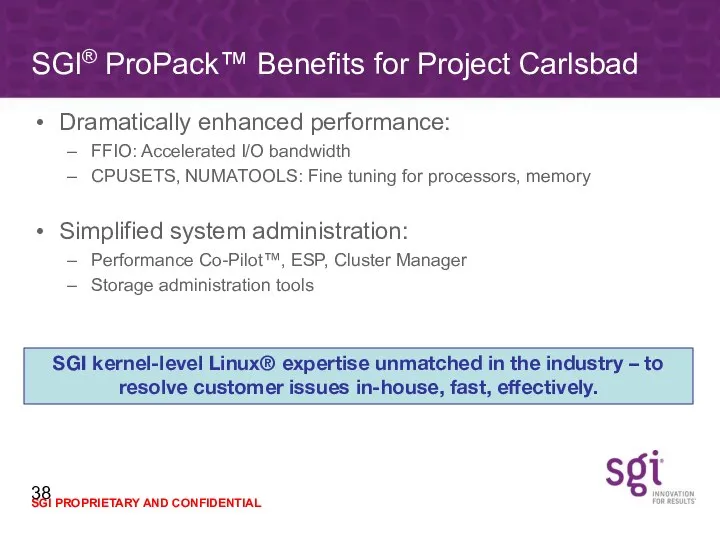

- 38. SGI® ProPack™ Benefits for Project Carlsbad Dramatically enhanced performance: FFIO: Accelerated I/O bandwidth CPUSETS, NUMATOOLS: Fine

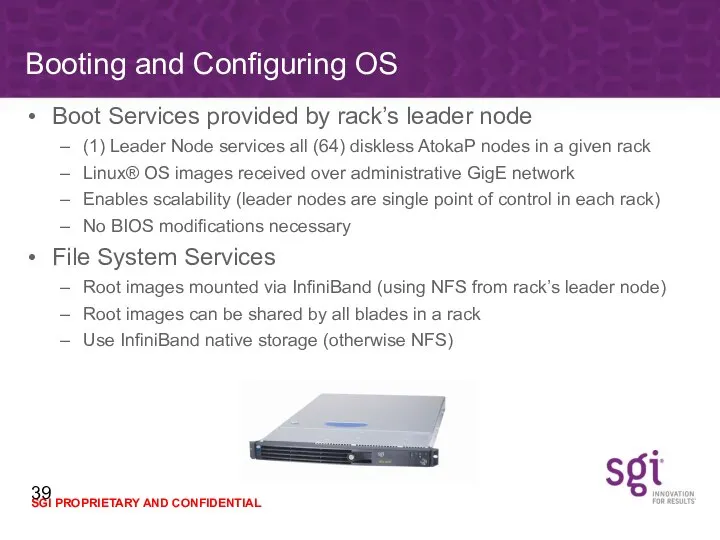

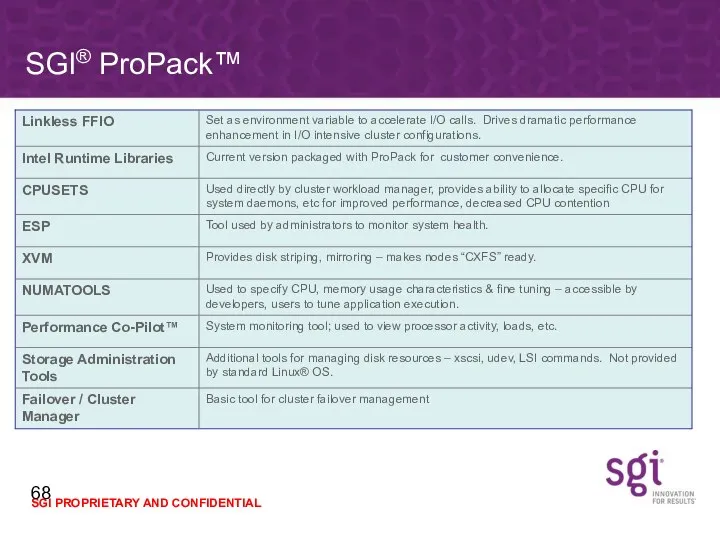

- 39. Boot Services provided by rack’s leader node (1) Leader Node services all (64) diskless AtokaP nodes

- 40. Use a standard Linux® OS distribution Use a standard kernel and remove all unnecessary RPMs Preserve

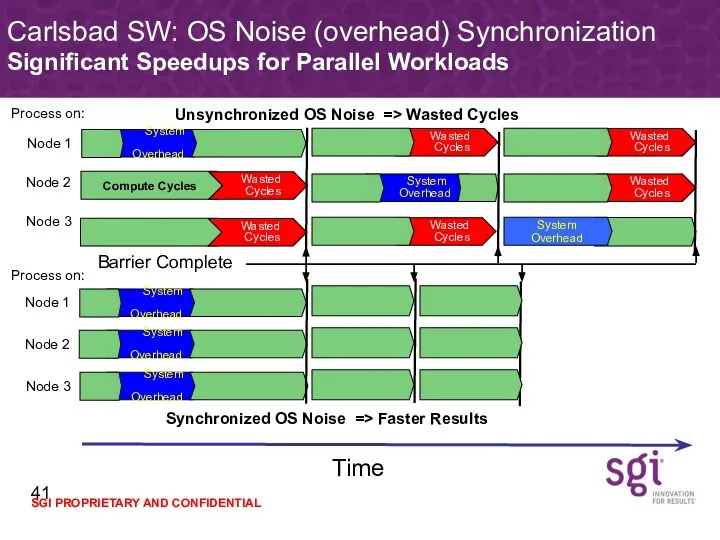

- 41. Carlsbad SW: OS Noise (overhead) Synchronization Significant Speedups for Parallel Workloads Time Barrier Complete Process on:

- 42. Node-level Baseboard Management Controller (BMC) and onboard NICs Utilize industry standard IPMI 2.0 compliant protocols Chassis

- 43. SGI Developed Solution Based on Open Source Cluster Application Resources (OSCAR) from OpenClusterGroup.org Provides centralized SW

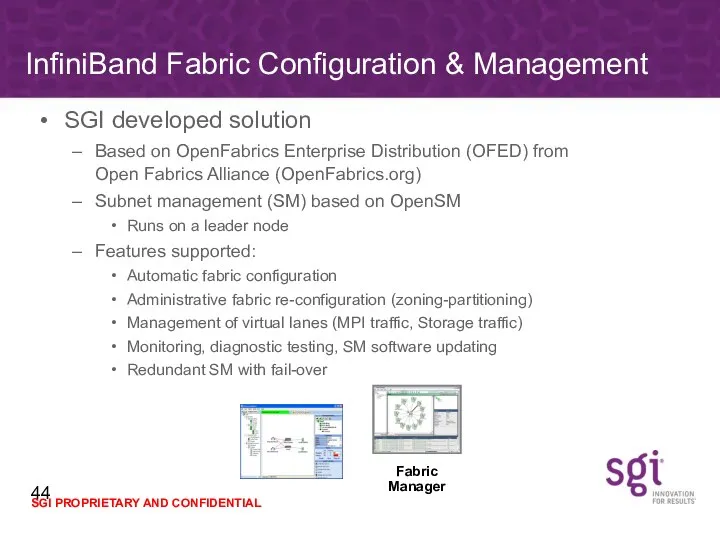

- 44. SGI developed solution Based on OpenFabrics Enterprise Distribution (OFED) from Open Fabrics Alliance (OpenFabrics.org) Subnet management

- 45. Storage Integration

- 46. Storage – Typical needs Key Types of IO (each with different IO usage patterns) Shared Systems

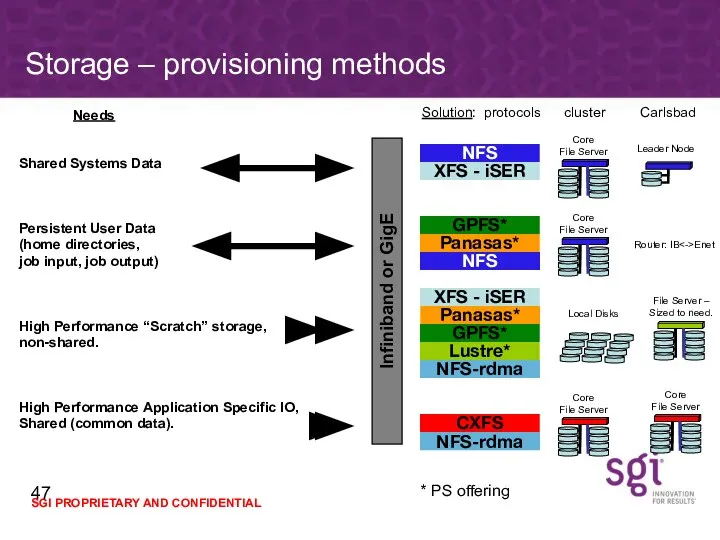

- 47. Storage – provisioning methods Needs Shared Systems Data Persistent User Data (home directories, job input, job

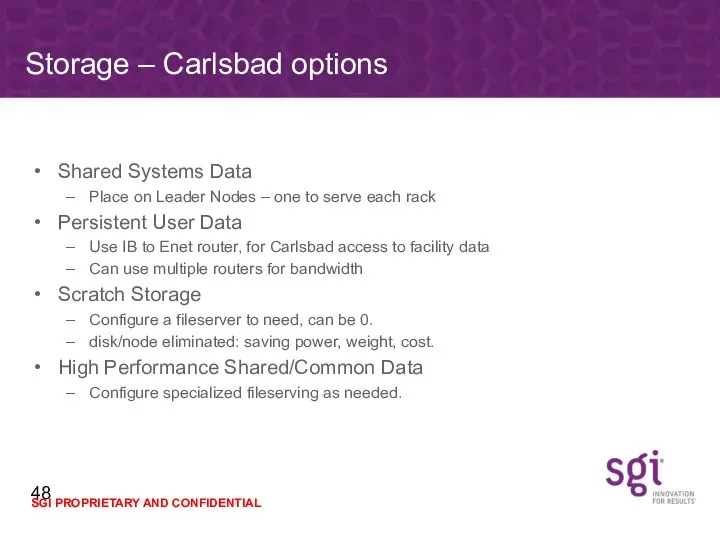

- 48. Storage – Carlsbad options Shared Systems Data Place on Leader Nodes – one to serve each

- 49. Roadmap

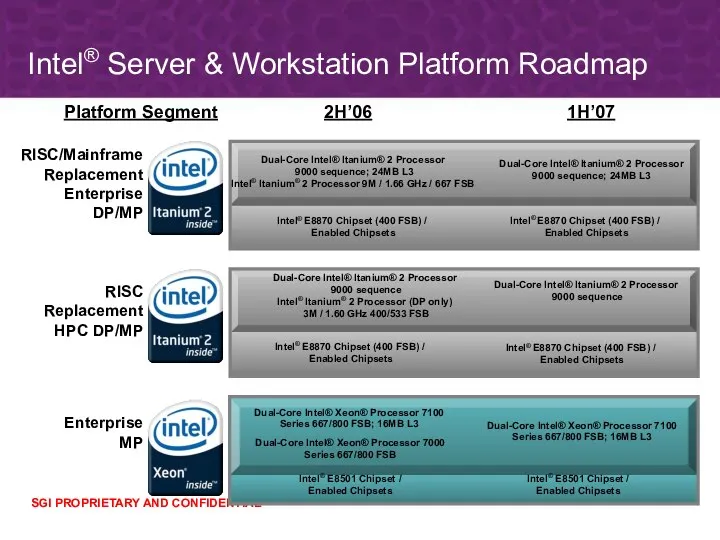

- 50. 1H’07 2H’06 Platform Segment RISC/Mainframe Replacement Enterprise DP/MP RISC Replacement HPC DP/MP Enterprise MP Intel® E8870

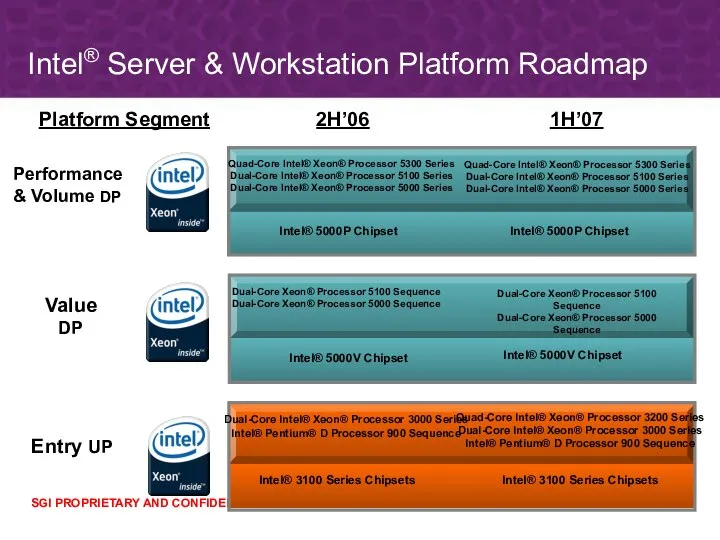

- 51. 1H’07 2H’06 Platform Segment Performance & Volume DP Value DP Entry UP Intel® 5000P Chipset Quad-Core

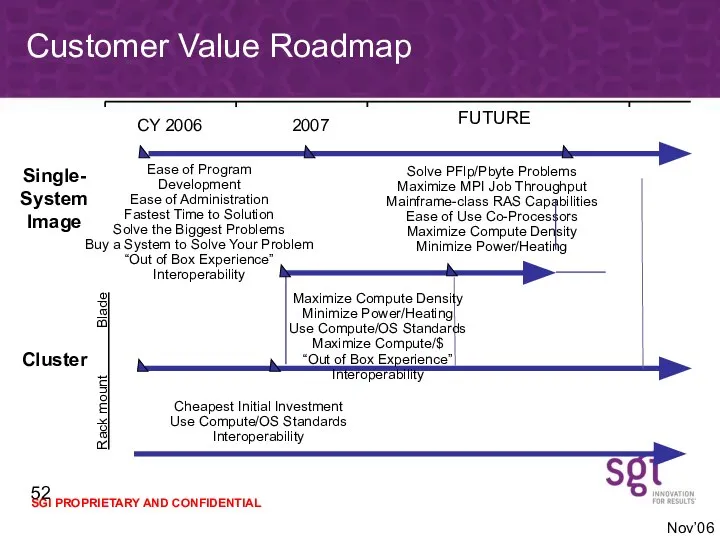

- 52. Solve PFlop/Pbyte Problems Maximize MPI Job Throughput Mainframe-class RAS Capabilities Ease of Use Co-Processors Maximize Compute

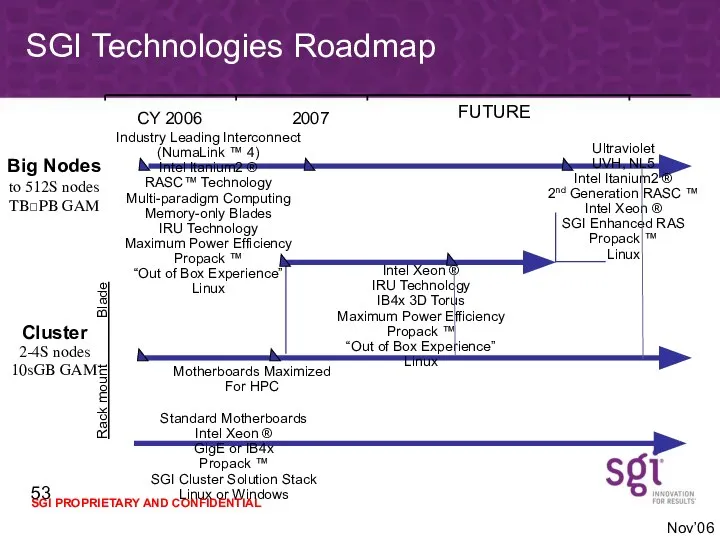

- 53. SGI Technologies Roadmap CY 2006 2007 Big Nodes to 512S nodes TBPB GAM Cluster 2-4S nodes

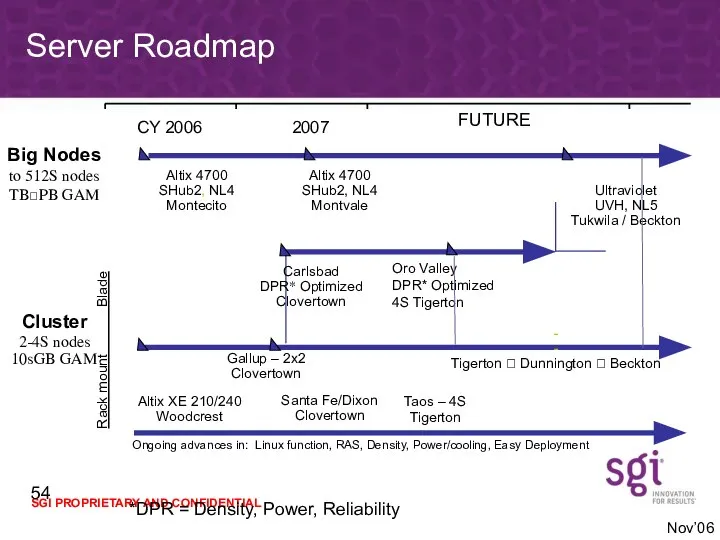

- 54. Server Roadmap CY 2006 2007 Altix 4700 SHub2, NL4 Montvale Big Nodes to 512S nodes TBPB

- 55. Summary

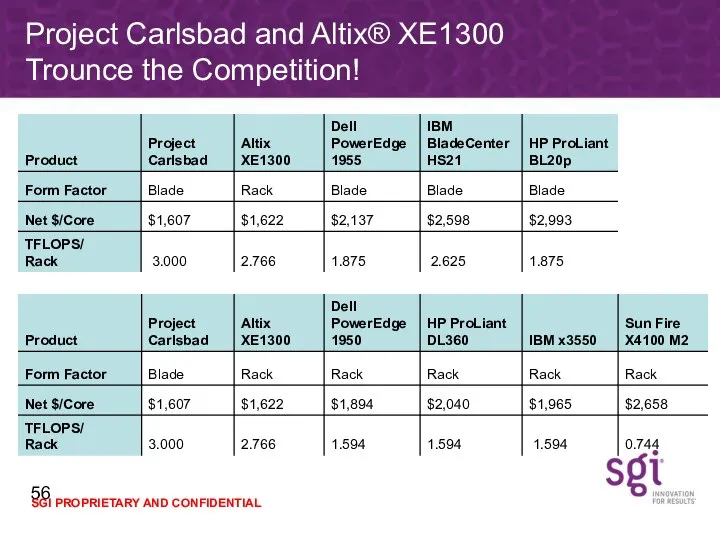

- 56. Project Carlsbad and Altix® XE1300 Trounce the Competition!

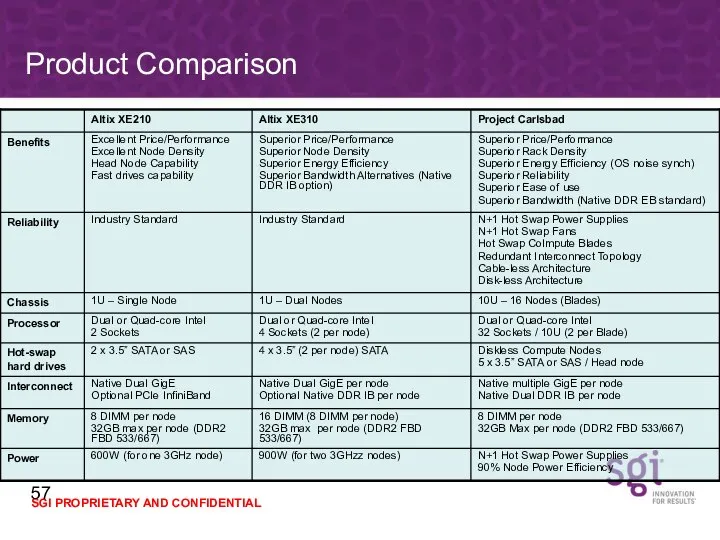

- 57. Product Comparison

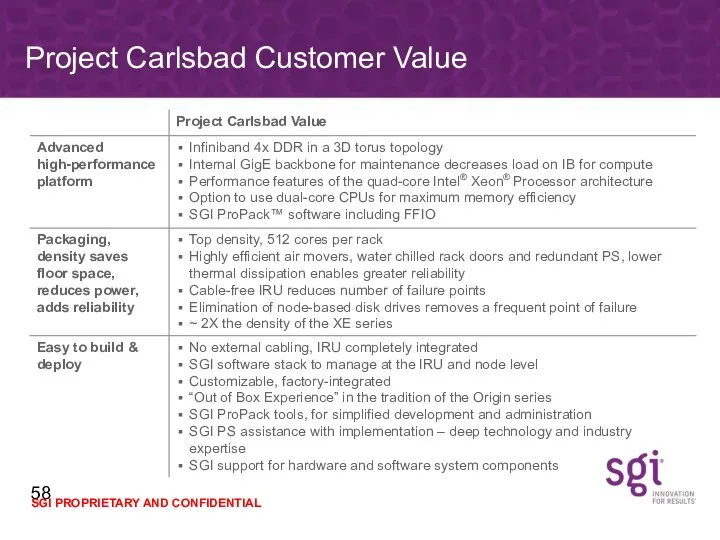

- 58. Project Carlsbad Customer Value

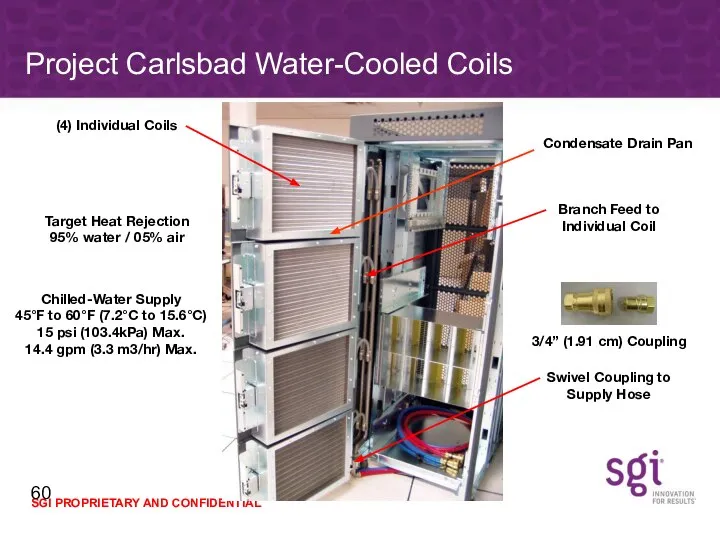

- 60. Project Carlsbad Water-Cooled Coils Target Heat Rejection 95% water / 5% air (4) Individual Coils Chilled-Water

- 61. Project Carlsbad Water-Cooled Coils

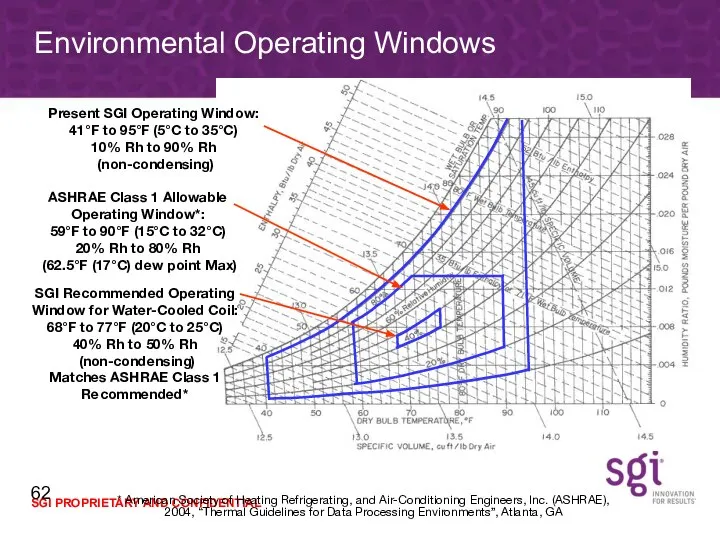

- 62. Environmental Operating Windows ASHRAE Class 1 Allowable Operating Window*: 59°F to 90°F (15°C to 32°C) 20%

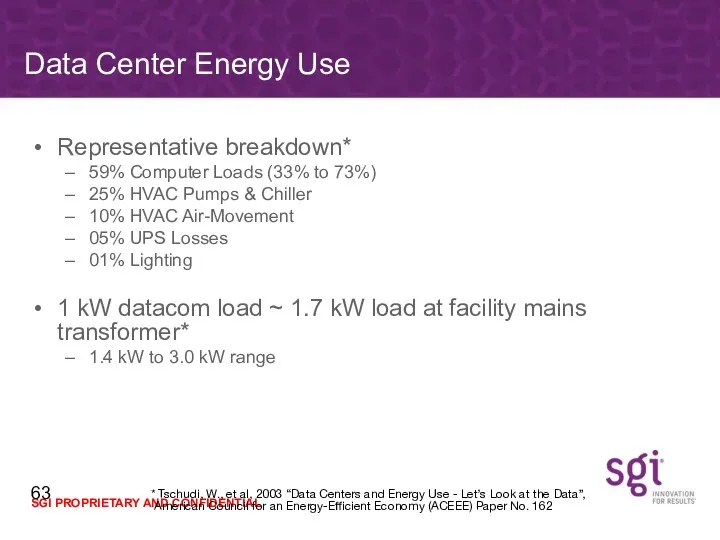

- 63. Representative breakdown* 59% Computer Loads (33% to 73%) 25% HVAC Pumps & Chiller 10% HVAC Air-Movement

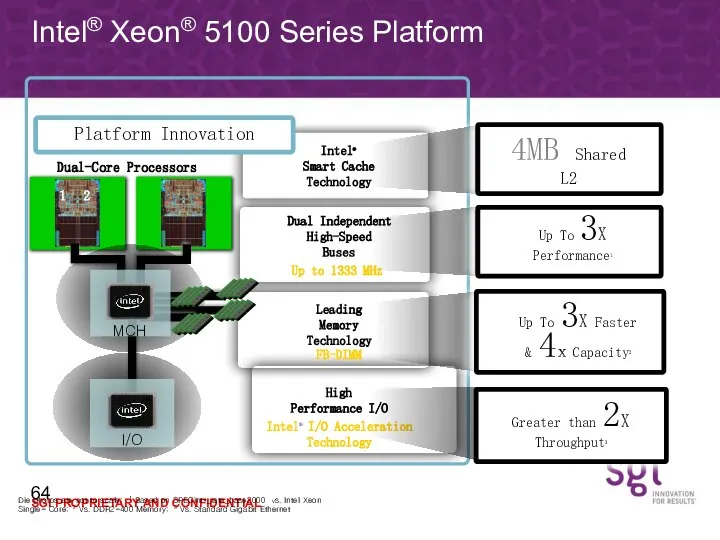

- 64. Intel® Xeon® 5100 Series Platform Die photos are not to scale; 1 Based on SPECint*_rate_base2000 vs.

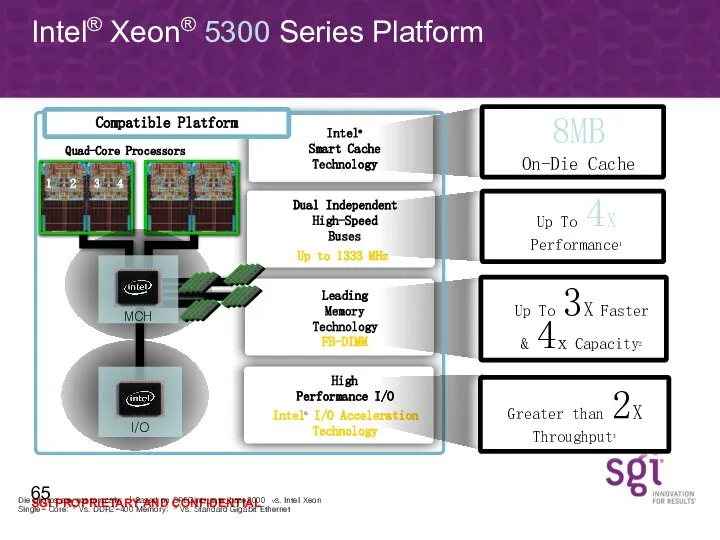

- 65. Intel® Xeon® 5300 Series Platform Die photos are not to scale; 1 Based on SPECint*_rate_base2000 vs.

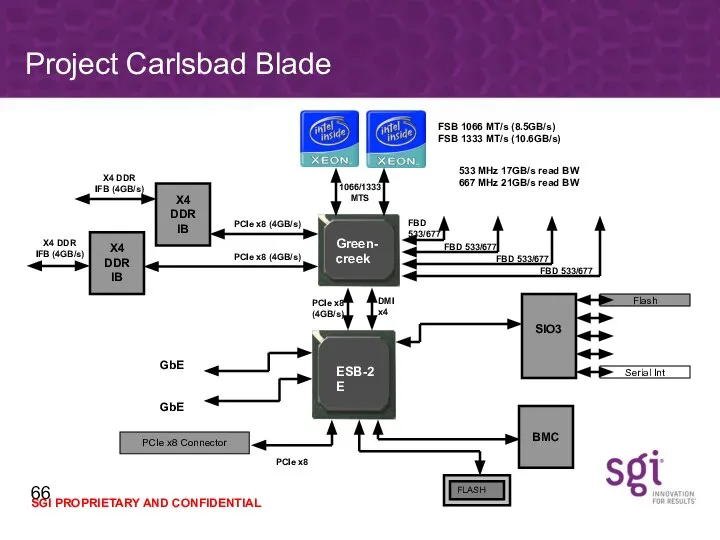

- 66. Project Carlsbad Blade Greencreek SIO3 Serial Int Flash FLASH GbE PCIe x8 (4GB/s) FBD 533/667 FBD

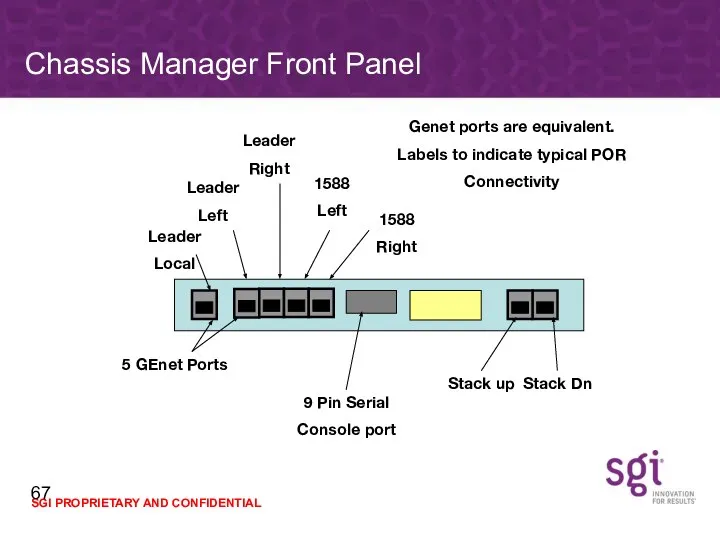

- 67. Chassis Manager Front Panel 9 Pin Serial Console port Stack up Stack Dn 5 GEnet Ports

- 68. SGI® ProPack™

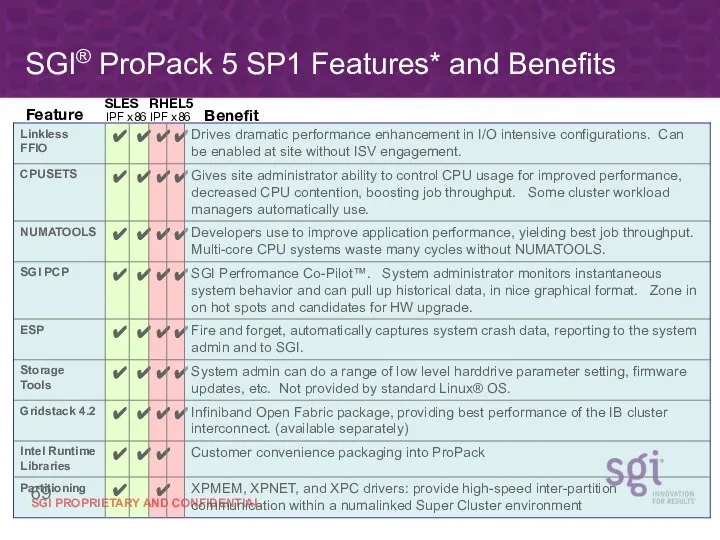

- 69. SGI® ProPack 5 SP1 Features* and Benefits IPF x86 IPF x86 SLES RHEL5 Feature Benefit

- 71. Скачать презентацию

Презентация на тему Растительный мир тундры

Презентация на тему Растительный мир тундры Псалом 134. Славы Господней

Псалом 134. Славы Господней Презентация на тему Русь в правление Ивана Грозного (1533-1584) 10 класс

Презентация на тему Русь в правление Ивана Грозного (1533-1584) 10 класс «Бабушки ON-line» социально-экономический проект

«Бабушки ON-line» социально-экономический проект История блокады Ленинграда

История блокады Ленинграда Основы экологии и экологические проблемы природопользования

Основы экологии и экологические проблемы природопользования Презентация на тему Семейство Зонтичные

Презентация на тему Семейство Зонтичные  Современные подходы в организации деятельности по развитию кадрового потенциала сотрудников

Современные подходы в организации деятельности по развитию кадрового потенциала сотрудников  "Карагайский бор"

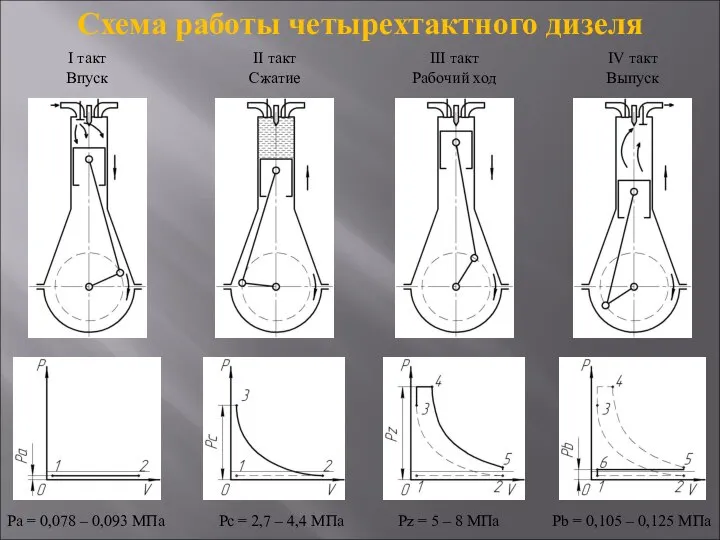

"Карагайский бор" Схема работы четырехтактного дизеля на судне. Центробежно-вихревой насос. Система управления котла-утилизатор

Схема работы четырехтактного дизеля на судне. Центробежно-вихревой насос. Система управления котла-утилизатор Историческое прошлое веера

Историческое прошлое веера Edem. Проблема

Edem. Проблема Фьючерсный контракт и его актуальность

Фьючерсный контракт и его актуальность Соединения химических элементов. Степень окисления

Соединения химических элементов. Степень окисления Москва

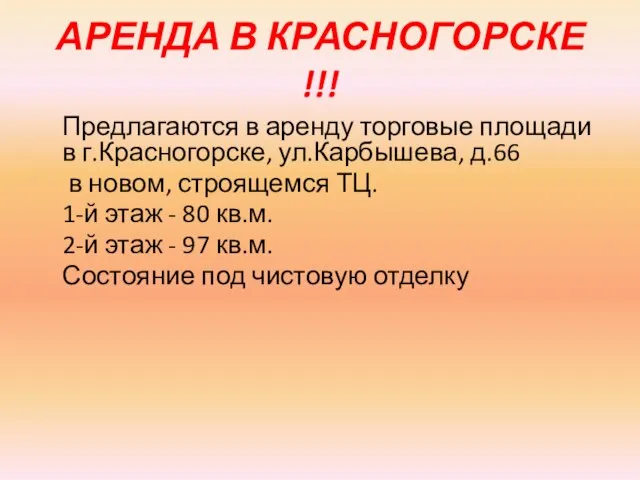

Москва АРЕНДА В КРАСНОГОРСКЕ !!!

АРЕНДА В КРАСНОГОРСКЕ !!! Пролетарская культура

Пролетарская культура Перу Республика

Перу Республика первоночальные сведения о строении вещества

первоночальные сведения о строении вещества Презентация на тему Библейские мотивы лирики А.С.Пушкина

Презентация на тему Библейские мотивы лирики А.С.Пушкина Разработка методологических основ мониторинга и прогнозирования влияния геоастрофизических факторов на характер возникновения

Разработка методологических основ мониторинга и прогнозирования влияния геоастрофизических факторов на характер возникновения  Распространенность наркомании и современные подходы к профилактике медико-социальных последствий «проблемного» потребления нар

Распространенность наркомании и современные подходы к профилактике медико-социальных последствий «проблемного» потребления нар ТМ Фрекен БОК, Россия

ТМ Фрекен БОК, Россия Краевой конкурс детского фольклорного творчества «Солнцеворот»

Краевой конкурс детского фольклорного творчества «Солнцеворот» Литературная викторина по творчеству А.П. Чехова 7 – 8 классы 2 тур

Литературная викторина по творчеству А.П. Чехова 7 – 8 классы 2 тур ФГОС начального общего образования: нормативно-методологические основания и новые образовательные результаты

ФГОС начального общего образования: нормативно-методологические основания и новые образовательные результаты Гимназия № 12

Гимназия № 12 Презентация на тему Романтизм в музыке

Презентация на тему Романтизм в музыке