Slide 2

Pachshenko Galina Nikolaevna

Associate Professor of Information System Department, Candidate of Technical Science

Slide 4 Topics

Single-layer neural networks

Multi-layer neural networks

Single perceptron

Multi-layer perceptron

Hebbian Learning Rule

Back propagation

Delta-rule

Weight adjustment

Cost Function

Classification

(Independent Work)

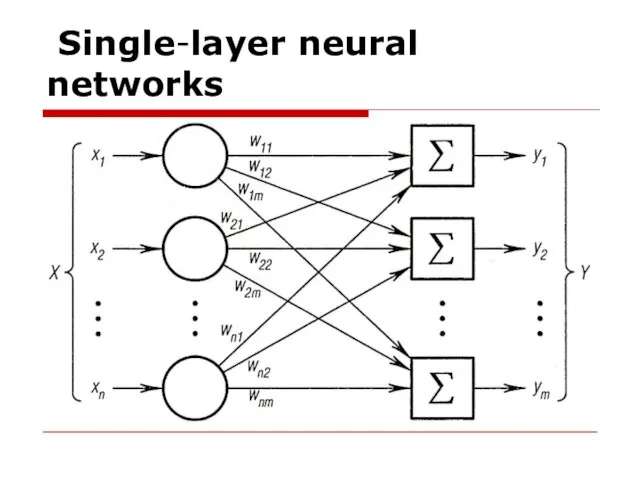

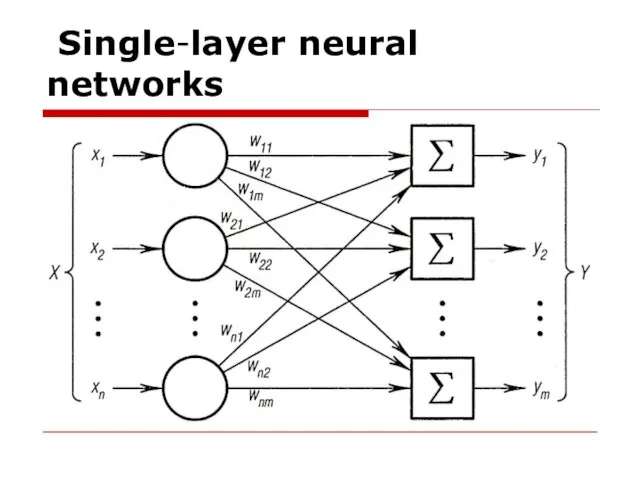

Slide 5 Single-layer neural networks

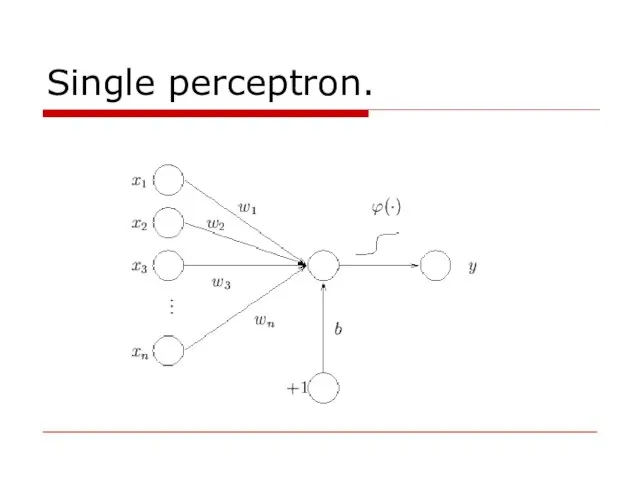

Slide 7 Single perceptron

The perceptron computes a single output from multiple real-valued inputs by forming a linear combination according to its input weights and then possibly passing the result through an activation function.

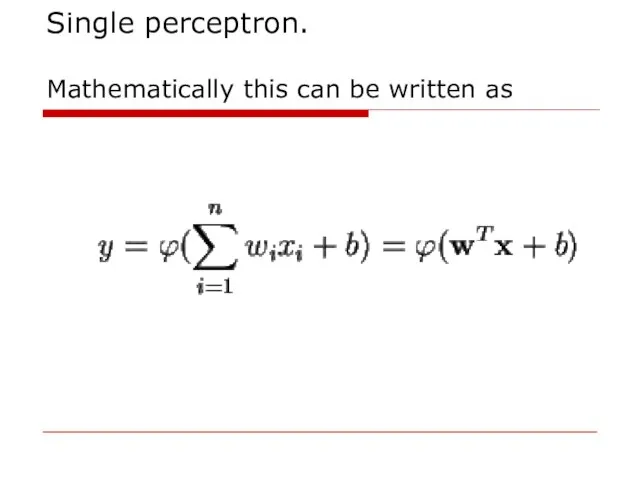

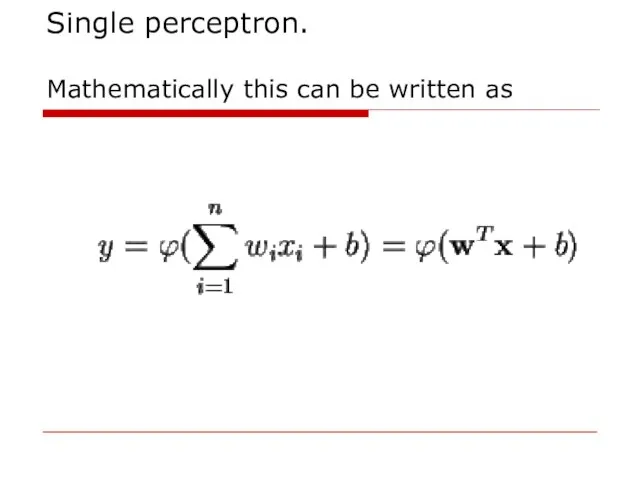

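Slide 8 Single perceptron

Mathematically this can be written as

$$y = f\left(\sum_{i=1}^{n} w_i x_i + b\right)$$

where $x_i$ are the inputs, $w_i$ the input weights, $b$ the bias and $f$ the activation function.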

Slide 10 Task 1:

Write a program that finds the output of a single perceptron.

Note:

Use bias.

The bias shifts the decision boundary away from the origin and does not depend on any input value.
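
As a starting point for Task 1, here is a minimal sketch of a single perceptron with a bias term. The function name `perceptron_output`, its parameters and the sample numbers are assumptions made for illustration; they do not come from the slides.

```python
def perceptron_output(inputs, weights, bias, activation=None):
    """Return activation(sum_i w_i * x_i + bias) for one perceptron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Linear combination of the inputs according to the weights, plus the bias.
    u = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function is optional; without it the raw sum is returned.
    return u if activation is None else activation(u)


# Hypothetical values chosen only for illustration.
print(perceptron_output([2.0, 3.0], [0.5, -1.0], bias=0.25))  # -1.75
```

With all inputs set to zero the output equals the bias passed through the activation, which is one way to see that the bias does not depend on any input value.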

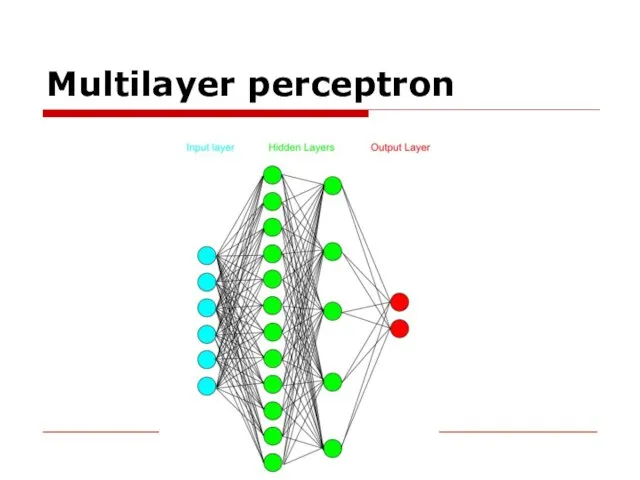

Slide 11 Multilayer perceptron

A multilayer perceptron (MLP) is a class of feedforward artificial neural network.

Slide 13 Structure

• Nodes that are not the target of any connection are called input neurons.

Slide 14

• Nodes that are not the source of any connection are called output neurons.

An MLP can have more than one output neuron.

The number of output neurons depends on the way the target values (desired values) of the training patterns are described.

Slide 15

• All nodes that are neither input neurons nor output neurons are called hidden neurons.

• All neurons can be organized in layers, with the set of input neurons being the first layer.

Slide 16

Rosenblatt's original perceptron used a Heaviside step function as the activation function.
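
A small sketch of a step-activated unit may help here. The value returned at exactly zero is a convention (this sketch assumes 0), and the weights and bias are hypothetical values picked so that the unit behaves like a logical AND.

```python
def heaviside(u):
    """Step activation: 1 if u > 0, else 0 (the value at u == 0 is a convention)."""
    return 1 if u > 0 else 0


# Hypothetical weights and bias chosen so the unit acts like a logical AND.
weights, bias = [1.0, 1.0], -1.5


def fire(x):
    """Weighted sum plus bias, passed through the step function."""
    u = sum(w * xi for w, xi in zip(weights, x)) + bias
    return heaviside(u)


outputs = [fire(x) for x in ([0, 0], [0, 1], [1, 0], [1, 1])]
print(outputs)  # [0, 0, 0, 1]
```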

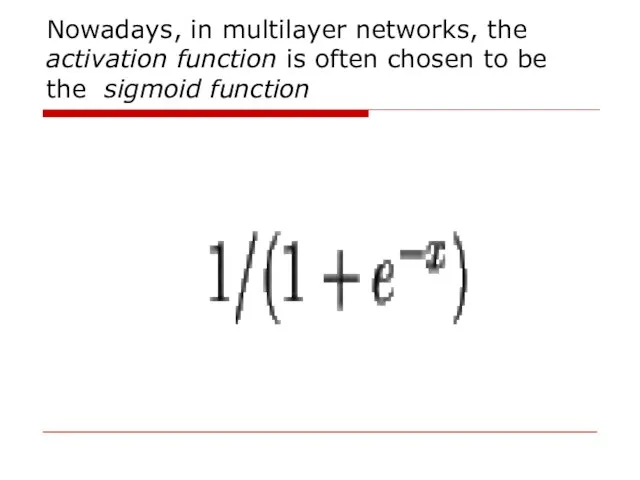

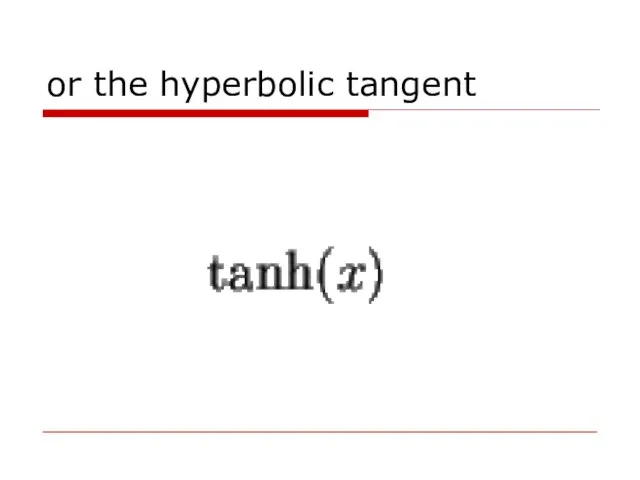

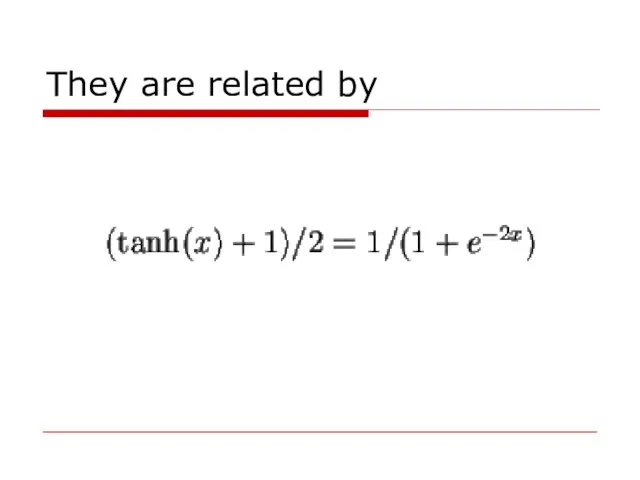

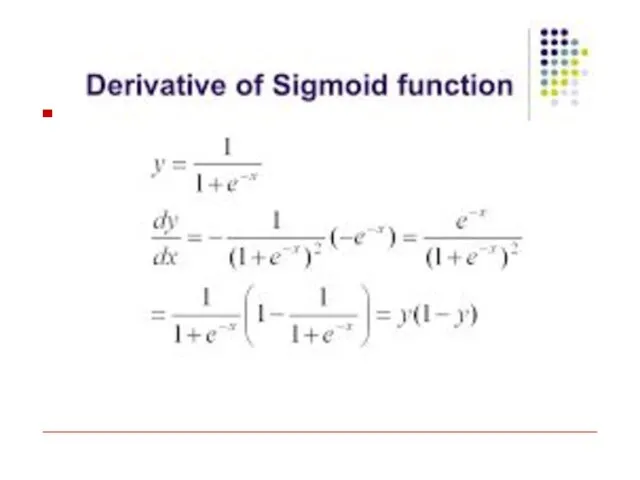

Slide 17

Nowadays, in multilayer networks, the activation function is often chosen to be the sigmoid function.
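
For reference, a minimal sketch of the logistic sigmoid, assuming the usual definition 1 / (1 + e^(-x)). The two-branch form is an implementation choice made here to avoid floating-point overflow for inputs of large magnitude; it is not taken from the slides.

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z is in (0, 1) and cannot overflow
    return z / (1.0 + z)


for x in (-5.0, 0.0, 5.0):
    print(x, round(sigmoid(x), 4))
```

Unlike the step function, the output changes smoothly between 0 and 1, which is what gradient-based training needs.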

Slide 20

These functions are used because they are mathematically convenient: they are smooth and have simple derivatives, which gradient-based training relies on.

Slide 21

An MLP consists of at least three layers of nodes. Except for the input nodes, each node is a neuron that uses a nonlinear activation function.
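
The layered structure can be sketched as a forward pass through a three-layer network. This is an illustration under assumptions: the helper names `layer_forward` and `mlp_forward`, the 2-2-1 layout, the sigmoid activation and all weight values are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """weights[j][i] connects input i to neuron j of this layer."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(x, layers):
    """layers is a list of (weights, biases) pairs, one per non-input layer."""
    activations = x  # input nodes pass their values through unchanged
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 2-2-1 network: 2 inputs, 2 hidden neurons, 1 output neuron.
hidden = ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1])
output = ([[1.0, -1.0]], [0.0])
print(mlp_forward([1.0, 0.0], [hidden, output]))
```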

Slide 22

An MLP is trained with a supervised learning technique called backpropagation.

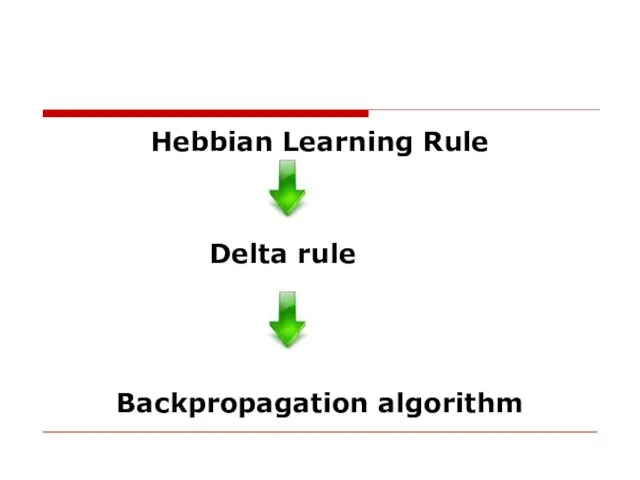

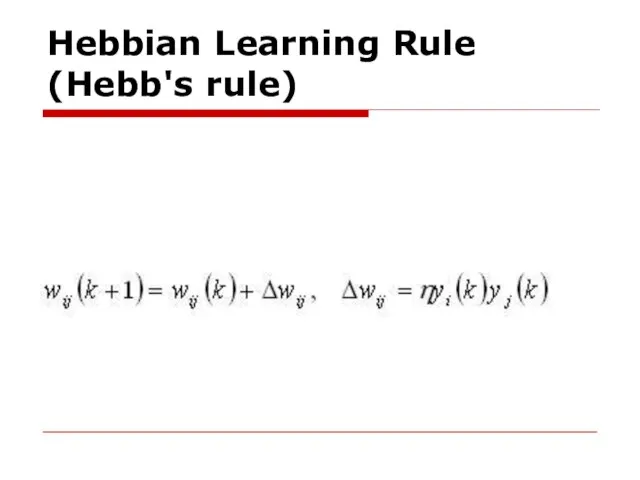

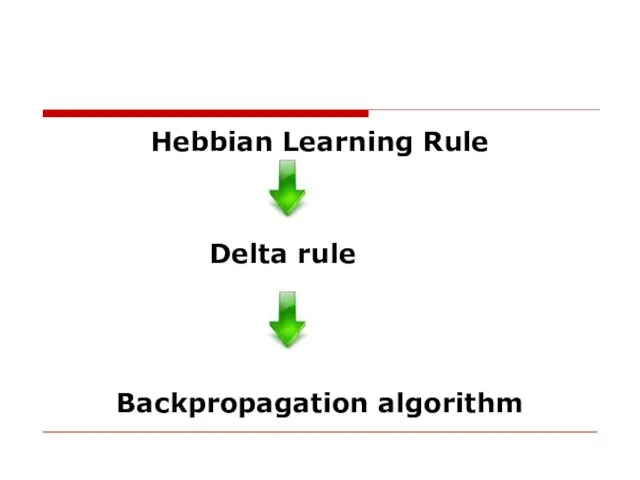

Slide 23

Hebbian Learning Rule

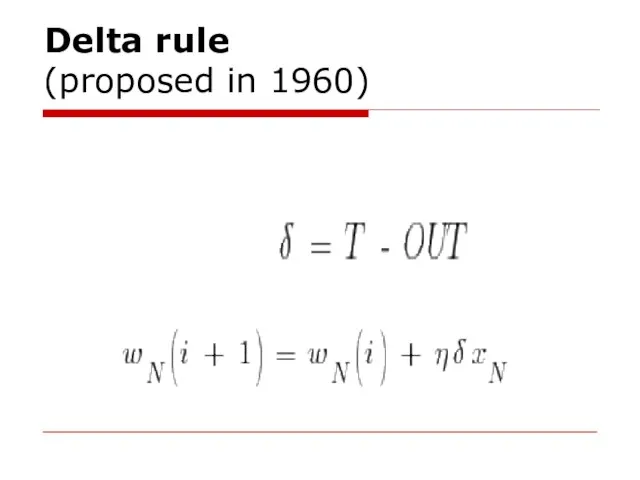

Delta rule

Backpropagation algorithm

Slide 24

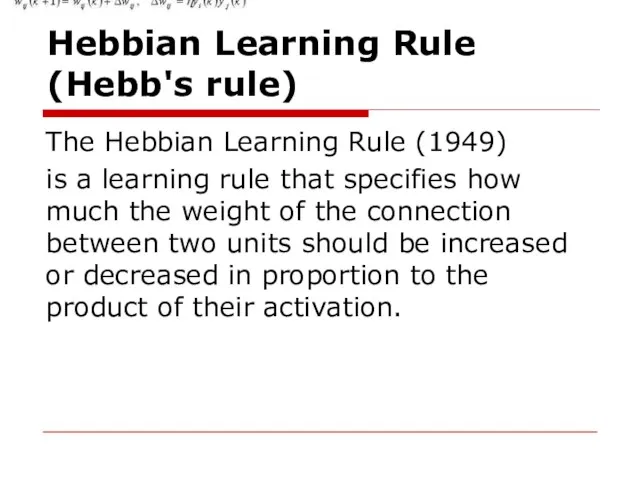

Hebbian Learning Rule (Hebb's rule)

The Hebbian Learning Rule (1949) is a learning rule that specifies how much the weight of the connection between two units should be increased or decreased, in proportion to the product of their activations.
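
One common way to write this rule is Δw = η · x · y, where x and y are the activations of the two connected units. The sketch below assumes that form; the learning rate `eta`, the function name `hebbian_update` and the sample activations are illustrative assumptions.

```python
def hebbian_update(weights, pre, post, eta=0.1):
    """Return new weights with w[j][i] increased by eta * pre[i] * post[j]."""
    return [
        [w + eta * x * y for w, x in zip(row, pre)]
        for row, y in zip(weights, post)
    ]


# Two input units feeding two output units, all weights starting at zero.
w = [[0.0, 0.0], [0.0, 0.0]]
w = hebbian_update(w, pre=[1.0, -1.0], post=[1.0, 0.5])
print(w)
```

Weights between units whose activations share a sign grow, while weights between units with opposite signs shrink.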

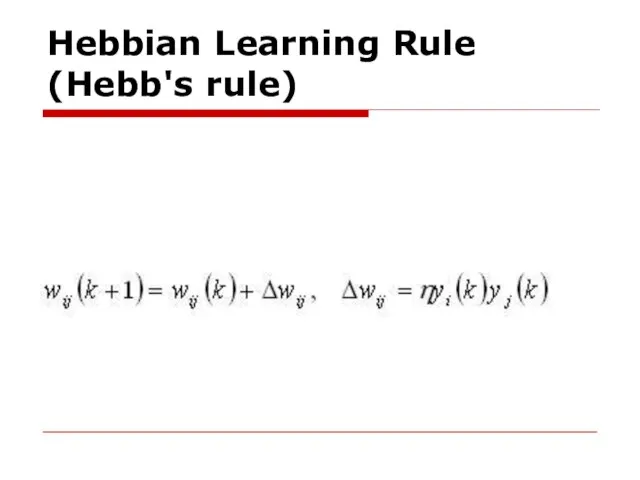

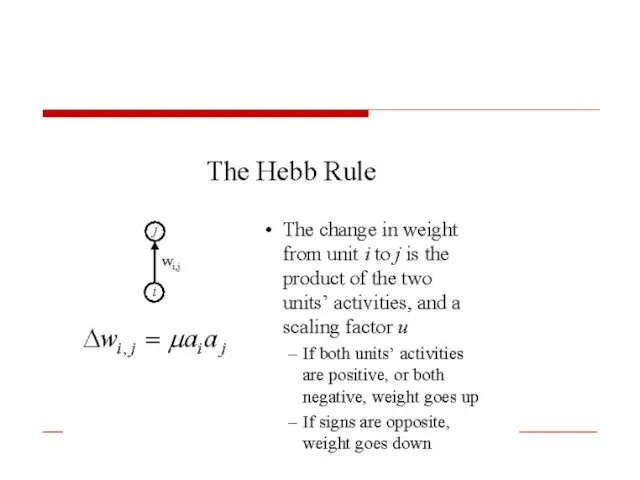

Slide 25 Hebbian Learning Rule (Hebb's rule)

Slide 28

The backpropagation algorithm was originally introduced in the 1970s, but its importance wasn't fully appreciated until a famous 1986 paper by David Rumelhart, Geoffrey Hinton, and Ronald Williams.

Slide 29

That paper describes several neural networks where backpropagation works far faster than earlier approaches to learning, making it possible to use neural nets to solve problems which had previously been insoluble.

Slide 30

Supervised backpropagation: the mechanism of backward error transmission (the delta learning rule) is used to modify the weights of the internal (hidden) and output layers.

Slide 31 Back propagation

The back propagation learning algorithm uses the delta rule. It computes the deltas (local gradients) of each neuron, starting from the output neurons and going backwards until it reaches the input layer.

Slide 32

The delta rule is derived by attempting to minimize the error in the output of the neural network through gradient descent.

Slide 33

To compute the deltas of the output neurons, though, we first have to get the error of each output neuron.

Slide 34

That is straightforward: because the multi-layer perceptron is trained with supervision, the error is the difference between the desired output and the network's output.

$$e_j(n) = d_j(n) - o_j(n)$$

where $e(n)$ is the error vector, $d(n)$ is the desired output vector and $o(n)$ is the actual output vector.
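
The error formula maps directly to code. In this short sketch the function name `output_error` and the sample vectors are assumptions made for illustration.

```python
def output_error(desired, actual):
    """Return e_j = d_j - o_j for every output neuron j."""
    if len(desired) != len(actual):
        raise ValueError("desired and actual must have the same length")
    return [d - o for d, o in zip(desired, actual)]


# Hypothetical desired and actual outputs for a network with two output neurons.
print(output_error([1.0, 0.0], [0.75, 0.25]))  # [0.25, -0.25]
```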

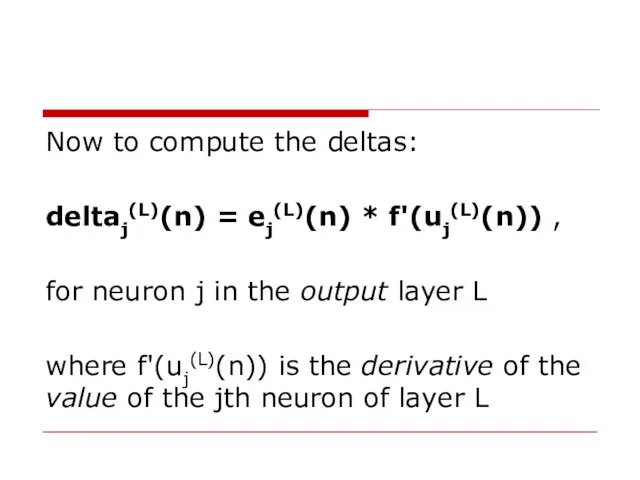

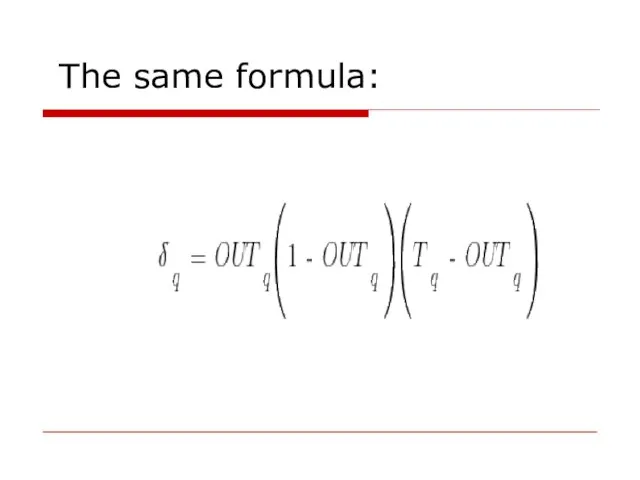

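Slide 35

Now to compute the deltas:

$$\delta_j^{(L)}(n) = e_j^{(L)}(n)\, f'\left(u_j^{(L)}(n)\right)$$

for neuron $j$ in the output layer $L$, where $f'\left(u_j^{(L)}(n)\right)$ is the derivative of the activation function evaluated at the net input $u_j^{(L)}(n)$ of the $j$th neuron of layer $L$.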
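
A minimal sketch of the output-layer deltas, assuming a sigmoid activation so that f'(u) = f(u)(1 - f(u)). The function names and the sample inputs are illustrative assumptions.

```python
import math


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


def sigmoid_prime(u):
    s = sigmoid(u)
    return s * (1.0 - s)


def output_deltas(desired, net_inputs):
    """delta_j = e_j * f'(u_j), with e_j = d_j - f(u_j), for each output neuron."""
    deltas = []
    for d, u in zip(desired, net_inputs):
        error = d - sigmoid(u)  # e_j = d_j - o_j
        deltas.append(error * sigmoid_prime(u))
    return deltas


# One output neuron with net input 0: o = 0.5, e = 0.5, f'(0) = 0.25.
print(output_deltas([1.0], [0.0]))  # [0.125]
```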

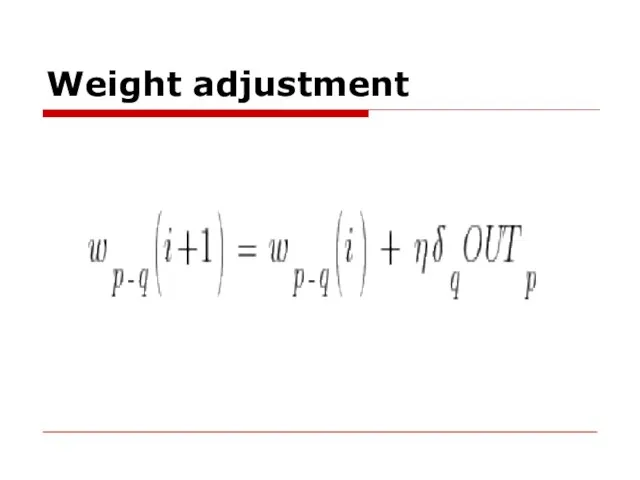

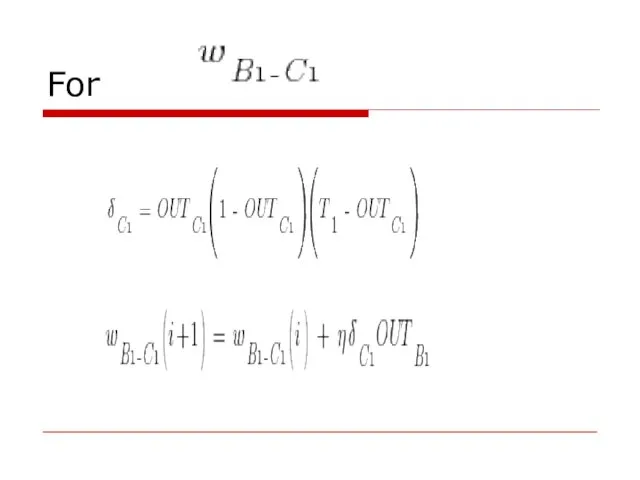

Slide 37 Weight adjustment

Having calculated the deltas for all the neurons, we are now ready for the third and final pass over the network, this time to adjust the weights according to the generalized delta rule:

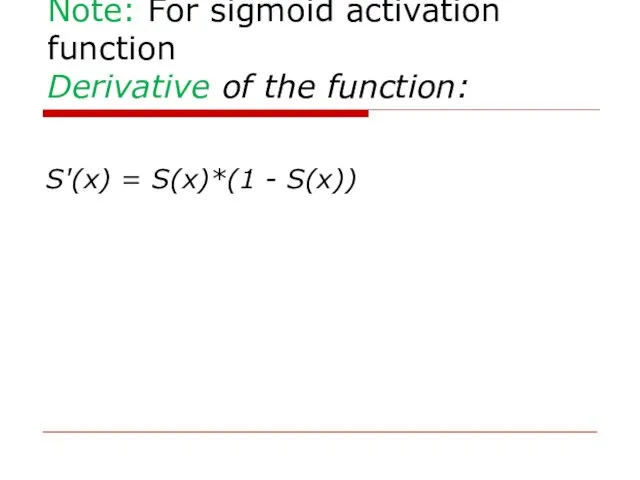

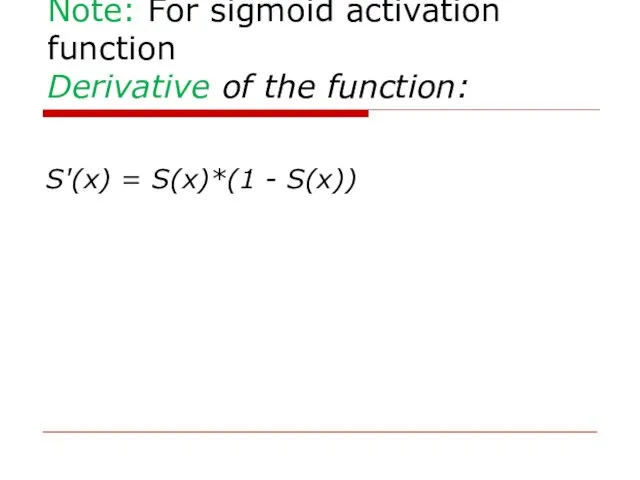
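
A common form of the weight adjustment is w_ji ← w_ji + η · δ_j · y_i, where y_i is the signal entering the connection. The sketch below assumes that form without a momentum term; the learning rate `eta`, the function name `adjust_weights` and the numbers are assumptions, and the slides' own formula may differ.

```python
def adjust_weights(weights, deltas, inputs, eta=0.5):
    """Return new weights with w[j][i] increased by eta * deltas[j] * inputs[i]."""
    return [
        [w + eta * delta * y for w, y in zip(row, inputs)]
        for row, delta in zip(weights, deltas)
    ]


# Hypothetical layer with one neuron and two incoming connections.
new_w = adjust_weights([[0.2, -0.4]], deltas=[0.125], inputs=[1.0, 2.0])
print(new_w)
```

Because the delta carries the sign of the error, each weight moves in the direction that reduces the error for the current pattern.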

Slide 40

Note: for the sigmoid activation function, the derivative is

$$S'(x) = S(x)\,(1 - S(x))$$
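
The identity can be checked numerically. This sketch compares it against a central finite difference; the step size `h` and the sample points are arbitrary choices made for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x):
    """Analytic derivative S'(x) = S(x) * (1 - S(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_derivative(f, x, h=1e-6):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for x in (-2.0, 0.0, 1.5):
    print(x, sigmoid_prime(x), numeric_derivative(sigmoid, x))
```

The two columns should agree to several decimal places, and the derivative peaks at 0.25 when x = 0.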

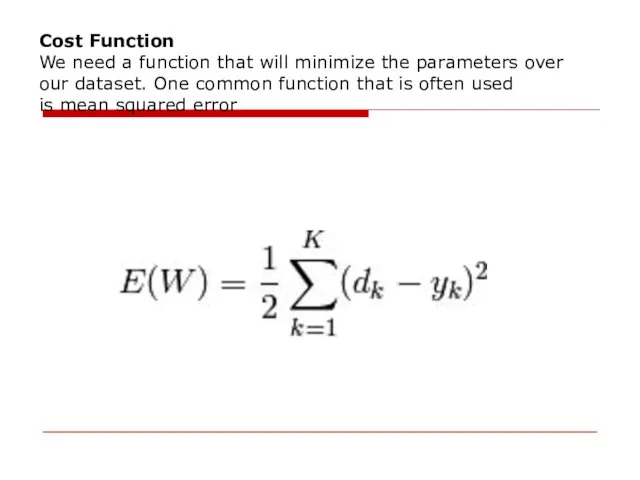

Slide 42

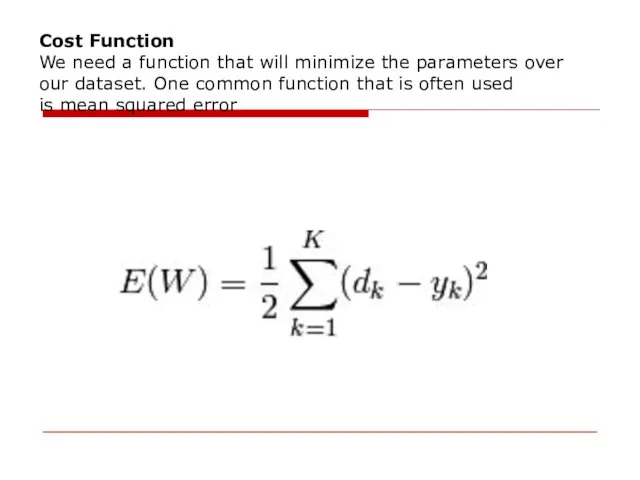

Cost Function

We need a function that measures the error over our dataset, which we can then minimize with respect to the parameters. One commonly used function is the mean squared error.

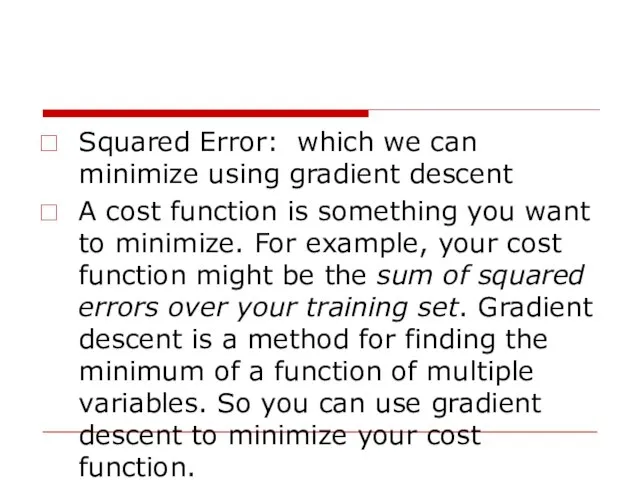
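
A minimal sketch of mean squared error, assuming the plain average of squared differences. Some texts scale it by an extra factor of 1/2, so this may differ from the slide's formula by a constant; the function name and sample data are illustrative.

```python
def mean_squared_error(desired, actual):
    """Average of (d - o)^2 over all samples."""
    if not desired or len(desired) != len(actual):
        raise ValueError("need two non-empty sequences of equal length")
    return sum((d - o) ** 2 for d, o in zip(desired, actual)) / len(desired)


# Hypothetical targets and predictions.
print(mean_squared_error([1.0, 0.0, 1.0], [0.5, 0.5, 1.0]))
```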

Slide 43

Squared error, which we can minimize using gradient descent.

A cost function is something you want to minimize. For example, your cost function might be the sum of squared errors over your training set. Gradient descent is a method for finding a minimum of a function of multiple variables. So you can use gradient descent to minimize your cost function.
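
To make this concrete, the sketch below uses gradient descent to minimize the sum of squared errors of a one-parameter model y = w · x. The model, the data, the learning rate `eta` and the step count are all assumptions chosen so the example converges quickly.

```python
def sse(w, xs, ys):
    """Sum of squared errors of the model y = w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys))


def sse_gradient(w, xs, ys):
    """Derivative of the sum of squared errors with respect to w."""
    return sum(-2.0 * x * (y - w * x) for x, y in zip(xs, ys))


def gradient_descent(xs, ys, w=0.0, eta=0.01, steps=200):
    """Repeatedly step against the gradient to reduce the cost."""
    for _ in range(steps):
        w -= eta * sse_gradient(w, xs, ys)
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2x
w = gradient_descent(xs, ys)
print(w, sse(w, xs, ys))
```

The learning rate matters: too large a value makes the updates overshoot and diverge, while too small a value makes convergence slow.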

Slide 44

Backpropagation performs gradient descent over the entire network's weight vector. In practice, it often works well and can be run multiple times. It minimizes the error over all training samples.

Slide 45 Task 2:

Write a program that can update the weights of a neural network using backpropagation.
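
One possible starting point for Task 2 is the sketch below, which repeats a single backpropagation update on one training pattern. It rests on several assumptions: a 2-2-1 network, sigmoid activations, the standard hidden-layer delta (the weighted sum of output deltas times the sigmoid derivative), a learning rate of 0.5 and hypothetical starting weights.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return hidden and output activations for input x."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w_hidden, b_hidden)]
    o = [sigmoid(sum(w * hi for w, hi in zip(row, h)) + b)
         for row, b in zip(w_out, b_out)]
    return h, o


def backprop_step(x, d, w_hidden, b_hidden, w_out, b_out, eta=0.5):
    """One in-place weight update for a single training pattern (x, d)."""
    h, o = forward(x, w_hidden, b_hidden, w_out, b_out)
    # Output deltas: error times the sigmoid derivative o * (1 - o).
    delta_out = [(dj - oj) * oj * (1.0 - oj) for dj, oj in zip(d, o)]
    # Hidden deltas: output deltas propagated back through the old weights.
    delta_hid = [
        hi * (1.0 - hi) * sum(delta_out[j] * w_out[j][i] for j in range(len(o)))
        for i, hi in enumerate(h)
    ]
    # Weight adjustment: w += eta * delta * signal entering the connection.
    for j, row in enumerate(w_out):
        for i in range(len(row)):
            row[i] += eta * delta_out[j] * h[i]
        b_out[j] += eta * delta_out[j]
    for i, row in enumerate(w_hidden):
        for k in range(len(row)):
            row[k] += eta * delta_hid[i] * x[k]
        b_hidden[i] += eta * delta_hid[i]
    return o


# Hypothetical starting weights and one training pattern.
w_hidden = [[0.15, 0.20], [0.25, 0.30]]
b_hidden = [0.35, 0.35]
w_out = [[0.40, 0.45]]
b_out = [0.60]
x, d = [0.05, 0.10], [0.01]

errors = []
for _ in range(100):
    o = backprop_step(x, d, w_hidden, b_hidden, w_out, b_out)
    errors.append((d[0] - o[0]) ** 2)
print(errors[0], errors[-1])
```

The squared error printed last should be smaller than the first, showing that repeated updates move the output toward the desired value. A fuller solution would loop over many training patterns rather than one.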
